Tuesday, March 16th 2010

GeForce GTX 480 has 480 CUDA Cores?

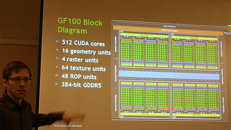

In several of its communications about Fermi as a GPGPU product (next-gen Tesla series) and the GF100 GPU, NVIDIA stated that GF100 has 512 physical CUDA cores (shader units) on die. In the run-up to the launch of the GeForce 400 series, however, it appears that the GeForce GTX 480, the higher-end part in the series, will have only 480 of its 512 physical CUDA cores enabled, sources at add-in card manufacturers confirmed to Bright Side of News. This means that 15 out of 16 SMs will be enabled. The card has a 384-bit GDDR5 memory interface holding 1536 MB of memory.

This could be seen as a move to keep the chip's TDP down and help with yields. It's unclear whether this is a late change; if it is, benchmark scores of the product could differ when it's finally reviewed at launch. The publication believes that the GeForce GTX 480 targets a price point around $449-$499, while the GeForce GTX 470 is expected to be priced at $299-$349. The GeForce GTX 470 has 448 CUDA cores and a 320-bit GDDR5 memory interface holding 1280 MB of memory. In another report by DonanimHaber, the TDP of the GeForce GTX 480 is expected to be 298W, with the GeForce GTX 470 at 225W. NVIDIA will unveil the two on the 26th of March.
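The reported figures can be sanity-checked with a little arithmetic. The sketch below is only an illustration: it assumes the 512 cores are spread evenly across the 16 SMs (which is what "15 out of 16 SMs" giving 480 cores implies), and the helper names `enabled_sms` and `mb_per_bus_bit` are made up for this example.

```python
# Figures quoted in the report; the even split of cores across SMs is an assumption.
TOTAL_CORES = 512
TOTAL_SMS = 16
CORES_PER_SM = TOTAL_CORES // TOTAL_SMS  # 32 cores per SM if evenly divided


def enabled_sms(enabled_cores: int) -> int:
    """Return how many whole SMs a given enabled-core count corresponds to."""
    sms, remainder = divmod(enabled_cores, CORES_PER_SM)
    if remainder:
        raise ValueError("core count is not a whole number of SMs")
    return sms


def mb_per_bus_bit(memory_mb: int, bus_width_bits: int) -> float:
    """Return memory capacity per bit of memory bus width."""
    return memory_mb / bus_width_bits


# name: (enabled cores, bus width in bits, memory in MB), as rumored
cards = {
    "GeForce GTX 480": (480, 384, 1536),
    "GeForce GTX 470": (448, 320, 1280),
}

for name, (cores, bus, mem) in cards.items():
    print(f"{name}: {enabled_sms(cores)} of {TOTAL_SMS} SMs, "
          f"{mb_per_bus_bit(mem, bus):.0f} MB per bus bit")
```

Under that assumption, the GTX 470's 448 cores would correspond to 14 SMs, and both cards carry the same amount of memory per bit of bus width, so the narrower 320-bit interface accounts for the smaller 1280 MB frame buffer.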

Sources:

Bright Side of News, DonanimHaber


79 Comments on GeForce GTX 480 has 480 CUDA Cores?

As Wolf said: bring on the reviews.

IMO it's mostly a good thing, because the only significant move (to the higher clock) is the TMUs. The setup engine, rasterizer and tessellators are supposedly much smaller than SPs, TMUs or ROPs, so they should not have any effect on keeping the shader domain from reaching higher clocks, or on the temperatures and stability of the GPC, IMO. The units that are supposed to be more sensitive to clocks, like the ROPs and L2, remain at the slower core clock.

The architecture of both GPUs and the software they run hasn't changed fundamentally. So until that does, all of the hype on upcoming GPUs as we know them, is neither here nor there.

What we'll get is another powerful card that still falls down like all the rest, in all the same places, for all the same reasons, the same reasons that have existed for the last decade or more.

To me that's not impressive. I like big, I like powerful, it's how I like my vehicles, but not my computer components. I'm tired of getting bigger cases, bigger motherboards, bigger radiators and bigger PSUs, only to have the overrated max FPS of a game go plummeting straight back down to twenty-five frames because another character strolled onto the screen, and all these supposed DirectX special effects, which unfortunately we cannot actually see, have just sucked away the performance.

Don't get me wrong, I'm pro DirectX. When people were crying and whining about DX10 being a failure, I wasn't. I understood, I got it. Had you tried to run a lot of the background processing of DX10 on DX9 (if it was possible), it wouldn't have been a pretty sight, and DX11 brings some much-needed tools for developers.

But I'm just not 'pro waiting six months or more every year' to see these 'fabled' graphics processors be put on a pedestal, and be released and yet don't provide anything really tangible over the last generation.

Considering brute power alone, and computational flexibility, something like a GTX 295 or 4870X2 should be MORE than enough for modern games, and they usually are. Heck, I can run the X2 at clocks of 500/500 in about 90% of modern games and still have over 50fps. But then you get those moments where it all comes crashing down, and no matter how powerful the cards, it never ends.

plus

All brand new architectures are ahead of their time, because no developer will spend oodles of money and time to develop a game for a hardware feature that 0.0001% of the gaming market has. (Sometimes a token game comes out with sponsorship from ATI/NV, but it changes nothing.)

It's just exciting because these cards do bring something new to the table... unlike the 4xxx or GT200 or G92 or RV670. It's been a long time since that has happened.

disabled SM to control TDP

:roll: and there are fools who support that.....

All these ongoing delays and lack of communication from Nvidia, and those rumours about insanely high TDP, clearly point to a disastrous release from Nvidia. If they were confident in their product, they would brag about it to no end instead of hiding it far from the rogue benchmarkers.

more HERE

So 87 cores ready at least :p Wonder if they leave that marker stuff under IHS or those are just quality assurance samples.

Oh nooooo! Fermi A3 silicon also has 2% yields. Because we can clearly see a number 2 written over that die and as everybody knows when a company gets few samples back from factory they write numbers on them (and only when they get very few of them, otherwise they would never write over them, it would be stupid to do so) and always ALWAYS show the one with the higher number, in this case a 2 (in other a 7 :)). That number clearly means 2% yields. :rolleyes:

This is no attack on Nvidia; it's just fair to recognize when there's a disappointment. That's how human civilization has evolved, learning from errors.

ATi, for instance, failed with their HD2xxx/3xxx series, that's why I kept my X1650Pro a little longer. Nvidia delivered the mythical G92 and the sole reason I chose my HD4830 over the 9800GT was the price (in my country Nvidia is really overpriced).

Nvidia failed with the FX series and ATi triumphed with the 9xxx (9 a lucky number?).

There's no need to fight; even a fanboy has to recognize when his/her company screwed up.

More rumors? See this:

www.semiaccurate.com/2010/02/20/semiaccurate-gets-some-gtx480-scores/