Friday, March 16th 2012

GTX 680 Generally Faster Than HD 7970: New Benchmarks

For skeptics who refuse to believe randomly sourced bar graphs of the GeForce GTX 680 that are starved of pictures, here is the first set of benchmarks run by a third party (neither NVIDIA nor one of its AIC partners). This [p]reviewer from HKEPC has pictures to back his benchmarks. The GeForce GTX 680 was pitted against a Radeon HD 7970 and a previous-generation GeForce GTX 580. The test bed consisted of an extreme-cooled Intel Core i7-3960X Extreme Edition processor (running at stock frequency), an ASUS Rampage IV Extreme motherboard, 8 GB (4x 2 GB) of GeIL EVO 2 DDR3-2200 MHz quad-channel memory, a Corsair AX1200W PSU, and Windows 7 x64.

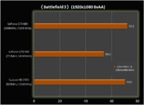

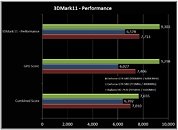

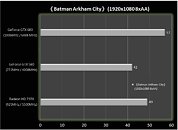

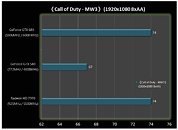

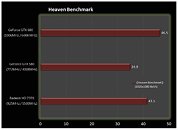

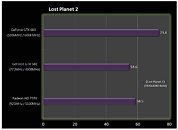

Benchmarks included 3DMark 11 (Performance preset), Battlefield 3, Batman: Arkham City, Call of Duty: Modern Warfare 3, Lost Planet 2, and Unigine Heaven (version not mentioned, could be 1). All tests were run at a constant resolution of 1920x1080, with 8x MSAA on some tests (noted in the graphs). More graphs follow.

Source:

HKEPC


273 Comments on GTX 680 Generally Faster Than HD 7970: New Benchmarks

The good thing: AMD will be forced to lower their prices on current offerings.

The bad thing: nVidia will probably sell these @ the price AMD is selling theirs right now and charge even more for the top cards (assuming this is the mid-range card).

I really was hoping for a tighter race here. I don't care who wins as long as it's done so by a small margin so that more aggressive pricing is warranted.

This is bad ... :(

The GTX 680 here is running at different clocks...

This is the correct picture from source... (not killing the 7970.) www.hkepc.com/7672/page/6#view

Just realised....

772 --> 1006 = 30% core clock increase

4008 ---> 6008 = 50% memory clock increase

54.2 ---> 72.2 fps = 33% fps boost
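The percentages above are plain relative increases, (new - old) / old. A minimal Python sketch that recomputes them from the quoted figures shows the rounding holds up. It assumes the 4008/6008 numbers are effective memory data rates, which the comment does not say explicitly.

```python
def pct_increase(old: float, new: float) -> float:
    """Percentage increase going from `old` to `new`."""
    return (new - old) / old * 100.0

# Figures quoted in the comment (GTX 580 -> GTX 680).
pairs = {
    "core clock (MHz)": (772, 1006),
    "memory (effective MHz, assumed)": (4008, 6008),
    "fps": (54.2, 72.2),
}

for label, (old, new) in pairs.items():
    print(f"{label}: {old} -> {new} = {pct_increase(old, new):.1f}%")
```

The memory figure comes out just under 50%, so the rounded numbers in the comment are fair.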

Now, given the clocks are 30% higher and there is likely an AA algorithm at work, I've just had a terrible dawning of, "it's not a super performer at all". The 28nm process simply allows for faster clocks. And with some clever tech helping out AA (which isn't a bad thing), it takes the biscuit.

But I'm just thinking if those 1GHz clocks and memory speed are true then it's simply a logical performance increase based on clocks, not architecture.

Need to differentiate between a high-end card/SKU and a high-end chip. They're not the same. AMD's X2 cards were high-end cards made of 2 mid-range GPUs. That is what their "small die" strategy is about. Apparently now Nvidia will do the same, except with 1 GPU lol.

I was only expecting more of it because of all the "great" HYPE this card got! IF Nvidia did cut out the top-performing card and send out its mid-range chip in its place, that is a TOTAL low blow to the customers. If they have/had a monster, they should have put it on the shelves. Lord knows they always charge top $$$, so we might as well get top performance, and when I say "top performance" I don't mean 5% - 10% more performance :o

3DMark11 Performance - 41.4%

BF3 - 33%

Batman AC - 35.7%

COD MW3 - 10%

Heaven Benchmark - 33.2%

Lost Planet 2 - 34.4%

This is how much faster it is over the GTX 580. Not bad at all for the 'mainstream card'. But again, those clock speeds make a big diff.
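To boil the six figures above down to one number, a common convention (our assumption, not something the commenter did) is the geometric mean of the speed-up ratios (1 + gain/100), which is the usual way to average ratios across benchmarks. The sketch below computes it next to the plain arithmetic mean; the numbers are copied from the list and nothing else is assumed.

```python
import math

# Percentage leads of the GTX 680 over the GTX 580, as listed in the comment.
gains_pct = {
    "3DMark 11 Performance": 41.4,
    "Battlefield 3": 33.0,
    "Batman: Arkham City": 35.7,
    "CoD: MW3": 10.0,
    "Unigine Heaven": 33.2,
    "Lost Planet 2": 34.4,
}

def geomean_gain(pcts):
    """Geometric mean of speed-up ratios, returned as a percentage gain."""
    ratios = [1.0 + p / 100.0 for p in pcts]
    log_mean = sum(math.log(r) for r in ratios) / len(ratios)
    return (math.exp(log_mean) - 1.0) * 100.0

arith = sum(gains_pct.values()) / len(gains_pct)
geo = geomean_gain(gains_pct.values())
print(f"arithmetic mean: {arith:.1f}%  geometric mean: {geo:.1f}%")
```

Either way the average lands a little above 30%, with the CoD MW3 result pulling it down.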

Given that a 14% clock increase on a GTX 580 Lightning resulted in a 10% higher 3DMark 11 Performance score in this review (www.guru3d.com/article/msi-geforce-gtx-580-lightning-review/20), you could argue that an X% clock increase yields a performance increase of about 70% of X.

So by default, with nothing else considered, the architecture explains 30% of its improvement but its higher clocks make up 70%. Or in layman's terms, more than 2/3 of the extra fps from the GTX 680 are due to higher clocks.

These are simple percentages based on the figures. Not opinions and not conjecture. I know folks will argue, but I don't care; to me, the clocks make the biggest impact.
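The reasoning in this post can be written out as a crude linear model. The sketch below assumes, as the commenter does, that performance rises by a fixed fraction k of any clock increase, estimates k from the single GTX 580 Lightning data point (10 / 14, about 0.71), and then asks how much of the fps gain from the earlier 772 to 1006 MHz comparison that factor would account for. It ignores memory bandwidth, drivers and architectural effects, so treat it as a rough sanity check rather than a measurement; the function names are hypothetical.

```python
def scaling_factor(clock_gain_pct: float, perf_gain_pct: float) -> float:
    """Fraction of a clock increase that showed up as extra performance."""
    return perf_gain_pct / clock_gain_pct

def clock_share(clock_gain_pct: float, perf_gain_pct: float, factor: float) -> float:
    """Share of an observed performance gain attributable to clocks,
    assuming performance rises by `factor` x the clock gain (linear model)."""
    expected_from_clocks = factor * clock_gain_pct
    return min(expected_from_clocks / perf_gain_pct, 1.0)

# GTX 580 Lightning data point: +14% clock -> +10% 3DMark 11 score.
k = scaling_factor(14.0, 10.0)
# GTX 580 -> GTX 680 figures quoted earlier in the thread.
clock_gain = (1006 - 772) / 772 * 100   # core clock gain, %
fps_gain = (72.2 - 54.2) / 54.2 * 100   # fps gain, %
share = clock_share(clock_gain, fps_gain, k)
print(f"scaling factor {k:.2f}, clocks explain ~{share:.0%} of the fps gain")
```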

Need a good W1zz review to make it all clear.

And I like reviews...

It gives me the right to post things and then demand validation of my thoughts.

Thanks for giving me an excuse to reply!! :laugh:

Okay, next question for Btarunr and W1zz.

WHEN IS THE FRICKIN' NDA UP?

a) It looks like a mid-range chip and is named like a mid-range chip, but it was all part of a covert plan to confuse people. (And to badly lose the high end to AMD, had Tahiti performed as it should -> comparably as well as Pitcairn)

b) It looks like a mid-range chip and is named like a mid-range chip because it IS a mid-range chip.

Occam's Razor == b) ;)

We are discussing different things. I'm not discussing which price segment it belongs to now, but which chip in the Kepler line this is. Some people now even pretend that GK100 and GK110 never existed and never will, but it does exist and will be released. Just because it may come several months later does not change the fact that it will, that it will be Kepler, and that it will be bigger than GK104. So given that a faster, bigger Kepler chip is going to be released and was always planned to be released, GK104 is NOT, never was, and never will be a high-end chip. It cannot be high-end when there's something bigger on top of it. Period.

NDA lift is the 22nd of March IIRC, and that's a further reason why I don't FULLY believe/trust any of these released "stats" ;)

If both cards were at the same clocks, I think the 7970 would just barely pass it (1% - 2%) or they would be dead even.
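A "same clocks" comparison can be approximated by scaling one card's result to the other's core clock. The sketch below is a hypothetical helper under an explicit assumption: fps changes by `factor` times the relative core-clock change (1.0 means perfectly linear; the rule of thumb earlier in the thread suggests something nearer 0.7). It does not model memory clocks or anything else.

```python
def per_clock_ratio(fps_a: float, clock_a_mhz: float,
                    fps_b: float, clock_b_mhz: float,
                    factor: float = 1.0) -> float:
    """Ratio of card A's fps to card B's fps after scaling B to A's core clock.

    Assumes fps moves by `factor` x the relative clock change (a crude model).
    A result above 1.0 means A is faster clock for clock.
    """
    clock_gain = clock_a_mhz / clock_b_mhz - 1.0
    fps_b_at_a_clock = fps_b * (1.0 + factor * clock_gain)
    return fps_a / fps_b_at_a_clock
```

To use it, plug in fps figures read from the HKEPC graphs together with the GTX 680's 1006 MHz and the HD 7970's 925 MHz reference core clock, e.g. `per_clock_ratio(fps_680, 1006, fps_7970, 925)` (the `fps_*` names are placeholders); a result near 1.0 would mean the two are roughly even clock for clock.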

Hmm, that's weird, because isn't Nvidia the one with the fairy girl and AMD the one with Ruby? How did AMD get that fairy dust first, and for so many generations?!??! :mad::cool:

GF 104 = GTX 460

GF 100 = oven, I mean GTX 480

GF 114 = GTX 560

GF 110 = GTX 580

GK 104 = GTX 660, whoops, GTX 680

GK 100 or 110 = GTX 680, whoops, GTX 780?

Always so feisty Ben :rolleyes: Process shrinks allow faster clocks due to all the power hoo-ha that happens at smaller scales. I can't explain that in technical terms, but I know it's a facet of process shrinking, up to a logical point. I know the architecture is different, but if 40nm allowed 1GHz clocks we'd be seeing far smaller improvements.

It's all moot anyway. I'm looking forward to seeing the pricing, and then I can decide what to get until GK100/110 comes out :cool: