Tuesday, February 19th 2013

AMD "Jaguar" Micro-architecture Takes the Fight to Atom with AVX, SSE4, Quad-Core

AMD has hedged its low-power CPU bets on the "Bobcat" micro-architecture for the past two years. Meanwhile, Intel's Atom line of low-power chips has caught up in power efficiency, CPU performance, and, to an extent, iGPU performance, and recent models even feature out-of-order execution. AMD has now unveiled its next-generation "Jaguar" low-power CPU micro-architecture for APUs in the 5 W to 25 W TDP range, targeting everything from tablets to entry-level notebooks and nettops.

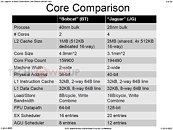

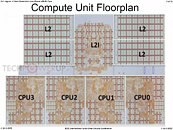

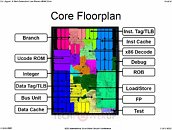

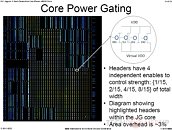

At its presentation at the 60th ISSCC 2013 conference, AMD detailed "Jaguar," revealing a few killer features that could restore the company's competitiveness in the low-power CPU segment. To begin with, APUs with CPU cores based on this micro-architecture will be built on TSMC's 28-nanometer HKMG process. Jaguar allows for up to four x86-64 cores. The four cores, unlike Bulldozer modules, are completely independent, and only share a 2 MB L2 cache.

"Jaguar" x86-64 cores feature a 40-bit wide physical address (Bobcat features 36-bit), 16-byte/cycle load/store bandwidth, which is double that of Bobcat, a 128-bit wide FPU data-path, which again is double that of Bobcat, and about 50 percent bigger scheduler queues. The instruction set is where AMD is looking to rattle Atom. Not only does Jaguar feature out-of-order execution, it also supports instruction sets found on mainstream CPUs, such as AVX (Advanced Vector Extensions) and SIMD instruction sets such as SSSE3, SSE4.1, SSE4.2, and SSE4A, all of which are widely adopted by modern media applications. Also added is AES-NI, which accelerates AES data encryption. In the efficiency department, AMD claims to have improved its power-gating technology, which completely cuts power to inactive cores to conserve battery life.
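For readers who want to see whether a given machine exposes the extensions listed above, here is a minimal sketch in Python. It assumes a Linux system, where the kernel reports CPU features on the `flags` line of `/proc/cpuinfo`. The `REQUIRED` table and the helper names `parse_flags` and `check_features` are illustrative and do not come from AMD's presentation.

```python
# Sketch: look for the extensions named in the article on a Linux machine.
# Assumption: /proc/cpuinfo is available and uses the kernel's usual flag
# spellings (avx, ssse3, sse4_1, sse4_2, sse4a, aes).

REQUIRED = {
    "AVX": "avx",
    "SSSE3": "ssse3",
    "SSE4.1": "sse4_1",
    "SSE4.2": "sse4_2",
    "SSE4A": "sse4a",
    "AES-NI": "aes",
}


def parse_flags(cpuinfo_text):
    """Return the set of feature flags from the first 'flags' line."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            return set(value.split())
    return set()


def check_features(cpuinfo_text):
    """Map each extension name to True/False depending on its flag."""
    flags = parse_flags(cpuinfo_text)
    return {name: flag in flags for name, flag in REQUIRED.items()}


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo") as f:
            text = f.read()
    except OSError:
        text = ""  # not Linux, or file unreadable: everything reports "no"
    for name, present in check_features(text).items():
        print(f"{name:7} {'yes' if present else 'no'}")
```

On a non-Linux system the script simply reports every extension as absent, so a "no" there says nothing about the hardware.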


71 Comments on AMD "Jaguar" Micro-architecture Takes the Fight to Atom with AVX, SSE4, Quad-Core

That "32ns" sounds a lot like tRFC on DDR3 chips, not access latency.

Yeah, there might be more latency, it's possible, but I don't think it will make that much of a difference. Also with more bandwidth you can load more data into cache in one clock than DDR3. So I think the benefits will far outweigh the costs.

So 45+45+ say 45 again (CPU) is 135 W+!! Something has to be amiss.

And the highest-end Jaguar APU with its graphics cores (128 of them?) is rated at 25 W, with a much higher clock speed than 1.6 GHz (AMD said in their presentation that Jaguar will clock 10-15% higher than what Bobcat would've clocked at 28 nm), so you're talking at least over 2 GHz.

Llano had 400 outdated Radeon cores and 4 K10.5 cores clocked at least 1.6 GHz before turbo, so expect Jaguar to be much more efficient on a new node and a power-efficient architecture: say 25 W max for the CPU cores only, if not less. That leaves them with 75-100 W of headroom to work with (think HD 7970M rated at 100 W, that's 1280 GCN cores at 800 MHz; this would have 1152 GCN cores at 800 MHz, and after a year of optimization it's easily at 75 W), adding up to 100-125 W, which is very reasonable. Since it's an APU you just need one proper cooler; think of graphics cards rated at 250 W that only require one blower fan and a dual-slot cooler to cool both the GDDR5 chips and the GPU. In other words, the motherboard and the chip can be as big as an HD 7970 (but with 100-125 W you only need something the size of an HD 7850, which is rated at 110-130 W), plus of course the BR drive and other goodies. Main point is cooling is no problem unless multiple chips are involved, which would require cooling the case in general rather than the chip itself with a graphics-card-style cooler.

More like between HD 7850 and HD 7970M. It seems 800 MHz is the sweet spot in terms of performance/efficiency/die size, considering an HD 7970M with 1280 GCN cores at 800 MHz is at 100 W versus 110 W measured/130 W rated on an HD 7850 with 1024 cores at 860 MHz.

Not to mention the mobile Pitcairn loses 30-75 W measured/rated when clocked at 800 MHz (advertised TDP on desktop Pitcairn is 175 W but measured at 130 W according to the link I have below).

www.guru3d.com/articles_pages/amd_radeon_hd_7850_and_7870_review,6.html

here is a reference in regards to the measured tdp, because advertised tdp by amd is higher but also consider other parts on the board and allowing overclock headroom or whatever the case is
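The power-budget estimate in the comment above can be written out as a small sketch. The numbers are the commenter's own guesses (25 W for the CPU cores, 75-100 W for the GPU portion), not measured or official figures, and the function name `total_range` is made up for illustration.

```python
# Rough power-budget sum using the commenter's estimates, in watts.
CPU_MAX_W = 25           # "25 W max for the CPU cores only" (commenter's guess)
GPU_RANGE_W = (75, 100)  # optimized estimate vs. HD 7970M-like rating (guess)


def total_range(cpu_w, gpu_range_w):
    """Add a fixed CPU budget to the low and high ends of a GPU budget."""
    low, high = gpu_range_w
    return cpu_w + low, cpu_w + high


low, high = total_range(CPU_MAX_W, GPU_RANGE_W)
print(f"Estimated APU budget: {low}-{high} W")  # Estimated APU budget: 100-125 W
```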

GDDR5 itself can do whatever it wants, there are no packages or CPU IMCs that will handle it though, that does not mean that it can not be used. PS4 is lined up to use GDDR5 for system and graphics memory and I suspect that Sony isn't just saying that for shits and giggles.

Also it's not all that different. Latencies are different, and performance is (somewhat, not a ton) optimized for bandwidth over latency, but other than that, communication is about the same sans two control lines for reading and writing. It's a matter of how that data is transmitted, but your statement here is really actually wrong.

Just because devices don't use a particular bit of hardware to do something doesn't mean that you can't use that hardware to do something else. For example, for the longest time low-voltage DDR2 was used in phones and mobile devices and not DDR3. Does that mean that DDR3 will never get used in smartphones? Most of us know the answer to that and it's a solid no. GDDR5 is no different. Just because it works best on video cards doesn't mean that it cannot be used with a CPU built with a GDDR5 memory controller.

many thanks:toast:

PS4 is NOT a PC... But if what you all say is true, then why has nobody introduced GDDR5 for PC?? It has been on video cards for a long time. And why is it called Graphics DDR then?

EDIT: With a custom OS.

Just because GDDR5 isn't a PC memory standard doesn't mean it can't be used as system main memory. It would have comparatively high latency to DDR3, but it still yields high bandwidth. GDDR5 stores data in the same ones and zeroes as DDR3, SDR, and EDO.
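To put the bandwidth point in rough numbers, peak theoretical bandwidth is bus width in bytes times transfer rate. The sketch below uses two illustrative configurations that are assumptions and are not stated in this thread: dual-channel DDR3-1600 (128-bit, 1.6 GT/s) and a 256-bit GDDR5 interface at 5.5 GT/s. The function name is likewise made up, and the calculation says nothing about latency.

```python
def peak_bandwidth_gb_s(bus_width_bits, gigatransfers_per_s):
    """Peak bandwidth in GB/s: bytes per transfer times transfers per second."""
    return bus_width_bits / 8 * gigatransfers_per_s


# Assumed configurations, for illustration only.
ddr3 = peak_bandwidth_gb_s(128, 1.6)   # dual-channel DDR3-1600
gddr5 = peak_bandwidth_gb_s(256, 5.5)  # 256-bit GDDR5 at 5.5 GT/s

print(f"DDR3 (assumed config):  {ddr3:.1f} GB/s")
print(f"GDDR5 (assumed config): {gddr5:.1f} GB/s")
```

These are theoretical ceilings; sustained bandwidth in practice is lower and depends on access patterns and the memory controller.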

You're just digging yourself into a hole.

I'm guessing latency is not as much of an issue when it comes to a specific design for a console rather than a broad compatibility design for PC.

this gives game devs the ability to split that 8GB up at will, between CPU and GPU. that could really extend the life of the console, and its capabilities.

The N64 was a console and developers actually released titles on it, but this doesn't change the fact of how horrid the memory latency really was on that system, and how much extra effort and work the programmers had to put in to get over that huge limitation (probably the main reason why Nintendo introduced 1T-SRAM in the GameCube, which was basically eDRAM on die).

If you really want to split unified memory into CPU and GPU memory (you can't btw, but let's assume you could), it's extremely unlikely that developers will use more than 1-2 GB for "video memory" in the PS4, not only because the bandwidth would not be enough to use more, but also because it's simply not needed (OK, the PS4 is extremely powerful on the bandwidth side and perhaps there will be some new rendering technique in the future which we don't know about yet, but current methods like deferred rendering, voxels, megatexturing, etc. will run just fine using only 1-2 GB for "rendering").