Thursday, July 18th 2013

More Core i7-4960X "Ivy Bridge-E" Benchmarks Surface

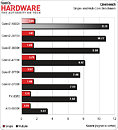

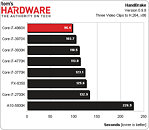

More benchmarks of Intel's upcoming socket LGA2011 flagship client processor, the Core i7-4960X "Ivy Bridge-E," surfaced on the web. Tom's Hardware scored an engineering sample of the chip, and wasted no time in comparing it with contemporaries across three previous Intel generations, and AMD's current generation. These include chips such as the i7-3970X, i7-4770K, i7-3770K, i7-2700K, FX-8350, and A10-5800K.

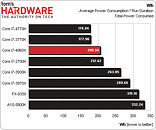

In synthetic tests, the i7-4960X runs neck and neck with the i7-3970X, offering a 5 percent performance gain at best. It's significantly faster than the i7-3930K, Intel's $500-ish offering for over seven quarters running. Its six cores and twelve SMT threads give it a definite edge over quad-core Intel parts in multi-threaded synthetic tests. In single-threaded tests, the $350 i7-4770K is highly competitive with it. The only major surprise on offer is power draw. Despite its TDP being rated at 130 W, on par with the i7-3960X, the i7-4960X "Ivy Bridge-E" offers significantly higher energy efficiency, which can be attributed to the 22 nm process on which it's built, compared to its predecessor's 32 nm process. Find the complete preview at the source.

Source: Tom's Hardware


72 Comments on More Core i7-4960X "Ivy Bridge-E" Benchmarks Surface

Haswell-E, if I remember correctly, will shift the enthusiast line to 6- and 8-core CPUs with the next chipset.

Higher IPC from Intel CPUs also helps a ton on minimum framerates, average doesn't tell the whole story.

Are you completely sure that an AMD octa-core can take, or starts to take, the lead in a multithreaded game scenario against an Intel hexa-core?

If so please show me proof because every single overclock review I've seen shows the AMD CPU way behind.

I'm betting the CPUs here don't even get properly stressed.

Throw in one or a couple more GPUs and you'll see the difference.

Win, lose, or draw, it shows the minimum FPS on the AMD side for multiple games. That means there is something within the AMD setup allowing better performance.

You can argue GPU-bound vs CPU-bound all you want, but in the real world those graphs show it all: there will be no difference in actual performance while gaming. Mind you, that is basically a stock-clocked 9590 as well.

Wanna bet the average/maximum and minimum framerate with a 5GHz 3930/3960 will always be favouring the Intel CPU?

Take a look here, how the game changes when you add more than one GPU :)

www.anandtech.com/show/6985/choosing-a-gaming-cpu-at-1440p-adding-in-haswell-/7

Two GPUs in Dirt 3 and it is within 10%. I would honestly be curious how it did with one of the boards running PCI-E 3.0 vs 2.0. It appears to have made a huge difference with the Intel chips.

What I really don't like about the AMD CPU (for gaming) is the fact it blows on poorly threaded games.

It's a really good multithreaded CPU, but you can feel it is lacking in the IPC department, and that's a deal killer for me.

They need to increase that to get back into the game and not get laughed at.

From the same review

Really shows you AMD has some serious catching up to do if they want to price their products where they have.

Doesn't even begin to match a STOCK i7 hexa.

Why do you have to compromise when you can get a product that performs well everywhere?