Thursday, July 18th 2013

More Core i7-4960X "Ivy Bridge-E" Benchmarks Surface

More benchmarks of Intel's upcoming socket LGA2011 flagship client processor, the Core i7-4960X "Ivy Bridge-E," have surfaced on the web. Tom's Hardware obtained an engineering sample of the chip and wasted no time comparing it with contemporaries spanning three previous Intel generations and AMD's current generation. These include chips such as the i7-3970X, i7-4770K, i7-3770K, i7-2700K, FX-8350, and A10-5800K.

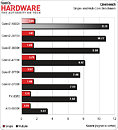

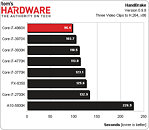

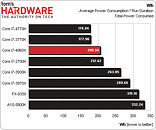

In synthetic tests, the i7-4960X runs neck and neck with the i7-3970X, offering at most a 5 percent performance gain. It is significantly faster than the i7-3930K, which has been Intel's roughly $500 offering for more than seven quarters. Its six cores and twelve SMT threads give it a clear edge over quad-core Intel parts in multi-threaded synthetic tests. In single-threaded tests, however, the $350 i7-4770K is highly competitive with it. The only major surprise is power draw. Despite a 130 W TDP rating, the same as the i7-3960X's, the i7-4960X "Ivy Bridge-E" offers significantly higher energy efficiency, which can be attributed to the 22 nm process it is built on, compared with its predecessor's 32 nm process. Find the complete preview at the source.

Source:

Tom's Hardware

72 Comments on More Core i7-4960X "Ivy Bridge-E" Benchmarks Surface

Please.

What I find interesting, though, is that CPU performance differences in gaming become far smaller as game resolutions and settings are increased. You are right that AMD and Intel are pretty close in gaming performance, but only close: Intel still holds the performance crown, and it will keep it until AMD does a big overhaul of its sockets and chipsets.

The Haswell mainstream chips are a nice addition to the mix, but the half-assed heatspreader and the heat issue would make me mad, not to mention that the extra cost of an entire system change makes it not worth it for me. :laugh:

The AMD-or-Intel debate is just like the chicken-or-the-egg debate... It doesn't matter... people eat 'em both.

Plus it ain't Haswell, why bother?

From Mercury Research's own analysis (presumably "96%" is rounded up from 95.7%):

As for the hoohah about gaming and CPUs... the bulk of games are, of course, graphics-constrained, so a user would need a pretty narrow focus to argue gaming as a fundamental feature of a $1K processor, IMO. Personally, for a gaming benchmark comparison, I'd be more inclined to check out games that actually keep a CPU gainfully employed, i.e. strong AI routines, comprehensive CPU physics etc., usually the province of RTS games, e.g. Skyrim.

It's little wonder that games such as Tomb Raider and MLL show little differentiation between CPUs or clock speeds.

On a side note, I'd really like both AMD and Intel to increase core counts on the desktop platform.

Feel free to stone the messenger, but until sites use a CPU-intensive game (preferably one that scales adequately with core count), you're stuck with what is benchmarked in comparative CPU game testing. And of course, the paucity of games that fit the bill says something in itself about the general requirement.

The reason AMD gets poor performance in Skyrim is just that, too: AMD FX chips are not known for their single-threaded performance, whereas Intel's are.

There's not much of a reason to, though. The software everyday consumers use has kind of hit a wall in terms of the number of cores it needs. Once software begins to demand more cores, we will begin to see more cores in our hardware.

AMD seems to have kinda forgotten that single-threaded performance is also important.

Why do people get so upset about SB-E/IB-E if all they need is a gaming-oriented i5 K chip? Intel makes the "E" chips for those of us who need them, not for those who don't. It's like a truck: it consumes several times more fuel than a passenger car, it's not good at cornering, and it's noisy and runs hot. But try convincing a cargo company that a family car would be better for them. I do a lot of video rendering: seconds saved are multiplied many times over, so at the end of the day I save tens of minutes, at the end of the month hours, and at the end of the year days of my valuable working time. My ROI on an "only" 10% faster chip or a next-generation GPU is 3-6 months. But for the office computer, for making invoices, I still have an E6800 with 4 GB of RAM (and will keep it for a while).

Second: Intel CANNOT give us Haswell-E until the server platform makes that move. Server owners don't care about computer games; they care about TCO and ROI, so changing platforms every year would instantly penalize Intel and reward the competition. That's it.

In something like Cinebench 11.5 or Any Video Converter using the x264 codec with 8 threads, it hits 62-74C. It will be better in winter, though, and I'm only on an H90. At the moment it's 40C outside and 29C inside, which helps too xD

That H90 you have is one hell of a cooler at the claimed 29C inside temperature.

:shadedshu

Try loading it up under OCCT or better yet Prime95!

I betcha you stop the program once you see the temp shoot to the moon ;)

I ran IBT, LinX and Prime95 and yeah, it was 80-90C, so? I will never, ever see that in any app or game, so I'm not bothered with those tools. Even Intel said it's useless and not a real stability indicator. And it's true: I passed all the torture tests and yet failed in BF3 lol :D

I never said it's optimal, and that's the worst-case scenario in summer. When I get fans with higher static pressure (2.7 mm H2O) it will change a lot (at the moment 1.5 mm H2O) :)

About soldering: someone leaked an IB-E delidding, and it showed proper solder under the heatspreader.

I use that as a stability test, and I've never had my PC hang after passing it.

Beware: it shoots your temps to the moon (unlike non-AVX LinX); it makes my 3930K go up to 80C on a gigantic custom water loop.

m.hardocp.com/article/2013/08/30/intel_ivy_bridgee_core_i74960x_ipc_ocing_review/6#.UiHP7NJkNyx