Monday, January 5th 2009

More GT212 Information Forthcoming

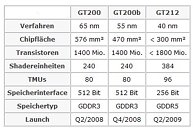

NVIDIA's G200b, its current flagship GPU, will be succeeded later this year by the GT212, and as Hardware-Infos has discovered, NVIDIA seems to have given the GT212 some interesting specifications. To begin with, the GPU holds more than 1.8 billion transistors and is built on TSMC's 40nm manufacturing node. The shader domain gets a boost to 384 shader units (a 60% increase over G200(b)). The GPU also has 96 texture mapping units and a 256-bit wide GDDR5 memory bus with a clock speed of 1250 MHz (5000 MT/s).
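
As a rough sanity check on those figures, here is a minimal Python sketch of the arithmetic behind them. It rests on two assumptions that the article does not state: that the G200(b) has 240 shader units (the count on the GTX 280/285), and that GDDR5 moves four data transfers per pin per command-clock cycle. The function names are made up for illustration, and the result is a theoretical peak, not a measured number.

```python
# Back-of-the-envelope check of the rumored GT212 figures.
# Assumptions (not from the article): G200(b) has 240 shader units,
# and GDDR5 performs 4 transfers per command-clock cycle.

GDDR5_TRANSFERS_PER_CLOCK = 4   # assumption, see above
G200_SHADER_UNITS = 240         # assumption, see above


def effective_rate_mt_s(clock_mhz: float) -> float:
    """Effective data rate in MT/s for a given GDDR5 command clock in MHz."""
    return clock_mhz * GDDR5_TRANSFERS_PER_CLOCK


def peak_bandwidth_gb_s(bus_width_bits: int, rate_mt_s: float) -> float:
    """Theoretical peak bandwidth in GB/s (decimal gigabytes)."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * rate_mt_s / 1000  # MB/s -> GB/s


def percent_increase(new: int, old: int) -> float:
    """Relative increase of new over old, in percent."""
    return (new - old) * 100 / old


rate = effective_rate_mt_s(1250)              # 5000 MT/s, as quoted
bandwidth = peak_bandwidth_gb_s(256, rate)    # 256-bit bus
shader_gain = percent_increase(384, G200_SHADER_UNITS)

print(f"{rate:.0f} MT/s, {bandwidth:.0f} GB/s peak, +{shader_gain:.0f}% shaders")
```

Under those assumptions the quoted 1250 MHz does work out to 5000 MT/s, the 256-bit bus would peak at about 160 GB/s, and 384 shader units is a 60% increase, which matches the figure in the article.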

The transition to GDDR5 seemed inevitable: NVIDIA has avoided GDDR4 completely, and there is a genuine incentive in cutting down the number of memory chips, which the more efficient memory bus makes possible. With the die size expected to be around 300 sq. mm, these GPUs should be cheaper to manufacture. The GT212 is slated for Q2 2009.

Source:

Hardware-Infos

27 Comments on More GT212 Information Forthcoming

1TB of XDR 24,000! XD

Sorry. Side-tracking.

My guess is that they will release GT212, and in the first few months ATi will have nothing competitive, so nVidia will charge an arm and a leg for it to make up as much money as possible. Then ATi will release something that is competitive but priced lower to try and take market share from nVidia, and nVidia will lower the prices to compete...and the cycle goes on...

I always try to wait as long as I can and then jump a big step to the next one. ;)

I tend to do one major upgrade every few years or so, then do a few small ones very quickly after the major upgrade, then I am content. In the past year I went from Dual-7900GTs to an 8800GTS 512MB to a 9800GTX to Dual-9800GTXs and now this GTX260. I plan to use the step-up on the GTX260 to go to a GTX285 and then I think I will stick with that for a while.

The upgrade from the 7900GTs to the 8800GTS only cost me about $100 after selling the 7900GTs, and the upgrade to the 9800GTX cost me nothing, as it was a step-up from my 8800GTS. The second 9800GTX cost me $150. And after selling the 9800GTXs, the upgrade to the GTX260 cost about $15.