Today's top video games use complex programming and rendering techniques that can take months to create and tune in order to get the image quality and silky-smooth frame rates that gamers demand. Thousands of developers worldwide, including members of Blizzard Entertainment, Crytek, Epic Games, and Rockstar Games, rely on NVIDIA development tools to create console and PC video games. Today, NVIDIA has expanded its award-winning development suite with three new tools that vastly speed up this development process, keeping projects on track and costs under control.

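The new tools, which are available now, include:

- PerfHUD 6: a graphics debugging and performance analysis tool for DirectX 9 and 10 applications.

- FX Composer 2.5: an integrated development environment for fast creation of real-time visual effects.

- Shader Debugger: helps debug and optimize shaders written with HLSL, CgFX, and COLLADA FX Cg in DirectX and OpenGL.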

"These new tools reinforce our deep and longstanding commitment to help game developers fulfill their vision," said Tony Tamasi, vice president of technical marketing for NVIDIA. "Creating a state-of-the-art video game is an incredibly challenging task technologically, which is why we invest heavily in creating powerful, easy-to-use video game optimization and debugging tools for creating console and PC games."

More Details on the New Tools

PerfHUD 6 is a new and improved version of NVIDIA's graphics debugging and performance analysis tool for DirectX 9 and 10 applications. PerfHUD is widely used by the world's leading game developers to debug and optimize their games. This new version includes comprehensive support for optimizing games for multiple GPUs using NVIDIA SLI technology, powerful new texture visualization and override capabilities, an API call list, dependency views, and much more. In a recent survey, more than 300 PerfHUD 5 users reported an average speedup of 37% after using PerfHUD to tune their applications.

"Spore relies on a host of graphical systems that support a complex and evolving universe. NVIDIA PerfHUD provides a unique and essential tool for in-game performance analysis," said Alec Miller, Graphics Engineer at Maxis. "The ability to overlay live GPU timings and state helps us rapidly diagnose, fix, and then verify optimizations. As a result, we can simulate rich worlds alongside interactive gameplay. I highly recommend PerfHUD because it is so simple to integrate and to use."

FX Composer 2.5 is an integrated development environment for fast creation of real-time visual effects. It can be used to create shaders for HLSL, CgFX, and COLLADA FX Cg in DirectX and OpenGL. This new release features an improved user interface, DirectX 10 support, ShaderPerf with GeForce 8 and 9 Series support, visual models and styles, and particle systems.

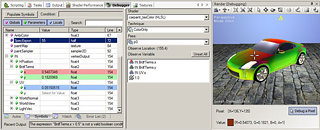

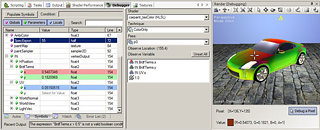

As longer, more complex shaders become pervasive, debugging shaders has become more of a challenge for developers. To assist developers with this task, NVIDIA introduces the brand-new NVIDIA Shader Debugger, a plug-in for FX Composer 2.5 that enables developers to inspect their code while seeing shader variables applied in real time on their geometry. The Shader Debugger can be used to debug HLSL, CgFX, and COLLADA FX Cg shaders in both DirectX and OpenGL.

The NVIDIA Shader Debugger is the first product in the NVIDIA Professional Developer Tools lineup. These are new tools directed at professional developers who need more industrial-strength capabilities and support. For example, the NVIDIA Shader Debugger will run on leading GPUs from all vendors.

In addition to the free versions available for non-commercial use, some of the new tools are subject to a license fee, but are priced to be accessible to developers. Existing free tools (such as FX Composer, PerfHUD, Texture Tools, and SDKs) will not be affected; they will continue to be available to all developers at no cost. Shader Debugger pricing information is available at www.shaderdebugger.com.

NVIDIA encourages developers to visit its developer web site here and its developer tools forums here.

I mean, both companies have their unique strengths and weaknesses which set them apart, so why can't they just compete fairly with mediocre game engines that don't give a $h** for any side, so that we all know who is the best on the block? I know for a fact that ATI will bust this whole graphics propaganda bull$h**, especially given the position they are in. So by the looks of it, because Microsoft french kisses Nvidia, they will replace the DirectX that ATI is really getting good at and then start with DirectX 11 announcements. Nvidia, you are a snitch SOB who thinks about living a lie because you can't handle the truth that you just bribe your way out of situations.