HBM should mean a smaller die area is required to connect the memory, which translates to lower TDP.

I think you missed the point of HBM. The lower power comes about due to the lower speed of the I/O (which is more than offset by the increased width). GDDR5 presently operates at 5-7Gbps/pin. HBM as shipped now by Hynix is operating at 1Gbps/pin.
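To put rough numbers on the "slower but wider" point, here is a quick back-of-the-envelope sketch. The per-pin rates are the ones quoted above; the bus widths (a 512-bit GDDR5 bus and four 1024-bit HBM stacks) are my own illustrative assumptions, not something confirmed for Fiji, and `peak_bandwidth_gb_s` is just a made-up helper name.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, gbps_per_pin: float) -> float:
    """Peak bandwidth in GB/s: data pins * Gbps per pin / 8 bits per byte."""
    return bus_width_bits * gbps_per_pin / 8

# Assumed, illustrative configurations (the widths are not from the posts above):
gddr5 = peak_bandwidth_gb_s(512, 5.0)     # 512-bit GDDR5 bus at 5 Gbps/pin
hbm = peak_bandwidth_gb_s(4 * 1024, 1.0)  # four 1024-bit HBM stacks at 1 Gbps/pin

print(f"GDDR5: {gddr5:.0f} GB/s")
print(f"HBM:   {hbm:.0f} GB/s")
```

Under those assumptions the wide, slow interface still comes out ahead on peak bandwidth while each pin toggles at a fraction of the GDDR5 rate, which is where the I/O power saving would come from.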

The TDP growth is not coming from the HBM, it is coming from elsewhere.

Maybe the 45% increase in core count over Hawaii?

Correct me if I'm wrong: the flagship graphics card, codenamed "Fiji", would vie with the GM200 as the 390/390X, then "Bermuda" is said to become the 380/380X and vie with the 970/980, correct?

There seem to be two schools of thought on that. Original roadmaps point to Bermuda being the second tier GPU, but some sources are now saying that Bermuda is some future top-tier GPU on a smaller process. The latter begs the question: If this is so, what will be the second tier when Fiji arrives? Iceland is seen as entry level, and Tonga/Maui will barely be mainstream. There is a gap unless AMD are content to sell Hawaii in the $200 market.

So where does btarunr come up with, "Despite this, "Fiji" could feature TDP hovering the 300W mark..."? It appears to be pure speculation/assumption, not grounded in any evidence?

I would have thought the answer was pretty obvious. btarunr's article is based on a Tech Report article (which is referenced as source). The Tech Report article is based upon a 3DC article (which they linked to) which does reference the 300W number along with other salient pieces of information.

I also would like btarunr to expound on "despite slow progress from foundry partner TSMC to introduce newer silicon fabs". With 16nm FinFET, wouldn't it be strange for either side to really need to push the 300W envelope?

TSMC aren't anywhere close to volume production of 16nmFF required for large GPUs (i.e. high wafer count per order).

TSMC are on record themselves as saying that 16nmFF / 16nmFF+ will account for 1% of manufacturing by Q3 2015.

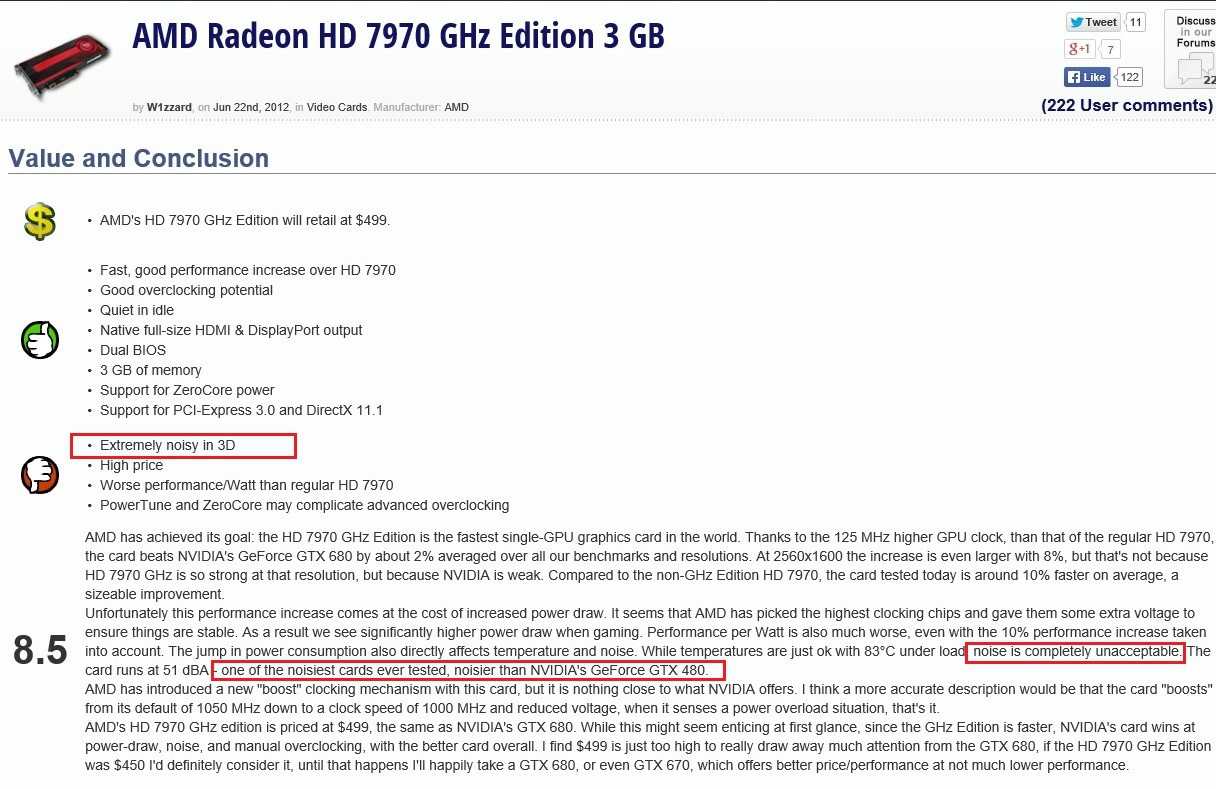

I believed AMD learned that approaching 300W is just too much for the thermal effectiveness of most reference rear exhaust coolers (Hawaii).

IDK about that. The HD 7990 was pilloried by review sites, the general public, and most importantly, OEMs for power/noise/heat issues. It didn't stop AMD from going one better with Hawaii/Vesuvius. If AMD cared anything for heat/noise, why saddle the reference 290/290X with a pig of a reference blower design that was destined to follow the HD 7970 as the biggest example of GPU marketing suicide in recent times?

Why would you release graphics cards with little or no inherent downsides from a performance perspective, with cheap-ass blowers that previously invited ridicule? Nvidia proved that the blower design doesn't have to be some Wal-Mart looking, Pratt & Whitney sounding abomination as far back as the GTX 690, yet AMD hamstrung their own otherwise excellent product with a cooler guaranteed to cause a negative impression.

Despite many rumors of that mocked up housing, I don’t see AMD releasing a single-card "reference water" type cooler for their initial "Fiji" release; reference air cooling will remain. I don't discount that they could provide a "Gaming Special" with a "reference water" cooler to gauge the market reaction as things progress, but not primarily.

That reference design AIO contract Asetek recently signed was for $2-4m. That's a lot of AIOs for a run of "gaming special" boards, don't you think?