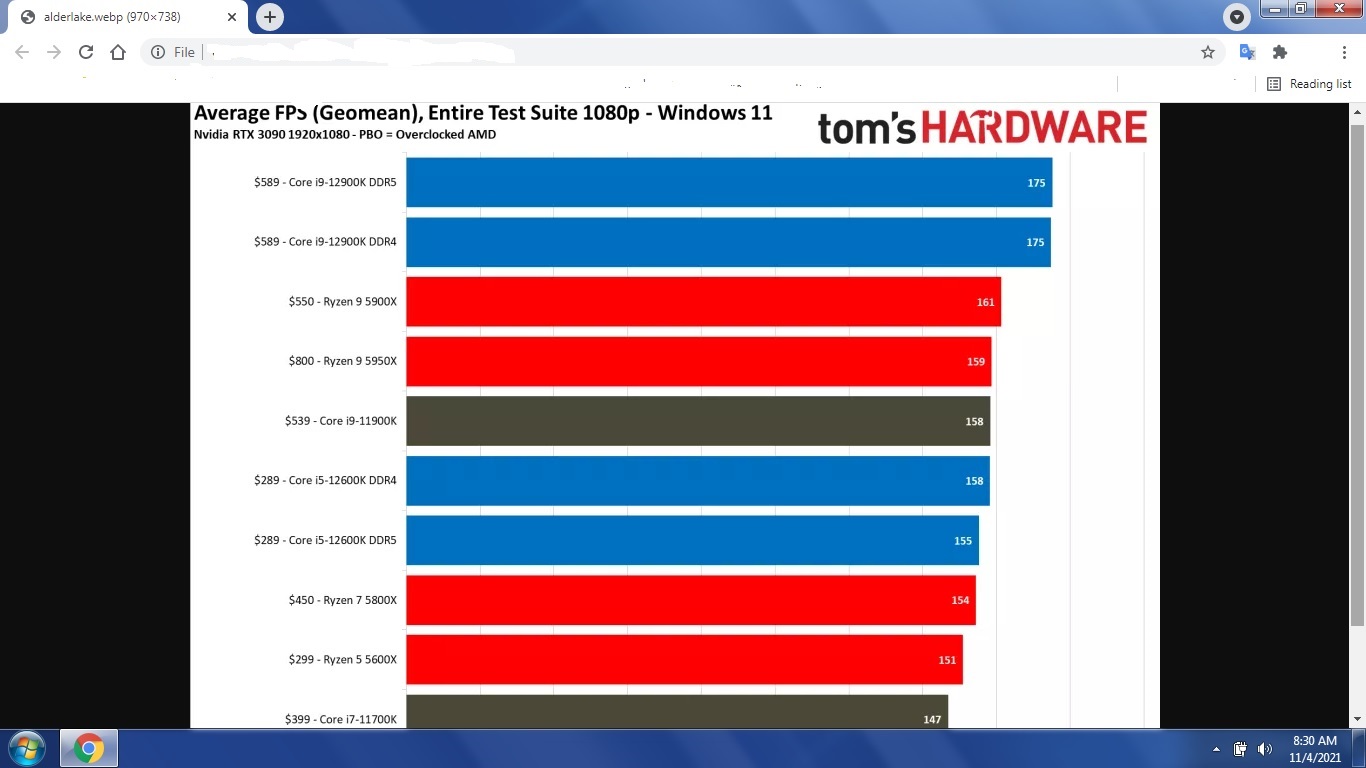

I know for a fact that the 10900k is the best CPU for Warzone (most CPU demanding game out there), because it can be paired with really low latency RAM (like 4200 C16, for example) and has 10 cores on a monolithic die. It averages 220fps with 190ish lows, while the 5950x averages 200 with 170ish lows. The 5900x, 5800x, 5600x, 11900k, 11700k etc. are crap in comparison.

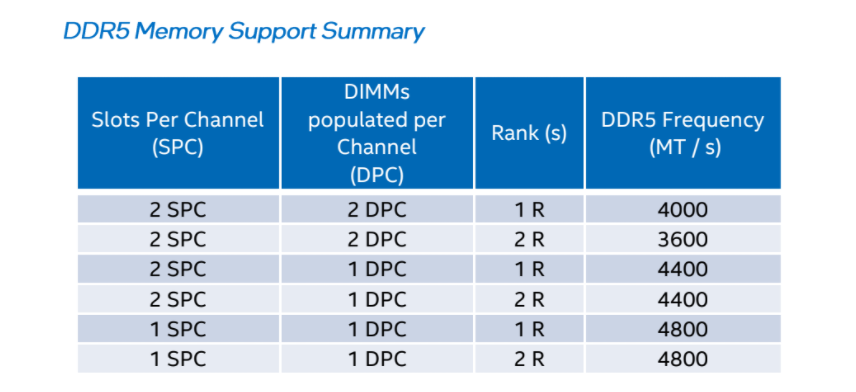

I have no doubt that Warzone will have worse fps when using DDR5. Latency is the most important thing for CPU bound games. My question was... who is going to buy a mainstream platform to take "advantage" of higher speed DDR5 RAM in certain applications that clearly would benefit more from other platforms? That's what I don't understand. These are gaming CPUs above anything else. Don't tell me you are going to buy a mainstream platform for 24/7 rendering, it makes no sense.

So this is about to happen: people spending loads on fancy new DDR5 just to get worse performance, because the timings are not quite there yet. And if you think "long term", then waiting for Raptor Lake/Zen 4 would be a way better option, as by that time DDR5 will for sure be faster.

People will have a lot of surprises in 2 days when the reviews drop... quick tip, look for the lower end boards with DDR4... or just skip this gen. Because, oh boy, that 12900k with dual rank DDR4 at 3866 C14 in Gear 1 will completely obliterate any DDR5 config...
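For anyone who wants to put numbers on the timings argument, here is a rough sketch of the usual CAS latency arithmetic: latency in ns = CAS cycles × 2000 / data rate in MT/s. The two DDR4 configs are the ones mentioned above. The DDR5-4800 C40 entry is only an assumed example kit for comparison, not something measured or quoted in this thread, and CAS latency is just one part of real-world memory latency, so treat this as back-of-the-envelope only.

```python
def cas_latency_ns(data_rate_mts: float, cas_cycles: int) -> float:
    """Approximate CAS latency in nanoseconds.

    DDR transfers twice per clock, so the memory clock in MHz is
    data_rate / 2 and one cycle lasts 2000 / data_rate nanoseconds.
    """
    return cas_cycles * 2000.0 / data_rate_mts


# (data rate in MT/s, CAS latency in cycles)
kits = {
    "DDR4-4200 C16": (4200, 16),
    "DDR4-3866 C14": (3866, 14),
    # Assumption: hypothetical DDR5 kit, picked only for illustration.
    "DDR5-4800 C40 (assumed example)": (4800, 40),
}

for name, (rate, cl) in kits.items():
    print(f"{name}: {cas_latency_ns(rate, cl):.2f} ns")
```

This only covers the CAS part of the picture. Gear mode, rank count and the memory controller all add to the latency you would actually measure, so the real gap between configs can look different.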

boring and really uninteresting. If that's where Intel chooses to dominate, I say great. Take all the trash games and we'll keep the rest.
