Thursday, October 1st 2009

NVIDIA Unveils Next Generation CUDA GPU Architecture – Codenamed "Fermi"

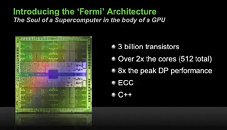

NVIDIA Corp. today introduced its next generation CUDA GPU architecture, codenamed "Fermi". An entirely new ground-up design, the "Fermi" architecture is the foundation for the world's first computational graphics processing units (GPUs), delivering breakthroughs in both graphics and GPU computing.

"NVIDIA and the Fermi team have taken a giant step towards making GPUs attractive for a broader class of programs," said Dave Patterson, director of the Parallel Computing Research Laboratory, U.C. Berkeley, and co-author of Computer Architecture: A Quantitative Approach. "I believe history will record Fermi as a significant milestone."

Presented at the company's inaugural GPU Technology Conference in San Jose, California, "Fermi" delivers a feature set that accelerates performance on a wider array of computational applications than ever before. Joining NVIDIA's press conference was Oak Ridge National Laboratory, which announced plans for a new supercomputer that will use NVIDIA GPUs based on the "Fermi" architecture. "Fermi" also garnered the support of leading organizations including Bloomberg, Cray, Dell, HP, IBM and Microsoft.

"It is completely clear that GPUs are now general purpose parallel computing processors with amazing graphics, and not just graphics chips anymore," said Jen-Hsun Huang, co-founder and CEO of NVIDIA. "The Fermi architecture, the integrated tools, libraries and engines are the direct results of the insights we have gained from working with thousands of CUDA developers around the world. We will look back in the coming years and see that Fermi started the new GPU industry."

As the foundation for NVIDIA's family of next generation GPUs, namely GeForce, Quadro and Tesla, "Fermi" features a host of new technologies that are "must-have" features for the computing space, including:

- C++, complementing existing support for C, Fortran, Java, Python, OpenCL and DirectCompute.

- ECC, a critical requirement for datacenters and supercomputing centers deploying GPUs on a large scale

- 512 CUDA Cores featuring the new IEEE 754-2008 floating-point standard, surpassing even the most advanced CPUs

- 8x the peak double precision arithmetic performance over NVIDIA's last generation GPU. Double precision is critical for high-performance computing (HPC) applications such as linear algebra, numerical simulation, and quantum chemistry

- NVIDIA Parallel DataCache - the world's first true cache hierarchy in a GPU that speeds up algorithms such as physics solvers, raytracing, and sparse matrix multiplication where data addresses are not known beforehand

- NVIDIA GigaThread Engine with support for concurrent kernel execution, where different kernels of the same application context can execute on the GPU at the same time (e.g., PhysX fluid and rigid body solvers)

- Nexus - the world's first fully integrated heterogeneous computing application development environment within Microsoft Visual Studio
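The phrase "data addresses are not known beforehand" in the Parallel DataCache item can be made concrete with sparse matrix-vector multiplication. The sketch below is plain CPU-side C++ rather than CUDA, and it is not taken from NVIDIA's materials; the `CsrMatrix` type and the `spmv` function are hypothetical names, and the compressed sparse row (CSR) layout is assumed purely for illustration. It shows that every read of `x` goes through an index loaded from memory, so the access pattern depends on the matrix contents, which is the kind of irregular access a cache hierarchy is meant to help with.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical CSR (compressed sparse row) container, for illustration only.
struct CsrMatrix {
    std::size_t rows = 0;
    std::size_t cols = 0;
    std::vector<std::size_t> row_start;  // rows + 1 offsets into col_index/values
    std::vector<std::size_t> col_index;  // column of each stored entry
    std::vector<double> values;          // stored (nonzero) entries, row by row
};

// Computes y = A * x for a CSR matrix A.
std::vector<double> spmv(const CsrMatrix& a, const std::vector<double>& x) {
    // Basic shape checks on the assumed layout.
    assert(a.row_start.size() == a.rows + 1);
    assert(a.col_index.size() == a.values.size());
    assert(x.size() == a.cols);

    std::vector<double> y(a.rows, 0.0);
    for (std::size_t r = 0; r < a.rows; ++r) {
        double sum = 0.0;
        for (std::size_t k = a.row_start[r]; k < a.row_start[r + 1]; ++k) {
            // Which element of x is read depends on col_index[k], a value
            // that is only known once it has been loaded at run time.
            sum += a.values[k] * x[a.col_index[k]];
        }
        y[r] = sum;
    }
    return y;
}
```

For the 2x3 matrix [[1, 0, 2], [0, 3, 0]], stored as `row_start = {0, 2, 3}`, `col_index = {0, 2, 1}` and `values = {1, 2, 3}`, calling `spmv` with `x = {1, 2, 3}` returns `{7, 6}`.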

49 Comments on NVIDIA Unveils Next Generation CUDA GPU Architecture – Codenamed "Fermi"

;)

Edit: Ah, I think it was more or less directed at shevanel's post.

OpenGL is to Direct3D as OpenCL is to DirectCompute. So yeah, Windows-only software will be inclined to use the DirectX variety while cross-platform software will use the Open variety. There's not much room for CUDA, I'm afraid.

Now I'm always wary of proprietary stuff, but sometimes a proprietary standard blows away the open-source one in terms of actual performance and functionality. I definitely think that this is the case here.

I think he meant Linux types will use open source, whilst Windows types will use DX11 rather than CUDA.

nVidia is going to face (and probably already has) massive technical issues on this one, only to be compounded by ridiculous TDP and a price they can't possibly turn profitable. Maybe if Larrabee were out we'd be looking at a different competitive landscape, but I think for now gamers are more interested in gaming than spending an extra $100-200 to fold proteins.

(That said, this may end up benefiting their Quadro line significantly. Those sales are way too low-volume to save them if this thing fails in the consumer market though...)

I see this as a direction shift from Nvidia; they're starting to look at different areas for revenue (HPC etc). They'll still be big in the discrete GPU market, but it won't be their sole focus. They may lose market share to ATI (and eventually Intel), but if they offset that with increased profit elsewhere then it won't matter. Indeed they may be more stable as a company having a more diverse business model.

For Nvidia to not have a DX11 card ready in 2009 is a major fail. This card could be 4-5 months away and I doubt even die-hard Nvidia lovers will be prepared to wait until next year while there are 5850s and 5870s around.

ATI started with high precision stream cores back on the X1K and now it has branched to DX11, and CUDA as the competing platform. This is all going to come down to consumers, this will be another "format war".

I am liking the offerings of the green team this round, I love the native code drop in, the expected performance at common tasks and folding, but I will probably hate the price. ATI might have a real problem here if they don't get their ass in gear with some software to run on their hardware, and show it to be as good as or better than NV. I for one am tired of paying either company for a card, hearing all the options and only having a few actually made and working. I bought a high end high def camcorder, and a card I was understanding could/was going to handle the format and do it quickly. I still use the CPU based software to manipulate my movies. FAIL......

DX11 offers unified sound support, unified input support, unified networking support, and more that CUDA does not. But that's not what you were asking. CUDA and DirectCompute are virtually the same with one caveat: Microsoft will flex their industry muscles to get developers to use it and developers will want to use it because the same code will work on NVIDIA, AMD, and Intel GPUs.

DirectCompute has everything to do with parallel computing. That is the reason why it was authored.