Thursday, October 1st 2009

NVIDIA 'Fermi', Tesla Board Pictured in Greater Detail, Non-Functional Dummy Unveiled

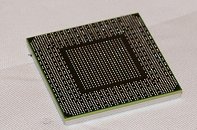

Unveiled at the keynote of the GPU Technology Conference 2009 by none other than NVIDIA CEO Jen-Hsun Huang, NVIDIA's Fermi architecture looks promising, at least in the field of GPGPU, which he discussed extensively in his address. The first reference board based on NVIDIA's newest 'GT300' GPU is a Tesla HPC processor card, which quickly became the face of the Fermi architecture. Singapore HardwareZone and PCPop caught some of the first close-up pictures of the Tesla accelerator and of the GPU's BGA package itself. Decked in a dash of chrome, the Tesla HPC processor card isn't particularly long; instead, a great deal of compacting by its designers is evident. It draws power from one 8-pin and one 6-pin PCI-E power connector, which aren't located next to each other. The cooler's blower also draws air through openings in the PCB, and a backplate further cools the GPU (and possibly other components) from behind. From the looks of it, the GPU package itself isn't larger than that of the GT200 or its predecessor, the G80.

Looks like NVIDIA is ready with a working prototype against all odds, doesn't it? Not quite. On close inspection of the PCB, it doesn't look like a working sample. Components that should have pins protruding through the board and soldered on the other side don't have them, and the PCB seems to end abruptly. Perhaps it's only a dummy made for display at GTC, to give an indication of how the card will end up looking. In other words, it doesn't look like NVIDIA has a working prototype or sample of the card it intended to display the other day.

Sources:

Singapore HardwareZone, PCPop

94 Comments on NVIDIA 'Fermi', Tesla Board Pictured in Greater Detail, Non-Functional Dummy Unveiled

Nvidia is just saying "look this way geeks, we have something tasty for you if you will not spend your money on ATi cards, it can be yours REALLY soon, so just hold on! just a little more!"

I don't blame them, it's a good tactic from a business point of view

Can't brag about my post count though :o :respect:

Is team green really going to give ATI the entire holiday season with no direct competition? If they are, what a gift.

Yes, it was a great move by them, but the price has to be aggressive. Yeah, a massive cruncher it will be, but as time goes on more people want more "bang for the buck", and they have to remember that some or most people won't use all the CUDA capabilities and will just want raw graphics power. Some speculation says that with a bigger processor, a bigger memory interface and a multi-layer PCB, the price is going to be around $400-500.

(secretly proclaiming victory).

Seriously cut off the PCB? LOL

Did you know the new Micro GPU, the GT500 due for 2015, is going to look like: img.techpowerup.org/091002/lol363.jpg

What we need is a G92 successor and a new Intel chipset.

Did you know the hardware that's in a Quadro or FireGL is exactly the same as in a regular card, except for the GUID of the card itself? These cards are basically sold for around $1000 to $2000 on average because the driver support is pretty much better than for a regular 3D card. Maybe nvidia is depending on this type of modelling market instead of gamers.

I guess that's where the big money is, and there will be a shrunk version of this card that has a gamers stamp on it. On the other hand, Nvidia was pretty lousy back in the DX 10.1 age, rebranding the 8x00 series into 9x00 or GT200 etc. I think Ati was the more innovative team this time and nvidia is going to pay for this.

Until Q1, Ati pretty much holds the market, especially with Christmas coming :)

It's by Charlie.

To me this certainly makes nvidia a company I'm less inclined to deal with. And these cards are about 5+ months out anyway, so this is just a poor attempt at ruining DAAMIT's party. :shadedshu

Why release a dummy model that looks like this, when we all know this is nothing like the final design?

Wow, the saying's really true, huh? The bigger they are, the harder they fall (i.e., the bigger the company and GPU size in this case)! :shadedshu