Tuesday, October 1st 2013

Radeon R9 290X Clock Speeds Surface, Benchmarked

Radeon R9 290X is looking increasingly good on paper. Most of its rumored specifications and SEP pricing were reported late last week, but the clock speeds eluded us. A source that goes by the name Grant Kim, with access to a Radeon R9 290X sample, disclosed its clock speeds and ran a few tests for us. To begin with, the GPU core is clocked at 1050 MHz. There is no dynamic-overclocking feature, but the chip can lower its clocks, taking load and temperatures into account. The memory is clocked at 1125 MHz (4.50 GHz GDDR5-effective). At that speed, the chip churns out 288 GB/s of memory bandwidth over its 512-bit wide memory interface. Those clock speeds were reported to us by GPU-Z, so we give them the benefit of the doubt, even though 288 GB/s falls short of AMD's ">300 GB/s memory bandwidth" bullet-point in its presentation.
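For readers who want to check the bandwidth figure, here is a minimal sketch of the arithmetic. The function names are ours, purely for illustration, and it assumes the usual GDDR5 convention of four data transfers per memory clock (1125 MHz becomes 4.50 GHz effective).

```python
def gddr5_bandwidth_gbs(memory_clock_mhz, bus_width_bits, transfers_per_clock=4):
    """Peak memory bandwidth in GB/s (1 GB = 1000 MB).

    transfers_per_clock=4 is an assumption based on how GDDR5
    "effective" speeds are normally quoted.
    """
    effective_mts = memory_clock_mhz * transfers_per_clock  # mega-transfers per second
    bytes_per_transfer = bus_width_bits / 8                 # bus width in bytes
    return effective_mts * bytes_per_transfer / 1000        # MB/s -> GB/s


def clock_needed_mhz(target_gbs, bus_width_bits, transfers_per_clock=4):
    """Memory clock (MHz) required to reach a target bandwidth on a given bus."""
    bytes_per_transfer = bus_width_bits / 8
    return target_gbs * 1000 / (bytes_per_transfer * transfers_per_clock)


# Reported sample: 1125 MHz memory on a 512-bit bus
print(gddr5_bandwidth_gbs(1125, 512))  # 288.0

# Memory clock that would be needed to hit 300 GB/s on the same bus
print(clock_needed_mhz(300, 512))      # 1171.875
```

By this math, a 512-bit card would need its memory clocked above roughly 1172 MHz (about 4.69 GHz effective) to back up a ">300 GB/s" claim.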

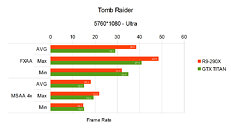

The tests run on the card include frame-rates and frame-latency for Aliens vs. Predator, Battlefield 3, Crysis 3, GRID 2, Tomb Raider (2013), RAGE, and TESV: Skyrim, in no-antialiasing, FXAA, and MSAA modes, at 5760 x 1080 pixels resolution. An NVIDIA GeForce GTX TITAN was pitted against it, running the latest WHQL driver. We must remind you that at that resolution, AMD and NVIDIA GPUs tend to behave a little differently due to the way they handle multi-display, and so it may be an apples-to-coconuts comparison. In Tomb Raider (2013), the R9 290X romps ahead of the GTX TITAN, with higher average, maximum, and minimum frame rates in most tests.

RAGE

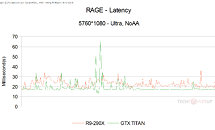

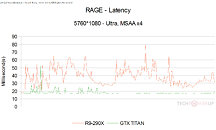

The OpenGL-based RAGE is a different beast. With AA turned off, the R9 290X puts out lower frame-rates overall, and higher frame latency (lower is better). Without AA, frame-latencies of both chips remain under 30 ms, with the GTX TITAN looking lower and more consistent. It gets even more inconsistent with AA cranked up to 4x MSAA, where the R9 290X is all over the place with frame latency.

TESV: Skyrim

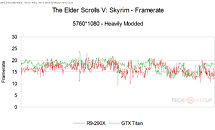

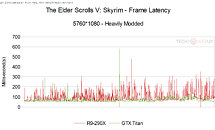

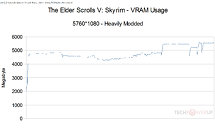

The tester somehow got the game to work at 5760 x 1080. With no AA, both chips put out similar frame-rates, with the GTX TITAN having a higher mean, and the R9 290X spiking more often. In the frame-latency graph, the R9 290X has a bigger skyline than the GTX TITAN, which is not something to be proud of. As an added bonus, the VRAM usage of the game was plotted throughout the test run.

GRID 2

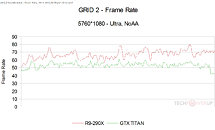

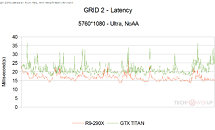

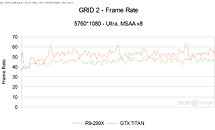

GRID 2 is a surprise package for the R9 290X. The chip puts out significantly and consistently higher frame-rates than the GTX TITAN at no-AA, and offers lower frame-latencies. Even with MSAA cranked all the way up to 8x, the R9 290X holds out pretty well on the frame-rate front, but not frame-latency.

Crysis 3

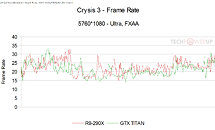

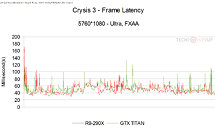

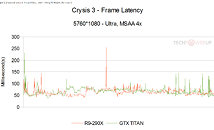

This CryEngine 3-based game offers MSAA and FXAA anti-aliasing methods, and so it wasn't tested without either enabled. With 4x MSAA, both chips offer similar levels of frame-rates and frame-latencies. With FXAA enabled, the R9 290X offers higher frame-rates on average, and lower latencies.

Battlefield 3

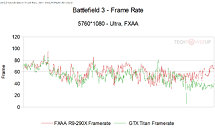

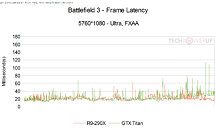

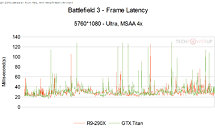

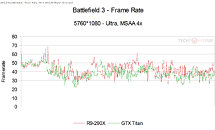

That leaves us with Battlefield 3, which, like Crysis 3, supports MSAA and FXAA. At 4x MSAA, the R9 290X offers higher frame-rates on average, and lower frame-latencies. With FXAA, it gets better for AMD's chip on both fronts.

Overall, at 1050 MHz (core) and 4.50 GHz (memory), it's advantage-AMD, looking at these graphs. Then again, we must remind you that this is 5760 x 1080 we're talking about. Many thanks to Grant Kim.

100 Comments on Radeon R9 290X Clock Speeds Surface, Benchmarked

And we are not talking about exponential increases, but percentage of raw output standardized.

So on the 7970 tests the increase was directly tied to core and memory speed: a 10% increase on both resulted in a 10% increase in performance. Same with Titan.

I am aware of the boost speeds Titan uses, and so it does make it an apples-to-coconuts comparison. If this test card is stuck at 800 MHz and is still hand in hand with a Titan that is boosting to 1 GHz speeds, what happens when the memory is ramped up and the core is capable of reaching the same clocks as the 7970?

290X @ 1050 MHz vs Titan boosted to 1000 MHz

Let's wait for real benchmarks instead of trying to continue to speculate with values that really cannot be used to achieve any greater accuracy than guessing in the first place. :)

W1zz: I got some spare coke and an extra hooker, you interested? :p

:peace:

I heard they are going to fix that problem with some magic blue powder, that's right, add crushed Viagra to the coke for a better pecker picker upper.

This crap is going to bubble up... and there's less inference to draw from it because we don't know how old it is, or what the drivers were.

So keep the seat belt on; it's going to get bumpy while we're in this holding pattern.

Of course, this is a multi-display setup, and things might look different on a 1440p monitor.

All of this shouldn't matter at this point. We need to see how efficient Mantle is. Mantle delivering on the 9x promise seems quite likely, given that console developers have managed to squeeze many times more performance out of those console chips using low-level coding that interfaces semi-directly with the hardware.

Truly what matters at this point to me as a PC gamer is getting next gen PC games; a revolution in PC games. We need games with better mechanics, graphics and physics.

All these old games just don't cut it for PC gamers like myself. The crappy game mechanics and physics especially are no longer bearable.

TrueAudio is neat, don't get me wrong, but this was a GPU conference, and they spent more time talking about that than touting their new flagship. Maybe they learned from Bulldozer, but I doubt it.

As a side note, I have no doubt they will beat nVidia's offerings at price/performance.

www.overclockers.com/nvidia-gtx-titan-video-card-review

W1zzard only found slightly below 1000 MHz in his reference review, but it ran there a lot as it's a dark diamond.

www.techpowerup.com/reviews/NVIDIA/GeForce_GTX_Titan/34.html

Here is a paragraph from the article talking about what you are, though... Is adjusting the fan profile overclocking? In my head, overclocking is adjusting the clock speeds. He adjusted the fan profile in the second graph, which was then overclocked and hit 1100+ MHz. The first is stock. ;)

So, with temps in order and not using the overly 'quiet' stock fan settings, this card will boost 1006 MHz all day long. I guess we are splitting hairs? lOl!

min being 29 makes more sense 35 avg... would fall about same % behind amd...

I want to see how this will fold...

or at the very least...

FAHbench

proteneer.com/blog/?page_id=1671