Monday, June 18th 2018

Latest 4K 144 Hz Monitors use Blurry Chroma Subsampling

The first 4K 144 Hz monitors, the ASUS PG27UQ and Acer X27, recently became available. These $2,000 monitors no longer force gamers to choose between a high refresh rate and a high resolution, since they support 3840x2160 at refresh rates of up to 144 Hz. However, early adopters report a noticeable degradation in image quality when these monitors run at 144 Hz. Surprisingly, refresh rates of 120 Hz and below look perfectly sharp.

The underlying reason is the DisplayPort 1.4 interface, which provides about 26 Gbit/s of bandwidth, just enough for full 4K at 120 Hz. So monitor vendors had to get creative to achieve the magic 144 Hz they were shooting for. The solution comes from old television technology in the form of chroma subsampling (YCbCr), which, in the case of these monitors, transmits the grayscale portion of the image at full resolution (3840x2160) and the color information at half the horizontal resolution (1920x2160).
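The bandwidth argument can be checked with some rough arithmetic. The sketch below is a back-of-the-envelope estimate, not a timing-accurate calculation: it assumes 8 bits per sample, counts active pixels only (real links also carry blanking intervals, so actual requirements are somewhat higher), and uses the DisplayPort HBR3 figures of four lanes at 8.1 Gbit/s with 8b/10b coding. The function names are made up for illustration.

```python
# Back-of-the-envelope link budget for a 3840x2160 panel.
# Assumptions: 8 bits per sample, active pixels only (no blanking).
WIDTH, HEIGHT = 3840, 2160

# DisplayPort 1.4 (HBR3): 4 lanes x 8.1 Gbit/s raw, 8b/10b line coding,
# so only 8 of every 10 transmitted bits carry payload.
DP14_PAYLOAD_GBPS = 4 * 8.1 * 8 / 10

# Average bits per pixel for each pixel format at 8 bits per sample.
BITS_PER_PIXEL = {
    "RGB (4:4:4)": 24,  # three full-resolution samples per pixel
    "YCbCr 4:2:2": 16,  # full-resolution luma, chroma shared by 2 pixels
}


def required_gbps(refresh_hz, bits_per_pixel):
    """Payload bandwidth in Gbit/s needed for the active pixels."""
    return WIDTH * HEIGHT * refresh_hz * bits_per_pixel / 1e9


def fits(refresh_hz, bits_per_pixel):
    """True if the stream fits into the DisplayPort 1.4 payload rate."""
    return required_gbps(refresh_hz, bits_per_pixel) <= DP14_PAYLOAD_GBPS


for name, bpp in BITS_PER_PIXEL.items():
    for hz in (120, 144):
        verdict = "fits" if fits(hz, bpp) else "does not fit"
        print(f"{name} @ {hz} Hz: {required_gbps(hz, bpp):.2f} Gbit/s, {verdict}")
```

Under these assumptions full RGB fits at 120 Hz but not at 144 Hz, while 4:2:2 at 144 Hz leaves headroom, which matches the behavior described above.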

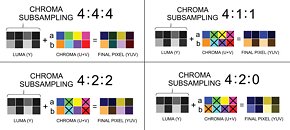

This approach is called 4:2:2 and works particularly well for the movie industry, where it is pretty much standard to ship post-processed content to cinemas and TV using 4:2:0 or 4:1:1 subsampling. For computer-generated content like text and the operating system interface, chroma subsampling has a serious effect on quality, especially text readability, which is why it is normally not used for such content at all. In games, chroma subsampling might be an acceptable approach, with negligible quality effects on the 3D game world and a slight loss of sharpness for the HUD.
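To make the effect concrete, here is a minimal sketch of a 4:2:2 round trip on a single row of pixels. It is an illustration under stated assumptions, not what these monitors actually do: it uses the BT.601 full-range (JPEG-style) YCbCr conversion and simply averages the chroma of each horizontal pixel pair, whereas the real color matrix and chroma filter used on the link are not documented in the source. `subsample_422` is a hypothetical helper name.

```python
def rgb_to_ycbcr(r, g, b):
    """BT.601 full-range RGB -> YCbCr (assumed matrix, for illustration)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return y, cb, cr


def ycbcr_to_rgb(y, cb, cr):
    """Inverse of rgb_to_ycbcr, rounded and clamped to 0..255."""
    r = y + 1.402 * (cr - 128)
    g = y - 0.344136 * (cb - 128) - 0.714136 * (cr - 128)
    b = y + 1.772 * (cb - 128)
    return tuple(max(0, min(255, round(v))) for v in (r, g, b))


def subsample_422(row):
    """Round-trip one row of RGB pixels through 4:2:2.

    Luma (Y) is kept for every pixel; chroma (Cb, Cr) is averaged over
    each horizontal pair, so two neighbors share one color sample.
    """
    ycc = [rgb_to_ycbcr(*pixel) for pixel in row]
    out = []
    for i in range(0, len(ycc), 2):
        pair = ycc[i:i + 2]
        cb = sum(p[1] for p in pair) / len(pair)
        cr = sum(p[2] for p in pair) / len(pair)
        out.extend(ycbcr_to_rgb(p[0], cb, cr) for p in pair)
    return out


red, blue = (255, 0, 0), (0, 0, 255)

flat = [(200, 50, 50)] * 4      # uniform surface
edge = [red, blue, blue, blue]  # colored edge inside the first pair

print("flat:", subsample_422(flat))
print("edge:", subsample_422(edge))
```

In this model a uniform surface survives unchanged because both pixels in a pair already share the same chroma, while a sharp color transition inside a pair gets smeared across both pixels. Pure grayscale edges are also preserved, since they carry no chroma difference to lose.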

The images below describe how chroma subsampling works and how it affects image quality. Note how high-contrast edges look noticeably worse while low-contrast surfaces look nearly identical.

Considering that gamers have been demanding 144 Hz on their 4K monitors for quite a while, and NVIDIA has been pushing for it too, I'm not surprised that monitor vendors simply went with this compromise. The bigger issue is that they have been completely quiet about it and make no mention of it in their specification documents.

Technical solutions that can avoid subsampling include HDMI 2.1 and DisplayPort's DSC data compression scheme. HDMI 2.1 was only specified late last year, so controller vendors are still figuring out how to implement it, which means it is far from being used in actual monitors. DisplayPort 1.4 introduced DSC (Display Stream Compression), which is designed for exactly this task. Even though it is not completely lossless, its quality is much better than that of chroma subsampling. The issue seems to be that few display controllers support DSC at this time.

Given that there is not much difference between 120 Hz and 144 Hz gaming, the best way to get the highest visual quality is to run these monitors at 120 Hz or below, where they send the full, unmodified RGB image over the wire.

Source: Reddit

50 Comments on Latest 4K 144 Hz Monitors use Blurry Chroma Subsampling

Nothing new there then, as consumers are continuing to be used as guinea pigs for new technology.

I know these monitors have a fan that turns on inside as well, because they run so hot. Seems so dangerous to me, lol... really going to take a hard pass on these new monitors, and any that require a fan... can't be good for longevity.

For bonus points, it seems like not even DP1.4 is enough to drive HDR content (10 bits per channel) at high refresh rates :(

why even bother with 144hz because in LCDs you wont be able to get the smoothness and fluidity of CRTs no matter what. i remember when i was doing 165hz at my old CRT. darn wide screens!! :(

fortunately (or unfortunately) mine is at 60-75hz (can tolerate 30hz but not for games) and i consider 1440p to be the ideal resolution up to 32" and just as for refresh rate, up to 1620p is nice (3K) but above is a gimmick (fortunately maybe because i can find a decent price around these specs :laugh: )

4k 120hz is a joke and 144hz even more ... maybe in one or two more GPU gen...

actually i rather hate the high res small size screen tendency .... 1440p/4K 24"? seriously? even at 1440/1620p 32" sitting at an adequate distance i can't see the pixels or any aliasing (never using FxAA or any AA since i swapped for that screen ) :laugh: even worse with smartphones ... i have a 1440p 5.2", previously i had a 720p 5.6", 1080p 5.5" and 1200p 8" (luckily the 1440p 5.6 was at a price lower than the other 3 ... otherwise i would never take it)

I guess it looks hyper-real when it refreshes that fast, with very little motion blur, while in everyday life, we see motion blur all the time. Anything that eliminates it, for me, is great as I can see so much more. Note that everyone else can see so much more too, which is why it feels odd to them.

CRT: no image to speak of, just a dot moving at huge speeds to trick your eyes into seeing one.

LCD: image is always there, but you can't refresh it as fast.

When CRTs were everywhere, virtually everybody in their late thirties who used one at their job, had to wear glasses.

I think quantum dot could bring back that insanely fast refresh speeds while maintaining LCD's advantages. But it would take real quantum dot, not that contraption Samsung sells these days. Then again, Samsung's XL20 was also promising back in the day and the tech never took off...

Edit: Looks like Asus also has the fan...

If Acer could not take the time to make a design without a fan, then they are not worthy of my money.

This is what you get when you jump on trends as they surface.

...that's not an excuse for manufacturers though.

...just like abandoning 16:10 :banghead: