Monday, September 3rd 2018

NVIDIA GeForce RTX 2080 Ti Benchmarks Allegedly Leaked - Twice

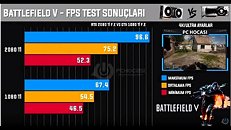

Caveat emptor: take this with a grain of salt, as the usual warnings about hardware performance rumors apply here. That said, a Turkish YouTuber, PC Hocasi TV, put up and then quickly took down a video going through his benchmark results for the new NVIDIA GPU flagship, the GeForce RTX 2080 Ti, across a plethora of game titles. The results are not out of line, but the titles include a game still in beta (Battlefield 5) and an online shooter (PUBG), so a second grain of salt is needed to season this gravy.

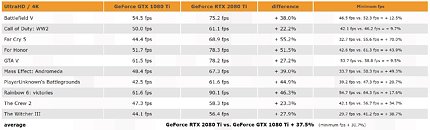

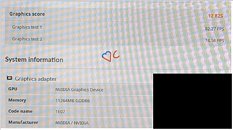

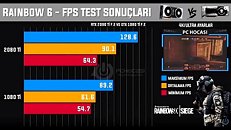

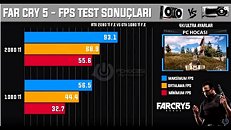

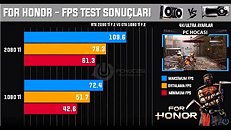

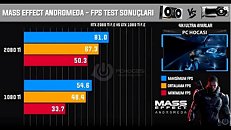

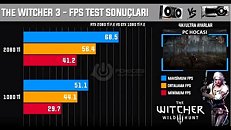

As it stands, 3DCenter.org put together a nice summary of the relative performance of the RTX 2080 Ti compared to the GeForce GTX 1080 Ti from last generation. Based on these results, the RTX 2080 Ti is approximately 37.5% better than the GTX 1080 Ti as far as average FPS goes and ~30% better on minimum FPS. These figures are in line with expectations from hardware analysts, and the timing of the results, so close to the GPU's launch, does lend some credence to the numbers. Adding to this leak is yet another, this time based on a 3DMark Time Spy benchmark.

The second leak in question comes from an anonymous source who sent VideoCardz.com a photograph of a monitor displaying a 3DMark Time Spy result for a generic NVIDIA graphics device with code name 1E07 and 11 GB of VRAM on board. Its graphics score of 12,825 is approximately 35% higher than the average score of ~9500 for the GeForce GTX 1080 Ti Founders Edition. This increase in performance matches up closely to the average increase in game benchmarks seen before and, if these stand with release drivers as well, then the RTX 2080 Ti brings with it a decent but not overwhelming performance increase compared to the previous generation in titles that do not make use of in-game real-time ray tracing. As always, look out for a detailed review on TechPowerUp before making up your minds on whether this is the GPU for you.

For those interested, screenshots of the first set of benchmarks are included with this article (taken from Joker Productions on YouTube).
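As a quick sanity check on the quoted Time Spy uplift, the arithmetic can be sketched in a few lines of Python. The function name `percent_uplift` is our own, and the ~9500 baseline is only the approximate average quoted above, so treat the result as a rough figure rather than a measured one.

```python
def percent_uplift(new_score: float, baseline_score: float) -> float:
    """Return how much higher new_score is than baseline_score, in percent."""
    if baseline_score <= 0:
        raise ValueError("baseline_score must be positive")
    return (new_score / baseline_score - 1.0) * 100.0


# Figures quoted in the article; the baseline is an approximate average,
# not a score from a specific benchmark run.
leaked_time_spy_score = 12825
gtx_1080_ti_fe_average = 9500

uplift = percent_uplift(leaked_time_spy_score, gtx_1080_ti_fe_average)
print(f"Time Spy graphics score uplift: {uplift:.1f}%")
```

With these two inputs the uplift works out to roughly 35%, which is where the figure in the text comes from. Because the baseline is approximate, a shift of a few hundred points in either score moves the result by several percentage points.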


86 Comments on NVIDIA GeForce RTX 2080 Ti Benchmarks Allegedly Leaked - Twice

People were spoiled by nvidia doing a good job of creating faster architectures, not from a single arch change. If RTX 2000 is indeed just a small jump over pascal, that will be a disappointment on par with the 400 series. If you need an upgrade? Once the 2000s come out, it will be 6 months before you can buy one, and signs point to it not being all that much faster.

Getting 85% of that future performance today for 50% of the price is a good deal. It's not like that old tech will just stop working.

Not optimizing new software for older arches is not gimping. Read a dictionary sometime. Gimping is retroactively LOWERING performance of a previous product, which has been proven time and time again to be completely false with nvidia.

They just no longer optimize for previous generations. AMD did this too, and I'll bet you were not complaining when they stopped optimizing for the 4000 series, or evergreen, or the 9000 series, etc. The only reason they continue to optimize for GCN is because they have no choice, they are still selling that arch.

The moment GCN is either replaced or updates to a point where it no longer functions similarly to GCN 1, optimization for GCN will stop completely. It's SOP for GPU makers, has been since the beginning of time.

otw the avg difference would be something like 15-20% max

just gotta wait til the price is less than three kidneys

Strix 1080 OC Vs 2070/2080

if nvidia will get away with this (sell well). trust me - next rtx 3070 will cost FE edition 899$ and potential BP 799$ (that in market == Null), and guess what - yes, AMD will come around, but they wont bie like "lets sell our rtx 3070 eqv for -400$ less", they be like "lets adjust to market and give -50$, maybe -100$". so thx to all proud preorderers and "+30% for +80% price jump is not that bad, dont hate, if you have money - go for it" - we might have 1000$ midrange gpus as soon as next gen even though, no mining craze

Sure, the price is Titan-level, but... the card is very Titan-like in other aspects as well. Tensor cores, RT cores...

Maybe it's a shift in product lineup? RTX could replace Titan and we may still get a GTX2080. Dropping GTX brand seemed very fishy from the beginning.

Honestly, I hoped for Tensor and RT cores in budget cards in this generation. But having read a bit more about this tech and alleged R&D put into it, an RTX 2060 suddenly seems much less possible... RTX 3060 as well... Damn. :-/

There's nothing wrong with gaming at 1080p. Wut!

All rumours say it runs hotter, hence the dual fan set up.

You an Nvidia shill sent here to dispel that rumour?

As far as radiated heat goes, it's going to be close enough to 1080Ti with the same 250W (or 10W more at 260W for clock bump) of power consumption.

Gimping is lowering the performance of older hardware with new drivers, in comparison to older drivers. Perhaps you should read a dictionary sometimes.

Optimising new software for old hardware has nothing to do with it, they should be at least capable of running at prior levels of performance, not lower, which has been shown many times through forums like this one.