Monday, February 18th 2019

NVIDIA: Image Quality for DLSS in Metro Exodus to Be Improved in Further Updates, and the Nature of the Beast

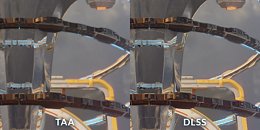

NVIDIA, in a blog post/Q&A on its DLSS technology, promised implementation and image quality improvements for the Metro Exodus rendition of the technology. If you'll remember, AMD recently vouched for other, non-proprietary ways of achieving the desired AA quality across resolutions, such as TAA and SMAA, saying that DLSS introduces "(...) image artefacts caused by the upscaling and harsh sharpening." In its blog post, NVIDIA dissects its DLSS implementation and clarifies some lingering questions on the technology and its resolution limitations that some of us here at TPU had already wondered about.

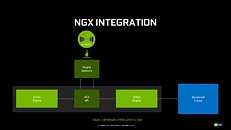

The blog post describes some of the limitations in DLSS technology, and why exactly image quality issues might be popping up here and there in titles. As we knew from NVIDIA's initial RTX press briefing, DLSS basically works on top of an NVIDIA neural network. Titled NGX, it processes millions of frames from a single game at varying resolutions, with DLSS, and compares them to a given "ground truth image" - the highest quality possible output sans any shenanigans, generated from just pure raw processing power. The objective is to train the network towards generating this image without the performance cost. This DLSS model is then made available for NVIDIA's client to download and run locally on your RTX graphics card, which is why DLSS image quality can be improved with time. It also helps explain why closed implementations of the technology, such as 3DMark's Port Royal benchmark, show such incredible image quality compared to, say, Metro Exodus - there is a very, very limited number of frames that the neural network needs to process towards achieving the best image quality.

Forumites: This is an Editorial
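The training objective described above can be sketched in a few lines. This is only a toy illustration, not NVIDIA's model: the nearest-neighbour upscaler stands in for the neural network, the mean squared error is an assumed loss, and the names `upscale_nearest`, `mean_squared_error`, `low_res` and `ground_truth` are hypothetical. The point is simply that training means pushing the error against the "ground truth image" towards zero.

```python
def upscale_nearest(frame, factor):
    """Upscale a 2D grayscale frame by repeating each pixel `factor` times.

    Stand-in for the trained network (assumption, not NVIDIA's method).
    """
    out = []
    for row in frame:
        wide = [px for px in row for _ in range(factor)]
        out.extend([list(wide) for _ in range(factor)])
    return out


def mean_squared_error(a, b):
    """Average squared per-pixel difference between two same-sized frames."""
    total = 0.0
    count = 0
    for row_a, row_b in zip(a, b):
        for pa, pb in zip(row_a, row_b):
            total += (pa - pb) ** 2
            count += 1
    return total / count


# Hypothetical 2x2 low-resolution render and its 4x4 "ground truth".
low_res = [[0.0, 1.0],
           [1.0, 0.0]]
ground_truth = [[0.0, 0.0, 1.0, 1.0],
                [0.0, 0.0, 1.0, 1.0],
                [1.0, 1.0, 0.0, 0.0],
                [1.0, 1.0, 0.0, 0.5]]

# The lower this number, the closer the upscaled frame is to the ground truth.
loss = mean_squared_error(upscale_nearest(low_res, 2), ground_truth)
print(loss)
```

A real network would adjust its weights to shrink this error over millions of frames, which is why a fixed benchmark run is a far easier target than an entire game.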

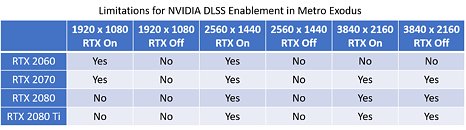

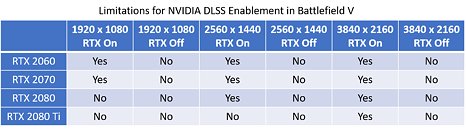

The nature of DLSS means that the network needs to be trained for every conceivable resolution, since different rendering resolutions require different processing for the image to resemble the ground truth we're looking for. This is the reason for Metro Exodus' limits in DLSS - it's likely that NVIDIA didn't deliberately leave it disabled at 1080p with RTX off; rather, there wasn't enough rendering time on its NGX cluster before launch to cover all of the most popular resolutions, with and without RTX, across its three available settings. So NVIDIA tied both settings together, to allow the greatest image quality and performance improvements for those gamers that want to use RTX effects, and didn't train the network for non-RTX scenarios.

This brings with it a whole lot of questions - how long exactly does NVIDIA's neural network take to train an entire game's worth of DLSS integration? With linear titles, this is likely a great technology - but apply this to an open-world setting (oh hey, like Metro Exodus) and this seems like an incredibly daunting task. NVIDIA had this to say on its blog post:

Source: NVIDIA


"For Metro Exodus, we've got an update coming that improves DLSS sharpness and overall image quality across all resolutions that didn't make it into day of launch. We're also training DLSS on a larger cross section of the game, and once these updates are ready you will see another increase in quality. Lastly, we are looking into a few other reported issues, such as with HDR, and will update as soon as we have fixes."

So this not only speaks to NVIDIA recognizing that DLSS image quality isn't at the level it's supposed to be (which implies it can actually degrade image quality, giving further credence to AMD's remarks on the matter), but also confirms that they're constantly working on improving DLSS' performance and image quality - and more interestingly, that this is something they can always change, server-side. I'd question the sustainability of DLSS' usage, though; the number of DLSS-enabled games is low enough as it is - and yet NVIDIA seems to be having difficulty keeping up even when it comes to AAA releases. Imagine if DLSS picked up like NVIDIA would like it to (or would they?) and expanded to most launched games. Looking at what we know, I don't even think that scenario of support would be possible - NVIDIA's neural network would be bottlenecked by all the processing time required for these games, their different rendering resolutions and RTX settings.

DLSS really is a very interesting technology that empowers the RTX graphics card of every user with the power of the cloud, as NVIDIA said it would. However, there are certainly some quirks that require more processing time than they've been given, and there are limits to how much processing power NVIDIA can and will dedicate to each title. That the network needs to be trained again and again and again for every new title out there bodes well for a controlled, NVIDIA-fed games development environment, but that's not the real world - especially not with an AMD-led console market. I'd question DLSS' longevity and success on these factors alone, whilst praising its technology and forward-thinking design immensely.

112 Comments on NVIDIA: Image Quality for DLSS in Metro Exodus to Be Improved in Further Updates, and the Nature of the Beast

There is one certainty: it will not be predictable and thus not quite as consistent as many people would want or expect. Keep in mind we're just talking about an alternative to AA, but it's being sold as a 'performance improvement with unnoticeable IQ loss'. Well, it's as unnoticeable as a 192kbps MP3 is lossy - sounds like absolute shit.

There is just no way this investment will ever pay off, neither for gamers nor for Nvidia. It is exactly as Aquinus says: you've got the hardware, why not utilize it? Which once again supports my stance on RTX: afterthought material. I'm not sure how many big fat warning signs people need to realize that...

1. That you use the correct encoder and not one sloppily put together by nitwits.

2. That the audio in question being encoded is of enough dynamic acoustic range to need more than 192kbps.

3. That the listener can actually hear the difference between 192kbps and 256kbps or 320kbps.

See, if you use a crap encoder it doesn't matter what bitrate you use because it's going to sound like crap anyway. If you use a good encoder but will be playing the music back on a low quality device or in a car, the encoder/bitrate still won't matter. And if the music is hard rock or dialog, there will be very little benefit to using much over 192kbps because the differences will be hard to make out. If you're encoding high dynamic range audio (such as classical music), will be playing it back on high quality equipment and have a good encoder, then yes, you'll hear the difference between 192kbps and 320kbps.

My point is: the reason your logic concerning RTX is flawed and incorrect is that you fail to see the points that count and matter. RTRT is going to replace the currently used imperfect and unrealistic lighting/rendering methods because ray-tracing is far more realistic. It's also got decades of proven use and learning behind it. The current lighting methods have reached the limit of what they can do and how far they can be taken before they literally become a form of ray-tracing. RTRT is the natural and logical progression development needs to follow. The nay-saying you and others continue to regurgitate falls flat on its face because it's based on feelings instead of fact and merit-based logic. RTRT is the future, the writing is on the wall. NVidia knows it, AMD knows it, Intel knows it, why don't you?

My logic is the market, and so far it's not moving, despite Nvidia's constant push. There is no buzz. People don't give a shit. And neither do I. Games look fine and games with RTRT are absolutely not objectively better looking; in fact, in terms of playability they are occasionally objectively worse. AMD knows it? Nah, AMD is waiting for the dust to settle - a very wise choice, albeit one out of pure necessity. Don't mistake a push from the industry for popularity. There have been many innovations that simply didn't get picked up and are now, at the very best, a niche, if they even still exist. You can look at VR for that as a recent example.

I understand your stance and I recognize it; it's the same enthusiasm as we saw with VR. 'This is the future of gaming', people said. Both VR and RTRT are technologies that require a very high penetration rate to actually gain traction, because the initial expense is high and the competition (regular games) is extremely strong and can make a competitive product at a much lower price.

Don't mistake 'points that count and matter' - to you - as points that are applicable to everyone. The market determines what technologies live or die, and we all represent an equal, tiny portion of that market.

The vaseline effect is there along with the loss of texture detail the games are experiencing in FF, BFV and Metro.

If I hadn't had a 9900K die on me and spent today testing through 3 boards and endless configs, I would test it.

The something else is (unless I'm missing the explanation) a kind of edge reflection and grain you don't see in any of the games. It's like the RT roughness setting is highlighted on the edges, causing them to be reflective. Maybe that's the intent, or a by-product of RT object roughness being reflective, but it just looks off.

Raytracing is the past, present and future. RTRT is the present and future. Why? Because it mimics nature and looks great when done right. And for similar reasons DLSS is going to be the future of pixel-edge-blending. Anti-aliasing was good for its time, but its time has passed.

I want to make it clear that I don't think that this is bad, it's just disingenuous to call it in any way anti-aliasing, because it's not. It's really just smart scaling. It's the opposite of nVidia's DSR.

The CEO is telling us it will take 3x longer to sell cards, and here you're saying they are the fastest sellers in its GPU history.