Saturday, September 19th 2020

NVIDIA Readies RTX 3060 8GB and RTX 3080 20GB Models

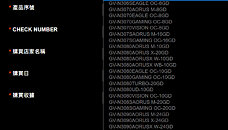

A GIGABYTE webpage meant for redeeming the RTX 30-series Watch Dogs Legion + GeForce NOW bundle lists the graphics cards eligible for the offer, including a large selection based on unannounced RTX 30-series GPUs. Among these are references to a "GeForce RTX 3060" with 8 GB of memory and, more interestingly, a 20 GB variant of the RTX 3080. The list also confirms the RTX 3070S with 16 GB of memory.

The RTX 3080 launched last week comes with 10 GB of memory across a 320-bit memory interface, using 8 Gbit memory chips, while the RTX 3090 achieves its 24 GB memory amount by piggybacking two of these chips per 32-bit channel (chips on either side of the PCB). It's conceivable that the RTX 3080 20 GB will adopt the same method. There is a vast price gap between the RTX 3080 10 GB and the RTX 3090, which NVIDIA could look to fill with the 20 GB variant of the RTX 3080. Whether you should wait for the 20 GB variant of the RTX 3080 or pick up the 10 GB variant right now will depend on the performance gap between the RTX 3080 and RTX 3090. We'll answer this question next week.
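The memory arithmetic above can be sketched in a few lines of Python. This is a minimal illustration only: the function name `vram_gb` and its parameters are made up for this example, and the 384-bit bus width used for the RTX 3090 is an assumption not stated in the text above (it is the width that yields 24 GB with two 8 Gbit chips per 32-bit channel). The 20 GB line models the speculated configuration, not a confirmed one.

```python
def vram_gb(bus_width_bits, chip_density_gbit, chips_per_channel=1,
            channel_width_bits=32):
    """Total memory in GB for a given bus width and chip layout (hypothetical helper)."""
    # Each 32-bit channel hosts one chip, or two when chips sit on both PCB sides.
    channels = bus_width_bits // channel_width_bits
    total_gbit = channels * chips_per_channel * chip_density_gbit
    return total_gbit / 8  # 8 Gbit = 1 GB


print(vram_gb(320, 8))                        # RTX 3080: 10.0
print(vram_gb(384, 8, chips_per_channel=2))   # RTX 3090 (384-bit assumed): 24.0
print(vram_gb(320, 8, chips_per_channel=2))   # speculated RTX 3080 20 GB: 20.0
```

Doubling the chips per channel on the same 320-bit interface is what takes the RTX 3080 from 10 GB to 20 GB in this sketch, without changing the bus width.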

Source: VideoCardz


157 Comments on NVIDIA Readies RTX 3060 8GB and RTX 3080 20GB Models

2080 performance is drastically affected when texture quality is increased so that it no longer fits into 8 GB (but still fits into the 3080's 10 GB, which makes the 3080 look better)

www.techpowerup.com/273007/crysis-3-installed-on-and-run-directly-from-rtx-3090-24-gb-gddr6x-vram

I'd wait till we see the performance comparisons ... not what MSI Afterburner or anything else says is being allocated ... I wanna see how fps is impacted. So far with 6xx, 7xx, 9xx, there's been little observable impact between cards with different RAM amounts when performance was above 30 fps. We have seen an effect with poor console ports, and we have seen illogical effects where the % difference was larger at 1080p than at 1440p ... which makes no sense. But every comparison I have read has not been able to show a significant fps impact.

In instances where a card comes in an X RAM version and a 2X RAM version, we have seen various utilities say that VRAM between 1X and 2X is being allocated, but we have not seen a significant difference in fps when fps > 30. We have even seen games refuse to install with the X RAM card, but after installing the game with the 2X RAM card, it plays at the same fps with no impact on the user experience when you switch to the X RAM version afterwards.

GTX 770 4GB vs 2GB Showdown - Page 3 of 4 - AlienBabelTech

The 1060 3GB was purposely gimped with 11% fewer shaders because, otherwise, it would have shown no impact from having half the VRAM. VRAM usage increases with resolution, so the 6% fps advantage that the 6GB card with 11% more shaders had should have widened significantly at 1440p. It did not, thereby proving that the VRAM was of no consequence. Of the 2 games that had more significant impacts, in one of them the performance of the 3 GB and 6 GB cards was closer at 1440p than at 1080p ... clearly this defiance of logic points to the fact that other factors were at play in this instance. The 1060 was a 1080p card ... if 3 GB was inadequate at 1080p, then it should have been a complete washout at 1440p, and it wasn't ...

tpucdn.com/review/msi-gtx-1060-gaming-x-3-gb/images/perfrel_1920_1080.png

tpucdn.com/review/msi-gtx-1060-gaming-x-3-gb/images/perfrel_2560_1440.png

They have to cut the GPU down, otherwise it will be obvious that the VRAM has no impact on fps when within the playable range.

RAM allocation is much different than RAM usage

Video Card Performance: 2GB vs 4GB Memory - Puget Custom Computers

GTX 770 4GB vs 2GB Showdown - Page 3 of 4 - AlienBabelTech

Is 4GB of VRAM enough? AMD’s Fury X faces off with Nvidia’s GTX 980 Ti, Titan X | ExtremeTech

From 2nd link

"There is one last thing to note with Max Payne 3: It would not normally allow one to set 4xAA at 5760×1080 with any 2GB card as it claims to require 2750MB. However, when we replaced the 4GB GTX 770 with the 2GB version, the game allowed the setting. And there were no slowdowns, stuttering, nor any performance differences that we could find between the two GTX 770s. "