Thursday, June 3rd 2021

Intel 12th Gen Core Alder Lake to Launch Alongside Next-Gen Windows This Halloween

Intel is likely targeting a Halloween (October 2021) launch for its 12th Generation Core "Alder Lake-S" desktop processors, along the sidelines of the next-generation Windows PC operating system, which is being referred to in the press as "Windows 11," according to "Moore's Law is Dead," a reliable source of tech leaks. This launch timing is key, as the next-gen operating system is said to feature significant changes to its scheduler, to make the most of hybrid processors (processors with two kinds of CPU cores).

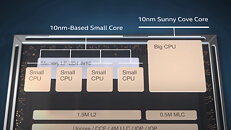

The two CPU core types on "Alder Lake-S," the performance "Golden Cove," and the low-power "Gracemont" ones, operate in two entirely different performance/Watt bands, and come with different ISA feature-sets. The OS needs to be aware of these, so it knows exactly when to wake up performance cores, or what kind of processing traffic to send to which kind of core. Microsoft is expected to unveil this new-gen Windows OS on June 24, with retail availability expected in Q4-2021.

Source:

Moore's Law is Dead (Twitter)

38 Comments on Intel 12th Gen Core Alder Lake to Launch Alongside Next-Gen Windows This Halloween

I wonder if Open Shell will support it..

Considering MS' track record, who would want to be their "beta testers" for the first 6-12 months?

Trick or treat seems appropriate for anything from either of them, but reality is always trickery lol

Probably not a big improvement, but nevertheless, it's not as bad as you claim it to be.

And not everything in games is synchronized.

Network threads, for example, are very likely not to be, with a little core being enough to do most network tasks.

Nor are a lot of tasks like graphics. Ever seen assets pooping up?

He said for mobile, which is certainly true, as high-efficiency cores will mean that mobile will enjoy a much better experience with higher battery life.

Windows scheduling for big/little cores would only work when you have those, obviously, so I have absolutely no clue what you are talking about when you say it would 'sabotage the performance'.

There's some hardware in the chips to help with that. How it works hasn't been revealed, though, and might never be.

However, this problem is already known and already has some solutions. Linux has supported big.LITTLE in its scheduler for a long time, and the information the OS already has about tasks is enough to build some heuristics from.
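As a rough illustration of the kind of heuristic meant here, below is a toy sketch, not how Linux or Windows actually decides. The `Task` class, the `LITTLE_CAPACITY` and `HEADROOM` values, and the task names are all made up for the example; the only idea taken from the discussion is "use per-task load statistics to pick a core type".

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    # Recent load as a fraction of one big core's capacity (0.0 to 1.0).
    recent_utilization: float


# Hypothetical numbers: a little core is assumed to offer 40% of a big
# core's capacity, and we only fill it to 80% so short bursts still fit.
LITTLE_CAPACITY = 0.4
HEADROOM = 0.8


def pick_core_type(task: Task) -> str:
    """Put a task on a little core only if its recent load fits with headroom."""
    if task.recent_utilization <= LITTLE_CAPACITY * HEADROOM:
        return "little"
    return "big"


tasks = [Task("network", 0.05), Task("audio", 0.10), Task("render", 0.90)]
placement = {t.name: pick_core_type(t) for t in tasks}
print(placement)
```

With these made-up numbers the light network and audio tasks land on little cores and the render task stays on a big core. A real scheduler would also have to handle tasks whose load changes suddenly.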

Very likely the hardware that Intel put in is just that: more task statistics to guide the OS into making the right choice.

They might actually be quite decent. The previous generation of the little cores, Tremont, turbos to 3.3 GHz, and the rumours are that Gracemont will have a similar IPC to Skylake.

Very possibly they will turbo higher than Tremont's 3.3 GHz, being an enhanced version with a higher TDP on desktop, etc. Though 3.3 GHz is already on par with the original Skylake, i.e. the i5-6500 had an all-core turbo of 3.3 GHz.

The L2 is shared among a cluster of 4 small cores, not all of them. Plus, it's 2 MB of L2, so basically 512 KB/core. Very likely it's multi-ported.

Obviously they aren't going to be high power, but if Intel manages to get them to turbo to 3.5+ GHz or so, it would be more than good enough and could be pretty similar to an i7 from 2015~2016 (original Skylake). If they manage to make it clock better, then well, that's good.

The minimum would likely be around an i5-6500, considering that Tremont is already pretty close to it and the new Atom architecture might bring it to IPC parity with it, or close enough.

That is, assuming that the rumors are sort of true. If they hold no truth whatsoever, then we have no clue.

Little cores would be a pretty small part of the die anyway. The L3 cache already takes most of the die, so that isn't an issue.

But in any user application, and especially games, all threads will have medium or high priority, even if the load is low. So just using statistics to determine where to run a thread risks causing serious latencies.

Most things in a game are synchronized, some with the game simulation, some with rendering, etc. If one thread is causing delays, it will have cascading effects, ultimately causing increased frame times (stutter) or, even worse, delays to the game tick, which may cause game-breaking bugs.
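To put rough numbers on that cascading effect, here is a minimal sketch that assumes every thread must finish before the frame can complete, so the frame time is simply the slowest thread's time. The thread names, the millisecond figures, and the 2x `SLOWDOWN` for a little core are invented for illustration.

```python
def frame_time_ms(thread_times_ms):
    """A frame is done only when every synchronized thread has finished."""
    return max(thread_times_ms.values())


# Hypothetical per-frame work with all threads on performance cores.
on_big = {"simulation": 6.0, "render_submit": 7.0, "audio": 2.0}

# Same work, but "simulation" lands on a core assumed to be 2x slower.
SLOWDOWN = 2.0
misplaced = dict(on_big, simulation=on_big["simulation"] * SLOWDOWN)

print(frame_time_ms(on_big))     # 7.0
print(frame_time_ms(misplaced))  # 12.0
```

In this toy case a single misplaced thread pushes the frame from 7 ms to 12 ms even though the other threads are unaffected, and the low-load audio thread could have moved to a slower core without changing the frame time at all.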

Networking may or may not be a big deal; it will at least risk having higher latencies.

Graphics are super sensitive. Most people will be able to spot small fluctuations in frame times.

I see fairly little poop on my screens ;)

Asset popping is mostly a result of "poor" engine design, since many engines rely on feedback from the GPU to determine which higher-detail textures or meshes to load, which will inevitably lead to several frames of latency. This is of course more noticeable if the asset loading is slower, but it's still there, no matter how fast your SSD and CPU may be. The only proper way to solve this is to pre-cache assets, which a well tailored engine can easily do, but the GPU will not be able to predict this.

Don't forget that most apps on Android are laggy anyway, and it's impossible for the end user to know what causes the individual cases of stutter or misinterpreted user input.

So I wouldn't say that this is a good case study that hybrid CPUs work well.

Heuristics help the average, but do little for the worst case, and the worst case is usually what causes latency.

There is a very key detail that you are missing. Even if the small cores have IPC comparable to Skylake, it's important to understand that IPC does not equate to performance, especially when multiple cores may be sharing resources. If they are sharing L2, then the real-world impact of that will vary a lot, especially since L2 is very closely tied to the pipeline, so any delay here is way more costly than a delay in e.g. L3.

Volatile memory is a finite resource and there's less of it than HDDs/SSDs have; even a 'well tailored engine' might not have all the assets it needs cached.

Not talking about Android specifically. Android is the worst-case scenario, since the architecture is really made to support a vast array of devices, with each OEM providing the HALs needed to support the devices. For example, audio was something that had an unacceptably high latency because of those abstractions. Just check this article: superpowered.com/androidaudiopathlatency

Linux, however, is doing a good job at it. They have energy-aware scheduling and a lot of other features, really

www.kernel.org/doc/html/latest/scheduler/sched-energy.html

or patches like this

lore.kernel.org/patchwork/patch/933988/

It all depends. If the scheduler has a flag that says something like 'this task cannot be put on a little core', then it shouldn't cause the worst-case scenario. That of course was just an example; we don't know exactly how the scheduler, and the hardware that Intel will put into the chip to help with it, actually works.

Of course a delay in L2 is way more costly than one in L3; they have pretty different latencies. And no, L2 generally isn't super tied to the pipeline; L1I and L1D would be more tied to it.

Now about sharing resources, yes, that's true. But also keep in mind that the L2 is very big, at 2 MB per 4 Gracemont cores, which is more per core than Skylake (and its optimizations like Comet Lake) had at 256 KB/core. I find it unlikely that it would cause an issue: even assuming that all 4 cores are competing for resources, they will have 512 KB each. The worrying part isn't resource starvation, as there is more than enough to feed 4 little cores; it's how a big L2 slice like that behaves in terms of latency. The latency will obviously be considerably higher than that of a 512 KB L2, but then, those aren't high-performance cores, so it might not end up being noticeable.

Another thing that takes a little strain off the L2 is that each little core has 96 KB of L1, 64 KB being L1I and 32 KB being L1D.
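For anyone who wants to check the cache arithmetic, a quick sketch using only the rumored figures quoted in this thread (2 MB of L2 per 4-core cluster, 256 KB of L2 per Skylake core, 64 KB L1I plus 32 KB L1D per little core). The variable names are just for the example, and none of the figures are confirmed by Intel.

```python
KB = 1024
MB = 1024 * KB

# Rumored figures from the discussion, not confirmed specifications.
cluster_l2 = 2 * MB
cores_per_cluster = 4
skylake_l2_per_core = 256 * KB
l1i, l1d = 64 * KB, 32 * KB

# Even split of the shared L2 when all four cores compete for it.
l2_share = cluster_l2 // cores_per_cluster
ratio_vs_skylake = l2_share / skylake_l2_per_core
l1_total = l1i + l1d

print(l2_share // KB)    # 512
print(ratio_vs_skylake)  # 2.0
print(l1_total // KB)    # 96
```

So even in the worst case of an even four-way split, each little core would have twice the L2 a Skylake core had. This says nothing about latency, which is the open question.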

Anyway, we can't know for sure until Intel releases Alder Lake; this is all unsupported speculation until then. And it all depends on how good 10 nm ESF ends up being and how they clock those little cores. Knowing Intel, they might do it pretty aggressively for desktop parts, so really, 3.6 GHz or more could have a chance of happening, or they could just clock it like Tremont and keep it at 3.3 GHz. Nobody knows.