- Joined: Jul 10, 2015
- Messages: 755 (0.21/day)
- Location: Sokovia

| System Name | Alienation from family |
|---|---|
| Processor | i7 7700k |
| Motherboard | Hero VIII |
| Cooling | Macho revB |
| Memory | 16gb Hyperx |
| Video Card(s) | Asus 1080ti Strix OC |
| Storage | 960evo 500gb |
| Display(s) | AOC 4k |
| Case | Define R2 XL |
| Power Supply | Be f*ing Quiet 600W M Gold |
| Mouse | NoName |
| Keyboard | NoNameless HP |
| Software | You have nothing on me |
| Benchmark Scores | Personal record 100m sprint: 60m |

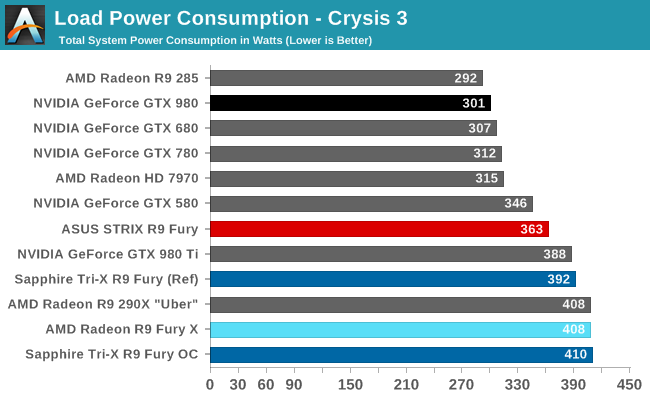

> The STRIX 980 is a STRIX version, and so is the STRIX Fury. Whether or not they could factory overclock it is not our problem. It is just a shame that Wizzard won't add at least the GeForce 980 STRIX to the comparison, as I believe it would be very helpful for all of us who are buying a card in that $550-600 range.

The STRIX GTX 980 is the OC version; the STRIX Fury is running at stock clocks. Still, a +50 MHz OC on the 980 won't change much, tbh.

I am grateful for the review but anyway

he's an AMD fanboy
