> You're forgetting that USB-PD is also 15V. But even if we agree to also ditch 15V, limiting ourselves to 60W max, how many amps can those selector chips handle? If they are doing the job of switching between voltage planes they also need to be capable of handling the maximum current passing through them. I kind of doubt a $1 chip is built to handle 5A or more continuously, but I could of course be wrong. (Also remember it can't just be a dumb PD negotiation chip, it also needs to handle passing through at least 10Gbps data and USB alt modes like DP). I've used a few of those dirt cheap PD receiver boards for DIY projects (so handy! And so cheap!), but those don't work for data transfer, just negotiate power delivery, so you'd need a much more advanced chip than that. Also, where would the extra voltage plane go on today's crowded motherboards? Remember, even a single added layer can drive up board costs dramatically. And remember how competitive the motherboard market is - even saving a dollar or two on the BOM is worth a lot to manufacturers. Not to mention that even ATX boards these days are stuffed to the gills, which will only get worse with DDR5 and PCIe 4.0/5.0. Fitting another voltage plane capable of handling a handful of amps, one or more powerful voltage regulators and a selector chip per port? All behind the rear I/O, where the CPU VRM usually lives?
>
> That sounds both expensive and very cramped. Would probably work well for a couple of ports on an add-in card like the one you shared, but it won't become standard any time soon. Also, any standard feature would need to scale down to ITX size, which... well, just no. Not happening.

This one would be $2 and I'm sure there are cheaper options available. You just pass through the CC pins to it and it handles the power delivery independently from the other communication stuff already present with any USB 3.x host controller. 5A max. DDR5 and PCIe 4+ already make extra layers necessary, and those layers are not really needed in the IO area, giving us much-needed real estate for the extra chips and power planes. It would cost a bit extra, but not that much. ITX would be a problem space-wise, if you wished to have anything but a few USB-C ports at the back.
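To put numbers on the voltage and current figures being argued about, here is a minimal sketch. It assumes the commonly listed USB-PD fixed voltage levels (5, 9, 15 and 20V) and simply multiplies them by the 3A and 5A currents mentioned in the posts; the function names are made up for illustration and do not come from any PD controller's API.

```python
# Rough arithmetic only: power available per USB-PD fixed voltage level.
# The voltage list is an assumption based on the commonly cited PD levels.
PD_FIXED_VOLTAGES_V = (5, 9, 15, 20)


def max_power_w(voltage_v: float, current_a: float) -> float:
    """Power in watts for a given port voltage and current."""
    return voltage_v * current_a


def power_table(current_a: float) -> dict:
    """Map each fixed voltage level to the power it gives at current_a."""
    return {v: max_power_w(v, current_a) for v in PD_FIXED_VOLTAGES_V}


if __name__ == "__main__":
    for amps in (3.0, 5.0):
        print(f"{amps:.0f}A:", power_table(amps))
```

Whatever switches between the voltage planes has to carry the full port current continuously, which is why the current rating of the selector chip matters as much as its price.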

FSP at CES 2020: A Next-Gen Pure 12V PSU, and a UPS That Wants to See the World

- Thread starter btarunr

- Start date

FordGT90Concept

"I go fast!1!11!1!"

- Joined: Oct 13, 2008
- Messages: 26,259 (4.63/day)
- Location: IA, USA

| System Name | BY-2021 |
|---|---|
| Processor | AMD Ryzen 7 5800X (65w eco profile) |
| Motherboard | MSI B550 Gaming Plus |
| Cooling | Scythe Mugen (rev 5) |
| Memory | 2 x Kingston HyperX DDR4-3200 32 GiB |
| Video Card(s) | AMD Radeon RX 7900 XT |
| Storage | Samsung 980 Pro, Seagate Exos X20 TB 7200 RPM |
| Display(s) | Nixeus NX-EDG274K (3840x2160@144 DP) + Samsung SyncMaster 906BW (1440x900@60 HDMI-DVI) |
| Case | Coolermaster HAF 932 w/ USB 3.0 5.25" bay + USB 3.2 (A+C) 3.5" bay |
| Audio Device(s) | Realtek ALC1150, Micca OriGen+ |
| Power Supply | Enermax Platimax 850w |
| Mouse | Nixeus REVEL-X |
| Keyboard | Tesoro Excalibur |
| Software | Windows 10 Home 64-bit |
| Benchmark Scores | Faster than the tortoise; slower than the hare. |

> It's not like it's an easy job to replace a failed VRM in your PSU either.

You swap the unit in 15 minutes and you're done. Motherboard VRM dies, you pray to the silicon gods the CPU, RAM, and peripherals didn't fry with it. You have to undo 6+ screws holding it in, potentially change I/O shield, remove expansion cards, and the list goes on and on. It's easily a two hour job assuming you don't have to start from scratch with the operating system because you were able to find a 1:1 replacement. And let's not forget that PSU might not sense a motherboard VRM is on fire...so it keeps throwing juice at it until it burns down the house.

> Remember, modern PCs power everything important off 12V already, with both the CPU, PCIe and anything else power hungry using it.

But they don't leave it 12V. They drop it down to much less. What I'm saying is get rid of the obsolete voltages (especially 3.3v) and add circuits that more closely match what that hardware requires. Keep the power complexity in the PSU and simplify motherboards/AIBs.

> And when a PSU fails, the average consumer can fix it?? It's RMA time for both, bud.

Doesn't matter who is fixing it, PSU is always going to take less time to test and replace.

> I'm thinking the alternative would be to change USB standards to use 12v and force attached devices to become responsible for converting what's needed for 5v or 3.3v or whatever. That's still assuming this all changes to a pure 12v system.

USB can't change from 5v. Too many peripherals would be obsoleted.


- Joined: Dec 31, 2009
- Messages: 19,366 (3.70/day)

| Benchmark Scores | Faster than yours... I'd bet on it. :) |
|---|---|

> You swap the unit in 15 minutes and you're done. Motherboard VRM dies, you pray to the silicon gods the CPU, RAM, and peripherals didn't fry with it. You have to undo 6+ screws holding it in, potentially change I/O shield, remove expansion cards, and the list goes on and on. It's easily a two hour job assuming you don't have to start from scratch with the operating system because you were able to find a 1:1 replacement. And let's not forget that PSU might not sense a motherboard VRM is on fire...so it keeps throwing juice at it until it burns down the house.
>
> But they don't leave it 12V. They drop it down to much less. What I'm saying is get rid of the obsolete voltages (especially 3.3v) and add circuits that more closely match what that hardware requires. Keep the power complexity in the PSU and simplify motherboards/AIBs.
>
> Doesn't matter who is fixing it, PSU is always going to take less time to test and replace.

Ya swap the parts man... both parts. I don't care how many screws or how many times you hokey pokey... you RMA the shits and replace it.

I don't want those parts moved to the PSU... at least for that listed reason.

Can you go back to correcting storm-chaser please? LOLOLOLOLOL

24-pin free?

Something that's already a reality on server boards:

- ASRock Rack > C246 WSI (www.asrockrack.com)
- X11SSV-Q | Motherboards | Products | Supermicro (www.supermicro.com)

So helpful to clean up a SFF build.

- Joined: May 2, 2017
- Messages: 7,762 (3.04/day)
- Location: Back in Norway

| System Name | Hotbox |
|---|---|
| Processor | AMD Ryzen 7 5800X, 110/95/110, PBO +150Mhz, CO -7,-7,-20(x6), |
| Motherboard | ASRock Phantom Gaming B550 ITX/ax |
| Cooling | LOBO + Laing DDC 1T Plus PWM + Corsair XR5 280mm + 2x Arctic P14 |
| Memory | 32GB G.Skill FlareX 3200c14 @3800c15 |
| Video Card(s) | PowerColor Radeon 6900XT Liquid Devil Ultimate, UC@2250MHz max @~200W |
| Storage | 2TB Adata SX8200 Pro |
| Display(s) | Dell U2711 main, AOC 24P2C secondary |
| Case | SSUPD Meshlicious |
| Audio Device(s) | Optoma Nuforce μDAC 3 |
| Power Supply | Corsair SF750 Platinum |
| Mouse | Logitech G603 |
| Keyboard | Keychron K3/Cooler Master MasterKeys Pro M w/DSA profile caps |
| Software | Windows 10 Pro |

> This one would be $2 and I'm sure there are cheaper options available. You just pass through the CC pins to it and it handles the power delivery independently from the other communication stuff already present with any USB 3.x host controller. 5A max. DDR5 and PCIe 4+ already make extra layers necessary, and those layers are not really needed in the IO area, giving us much-needed real estate for the extra chips and power planes. It would cost a bit extra, but not that much. ITX would be a problem space-wise, if you wished to have anything but a few USB-C ports at the back.

Hm, that's not bad. It's a 7x7mm package though, so not an insubstantial size once you're looking at more than 1-2 ports (though this could likely all be made into a stand-alone block/tower of 2-3 ports with an integrated daughterboard for compact rear I/O implementations, but it would be expensive). Nonetheless I guess exactly that - 1-2 ports - would be feasible without driving up costs too much. The next challenge is bigger though: I think the real use case for something like this would be for front panel ports (who charges their phone from the rear I/O of their desktop PC?), which would require another new standard for front panel headers to handle the amperage, beyond the 3A rating of the current 20-pin USB 3.1 Gen 2 header, and that's with the trickle-slow adoption of the newest standard fresh in mind. On the other hand it shouldn't be a problem at all to implement >15W front panel ports simply by implementing motherboard support for >5V through this port (the standard doesn't mention voltage at all). Even just adding the option for 5V or 12V would make this very useful, but going beyond 36W would be a major hurdle. The question then becomes if you would need a new negotiator chip for this to account for the option of splitting the 20-pin header into two legacy ports, though I can't imagine that being a problem.

Nonetheless, full PD compatibility in host ports isn't coming any time soon, if ever.

> Hm, that's not bad. It's a 7x7mm package though, so not an insubstantial size once you're looking at more than 1-2 ports (though this could likely all be made into a stand-alone block/tower of 2-3 ports with an integrated daughterboard for compact rear I/O implementations, but it would be expensive). Nonetheless I guess exactly that - 1-2 ports - would be feasible without driving up costs too much. The next challenge is bigger though: I think the real use case for something like this would be for front panel ports (who charges their phone from the rear I/O of their desktop PC?), which would require another new standard for front panel headers to handle the amperage, beyond the 3A rating of the current 20-pin USB 3.1 Gen 2 header, and that's with the trickle-slow adoption of the newest standard fresh in mind. On the other hand it shouldn't be a problem at all to implement >15W front panel ports simply by implementing motherboard support for >5V through this port (the standard doesn't mention voltage at all). Even just adding the option for 5V or 12V would make this very useful, but going beyond 36W would be a major hurdle. The question then becomes if you would need a new negotiator chip for this to account for the option of splitting the 20-pin header into two legacy ports, though I can't imagine that being a problem.
>
> Nonetheless, full PD compatibility in host ports isn't coming any time soon, if ever.

Legacy ports don't have the CC pin used to communicate power delivery config to the host, so the split would work without a hitch (the PD chip would just default to 5V and stay dormant). TI also has a two-port variant of the same chip in the same size as far as I know, so only one extra chip per two ports. But yeah, good catch regarding the 3A limit on the internal connector, meaning that unless the PD chip is integrated into the front panel itself with its own power connector, we would indeed be realistically limited to 3A at 12V for the front panel USB-C, although implementing that wouldn't cost more than a couple of dollars per board. Odd that no one has done that yet.
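The front panel idea discussed above boils down to two rules: a port without a CC pin stays at 5V, and whatever voltage is chosen is capped by the 3A header rating. A minimal sketch of that logic follows, assuming a hypothetical board that only offers 5V and 12V; the names `port_voltage` and `max_port_power_w` are invented for illustration and are not taken from any real controller.

```python
# Hypothetical sketch of the front panel port logic discussed in the thread.
HEADER_CURRENT_LIMIT_A = 3.0        # header rating cited in the thread
SUPPORTED_VOLTAGES_V = (5.0, 12.0)  # assumed rails for this sketch


def port_voltage(has_cc_pin: bool, requested_v: float) -> float:
    """Pick the port voltage; legacy ports without a CC pin stay at 5V."""
    if not has_cc_pin:
        return 5.0
    # Highest supported voltage that does not exceed what the device asked for.
    candidates = [v for v in SUPPORTED_VOLTAGES_V if v <= requested_v]
    return max(candidates) if candidates else 5.0


def max_port_power_w(voltage_v: float) -> float:
    """Power ceiling at a given voltage under the header current limit."""
    return voltage_v * HEADER_CURRENT_LIMIT_A


if __name__ == "__main__":
    for has_cc, wanted in ((False, 12.0), (True, 9.0), (True, 12.0)):
        v = port_voltage(has_cc, wanted)
        print(f"cc={has_cc} wants {wanted}V -> {v}V, up to {max_port_power_w(v)}W")
```

With these assumptions the ceiling works out to 15W at 5V and 36W at 12V, matching the figures in the posts.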

- Joined: May 2, 2017
- Messages: 7,762 (3.04/day)
- Location: Back in Norway


> You swap the unit in 15 minutes and you're done. Motherboard VRM dies, you pray to the silicon gods the CPU, RAM, and peripherals didn't fry with it. You have to undo 6+ screws holding it in, potentially change I/O shield, remove expansion cards, and the list goes on and on. It's easily a two hour job assuming you don't have to start from scratch with the operating system because you were able to find a 1:1 replacement.

...if you get the same model of PSU and it's fully modular, or if you don't care whatsoever about cable management. Otherwise, replacing a motherboard is far less time consuming than a clean cabling layout, particularly in a cramped case.

Beyond that, it's quite common for dying PSUs to take motherboards and components with them, no? So moving these parts from the PSU to the motherboard technically reduces the number of vulnerable components.

> And let's not forget that PSU might not sense a motherboard VRM is on fire...so it keeps throwing juice at it until it burns down the house.

...but the motherboard would sense it and shut down, which also shuts down the PSU. Problem solved.

> But they don't leave it 12V. They drop it down to much less. What I'm saying is get rid of the obsolete voltages (especially 3.3v) and add circuits that more closely match what that hardware requires. Keep the power complexity in the PSU and simplify motherboards/AIBs.

Unless you're talking about IC level power, pretty much everything runs off 12V these days. Or are you suggesting adding a 0.9-1.5V rail for CPUs to the PSU? That would be lunacy - just imagine the cabling needed to provide >150A of clean power with no drops over 50cm or more. Converting power as close to where it's needed as possible is the far superior solution for efficiency, stability and quality alike.

Beyond that, what you're saying is exactly what they are doing - killing off legacy voltages - but instead of mandating one-size-fits-all lower voltages like current ATX PSUs they are letting hardware convert its own power to suit its needs (which, frankly, is a requirement for current variable voltage ICs anyhow). This is already what all power hungry components do, so this is extremely sensible. It's also by far the most flexible (and therefore future-proof) solution, it reduces the need for space-intensive wiring and connectors, increases efficiency, reduces cable losses and connector heat buildup, and really has no drawbacks.
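The cabling point can be checked with back-of-the-envelope numbers. The sketch below assumes a 180W CPU load, a 50cm run (so 1m of copper there and back), and a single pair of roughly 16 AWG conductors (about 1.31mm²); all of those values are illustrative assumptions, not figures from the thread apart from the 50cm length and the order of magnitude of the current.

```python
# Rough estimate of resistive drop in a cable run at CPU-level vs 12V delivery.
COPPER_RESISTIVITY_OHM_M = 1.68e-8  # approximate value for copper at room temperature


def wire_resistance_ohm(length_m: float, area_mm2: float) -> float:
    """Resistance of a copper conductor of the given length and cross-section."""
    return COPPER_RESISTIVITY_OHM_M * length_m / (area_mm2 * 1e-6)


def delivery_loss(power_w: float, rail_v: float,
                  run_length_m: float = 0.5, area_mm2: float = 1.31,
                  wire_pairs: int = 1):
    """Return (current in A, voltage drop in V, heat lost in the cable in W)."""
    current_a = power_w / rail_v
    # Current flows out and back, so the loop length is twice the run length;
    # parallel wire pairs share the current and divide the resistance.
    loop_r = wire_resistance_ohm(2 * run_length_m, area_mm2) / wire_pairs
    return current_a, current_a * loop_r, current_a ** 2 * loop_r


if __name__ == "__main__":
    for rail in (1.2, 12.0):
        amps, drop, loss = delivery_loss(180.0, rail)
        print(f"{rail}V rail: {amps:.0f}A, drop {drop:.2f}V, cable loss {loss:.1f}W")
```

Under these assumptions a single pair would drop more voltage than the 1.2V rail supplies, while the same power at 12V loses a fraction of a volt. Cable loss scales with the square of the current, so a tenfold lower voltage means a hundredfold higher loss for the same wiring.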

FordGT90Concept

"I go fast!1!11!1!"

- Joined: Oct 13, 2008
- Messages: 26,259 (4.63/day)
- Location: IA, USA


> ...if you get the same model of PSU and it's fully modular, or if you don't care whatsoever about cable management. Otherwise, replacing a motherboard is far less time consuming than a clean cabling layout, particularly in a cramped case.

In the time it takes just to expose the motherboard (HSF removed, expansion cards pulled, power connectors pulled, FP connectors removed, USB headers disconnected, SATA drives disconnected, etc.) you could have already had the PSU disconnected and out of the computer. I'm not even talking the 6+ screws unscrewed yet either. Then you might have a really cramped case where optical drives have to be slid forward just to maneuver the unscrewed motherboard to get it out of the case. It's a big job...the biggest in computer repair.

Just look at what OEMs have where repair is a chief concern of theirs in engineering: I have an HP Ultra-Slim here and the PSU can literally be removed from the computer without any tools in less than a minute. A new one replaces it in less than a minute, also without tools.

Replace the motherboard? At least a thirty minute job and doing so invalidates all of the identification stickers on the machine. Better off recycling it and just moving the equipment to a different machine of the same model.

I've never seen a motherboard designed to be serviceable. PSUs almost always are, fully modular especially so.

> Beyond that, it's quite common for dying PSUs to take motherboards and components with them, no?

Quality PSUs have overcurrent protection so they *can't.* I've yet to have a PSU kill a motherboard but I've never skimped on power supplies either.

> So moving these parts from the PSU to the motherboard technically reduces the number of vulnerable components.

PSUs always have better overcurrent protection than motherboards.

> ...but the motherboard would sense it and shut down, which also shuts down the PSU. Problem solved.

PSUs shunt the power to ground. The quicker and closer the circuit is closed to the AC source, the less damage is possible down the line.

> Unless you're talking about IC level power, pretty much everything runs off 12V these days. Or are you suggesting adding a 0.9-1.5V rail for CPUs to the PSU? That would be lunacy - just imagine the cabling needed to provide >150A of clean power with no drops over 50cm or more. Converting power as close to where it's needed as possible is the far superior solution both for efficiency, stability and quality.

I think the best solution is the return of the slot processor. An AIB gets power directly from the PSU at a given voltage and handles the power delivery to the socket. Part of the PSU, therefore, becomes part of the motherboard but still allowing for replacement and higher quality control of defective part. This also allows any motherboard to be adjusted according to the power demands of the processor installed. Graphics cards pretty much already do this. The same concept should apply to CPUs.

Slotting CPU also gives it its own thermal domain by nature. The problem is the DIMMs really need to be on the AIB too because of the sheer number of electrical connections they require.

> It's also by far the most flexible (and therefore future-proof) solution, it reduces the need for space-intensive wiring and connectors, increases efficiency, reduces cable losses and connector heat buildup, and really has no drawbacks.

Increases the cost and complexity of motherboards.

TL;DR: This idea is great for trying to establish a power supply standard for SFF computers but it's not the best path forward for replacing ATX in regards to power supply.


- Joined: May 2, 2017
- Messages: 7,762 (3.04/day)
- Location: Back in Norway


> In the time it takes just to expose the motherboard (HSF removed, expansion cards pulled, power connectors pulled, FP connectors removed, USB headers disconnected, SATA drives disconnected, etc.) you could have already had the PSU disconnected and out of the computer. I'm not even talking the 6+ screws unscrewed yet either. Then you might have a really cramped case where optical drives have to be slid forward just to maneuver the unscrewed motherboard to get it out of the case. It's a big job...the biggest in computer repair.

Again, this depends entirely on the build. If your PSU is fully modular and there's room to move it around/get at the connectors it is indeed trivial to replace; the same would be true for a non-modular unit if the cabling is the last thing installed in a relatively open space and not tied down. If you need to remove and re-run cabling, especially if this is zip-tied in place or you need to be tidy to fit a tight-fitting side panel on, this can take a long, long time unless you enjoy pinching cables and bending side panels. Replacing the motherboard doesn't require you to remove any cabling whatsoever in any modern ATX case with decent cutouts: just disconnect it from the board (relatively easy outside of tight 24-pins), unplug any AICs (usually easy, 1-2 screws), and pull the board (4-9 screws). Sure, there are some screws involved, but there are 4 screws holding standard PSUs in place too. The cooler is best left on until the board is out unless it's an AIO or otherwise mounted to the case. In an average semi-budget build (air cooled CPU, one GPU, semi-modular PSU, case without lots of room for cable routing) I would say a motherboard replacement can be a lot quicker than a PSU replacement.

> Just look at what OEMs have where repair is a chief concern of theirs in engineering: I have an HP Ultra-Slim here and the PSU can literally be removed from the computer without any tools in less than a minute. A new one replaces it in less than a minute, also without tools.

Absolutely. The problem is those PSUs (and motherboards!) are tailor made for each other with cable runs and everything else made to fit that exact use case perfectly, so you're comparing apples to oranges. Standard form factor builds don't work like this. Beyond that, these OEM SFF PCs often ignore standards too - while I'm only familiar with Dells of this form factor, they have long since abandoned the ATX PSU voltage output standard and ditched the legacy voltages. IIRC modern Dell SFF PCs use 8- or 10-pin PSU connectors, and generate 3.3V and 5V for SATA power on the motherboard with the PSU only providing 12V and 5VSB.

> Replace the motherboard? At least a thirty minute job and doing so invalidates all of the identification stickers on the machine. Better off recycling it and just moving the equipment to a different machine of the same model.

Well, yes, because that's how those machines are designed. Saying "the part that's designed to go in first takes the longest to replace" as if it is an argument for anything is ... odd. That would be true whatever that component was. If the PC was designed so that the PSU was the first part installed it would also be the slowest part to replace.

> I've never seen a motherboard designed to be serviceable. PSUs almost always are, fully modular especially so.

...guess that depends on your definition of "serviceable". Sure, fewer SMDs in a PSU, easier to solder by hand and the components used are more widely available, but neither of these parts is really serviceable outside of professional repair centres. (That doesn't mean a dedicated and knowledgeable hobbyist can't repair a PSU, but hot air soldering/desoldering of VRM components on a motherboard isn't that much more advanced than repairing a PSU. Parts availability is more complicated, though.)

> Quality PSUs have overcurrent protection so they *can't.* I've yet to have a PSU kill a motherboard but I've never skimped on power supplies either.

I haven't either, but there are plenty of horror stories out there. The majority of those are probably either old or use terrible PSUs, but wouldn't simplifying PSU design then help in this regard?

> PSUs always have better overcurrent protection than motherboards.

...but we're talking about a fundamental design change here. Don't you think good OCP would be a desirable feature for motherboards featuring these onboard voltage regulators?

> PSUs shunt the power to ground. The quicker and closer the circuit is closed to the AC source, the less damage is possible down the line.

Doesn't that depend on where the failure happens? Also, aren't PC cases - and thus motherboards - grounded, and wouldn't any onboard voltage converter then also be designed to shunt power to ground? Beyond that, the only difference between this (12V out from PSU, various lower voltages generated on the motherboard) and current high-end PSUs (12V generated in the PSU, converted internally to 5V and 3.3V) is where conversion happens, and that one locks you into a given set of voltages while the other allows for whatever is needed, including ditching unnecessary voltages. IMO the latter is far superior.

> I think the best solution is the return of the slot processor. An AIB gets power directly from the PSU at a given voltage and handles the power delivery to the socket. Part of the PSU, therefore, becomes part of the motherboard but still allowing for replacement and higher quality control of defective part. This also allows any motherboard to be adjusted according to the power demands of the processor installed. Graphics cards pretty much already do this. The same concept should apply to CPUs.
>
> Slotting CPU also gives it its own thermal domain by nature. The problem is the DIMMs really need to be on the AIB too because of the sheer number of electrical connections they require.

I don't entirely disagree here, but it would be very very inefficient in terms of space usage (the CPU+VRM+RAM AIC would need to be close to the size of an ITX board, and you'd lose a lot of freedom in terms of putting components where they fit best) and you'd introduce an extra connector in the PCIe signal path while also increasing its length. Cooler compatibility with a slot-in card would also be problematic, though a lot of this could be solved by using a parallel rather than perpendicular daughter board (stacked boards are space efficient and you'd avoid the problem of having tower coolers hanging off a 90-degree angled AIC and colliding with the board below). You'd still have a lot of issues with I/O, though, and would likely drive up prices due to needing more external controllers (or needing a bunch of pins for all the internal controllers on a modern CPU).

> Increases the cost and complexity of motherboards.

Nowhere near as much as high bandwidth buses like DDR5 or PCIe 4.0/5.0. In comparison the cost addition would be negligible, and the total cost might even drop due to removing unnecessary components. This should make PSUs significantly cheaper and more efficient at the same time.

> TL;DR: This idea is great for trying to establish a power supply standard for SFF computers but it's not the best path forward for replacing ATX in regards to power supply.

Have to disagree with you here. The ATX PSU standard stems from a time when DC-DC voltage conversion wasn't as trivial and efficient as it is today and the PSU thus created three major voltages directly from AC. The majority of desktop PCs today are also some form of SFF (as in "not an ATX mid-tower or bigger"). And the brilliance of simplifying the PSU like this is that one size fits all - if you have a huge PC with lots of weird AICs needing weird voltages you can convert them all from 12V, but you don't need to waste space on those voltage converters unless you actually need them. On the other hand a design trying to be everything for everyone - like the current ATX standard - will inevitably be more than what's necessary for the vast majority of use cases.

FordGT90Concept

"I go fast!1!11!1!"

- Joined: Oct 13, 2008
- Messages: 26,259 (4.63/day)
- Location: IA, USA


> ...but we're talking about a fundamental design change here. Don't you think good OCP would be a desirable feature for motherboards featuring these onboard voltage regulators?

You know they won't. Cost pressures dictate that less is more (profit). PSU manufacturers differentiate themselves through efficiency and protection. Motherboard manufacturers differentiate themselves through features (e.g. stereo versus 8-channel audio chips). Electrical protection isn't going to rate highly among the feature list.

> Beyond that, the only difference between this (12V out from PSU, various lower voltages generated on the motherboard) and current high-end PSUs (12V generated in the PSU, converted internally to 5V and 3.3V) is where conversion happens, and that one locks you into a given set of voltages while the other allows for whatever is needed, including ditching unnecessary voltages. IMO the latter is far superior.

Motherboards don't even try to reach 94% efficiency in their designs. PSUs do to get 80+ Titanium certification.

> I don't entirely disagree here, but it would be very very inefficient in terms of space usage (the CPU+VRM+RAM AIC would need to be close to the size of an ITX board, and you'd lose a lot of freedom in terms of putting components where they fit best) and you'd introduce an extra connector in the PCIe signal path while also increasing its length. Cooler compatibility with a slot-in card would also be problematic, though a lot of this could be solved by using a parallel rather than perpendicular daughter board (stacked boards are space efficient and you'd avoid the problem of having tower coolers hanging off a 90-degree angled AIC and colliding with the board below). You'd still have a lot of issues with I/O, though, and would likely drive up prices due to needing more external controllers (or needing a bunch of pins for all the internal controllers on a modern CPU).

It would require a new, standardized northbridge bus and connector. Perhaps something Thunderbolt-esque.

> The majority of desktop PCs today are also some form of SFF (as in "not an ATX mid-tower or bigger"). And the brilliance of simplifying the PSU like this is that one size fits all...

Except design pressures have led to a custom PSU for every single one of these SFF applications. I don't think OEMs have any interest in a new PSU design. Hell, most have even left ATX/BTX behind in their bigger offerings. Why? Because, like Apple, they'd prefer you come crawling back to them to support the hardware. They make a lot of bank on servicing contracts.

For that reason, I don't see anything changing. FSP doesn't have enough market presence to build a coalition for a new standard. This will likely be a one-off experiment and everyone will go back to custom.

> ...if you have a huge PC with lots of weird AICs needing weird voltages you can convert them all from 12V, but you don't need to waste space on those voltage converters unless you actually need them.

Look at the server board example given above--how dense it is with ICs:

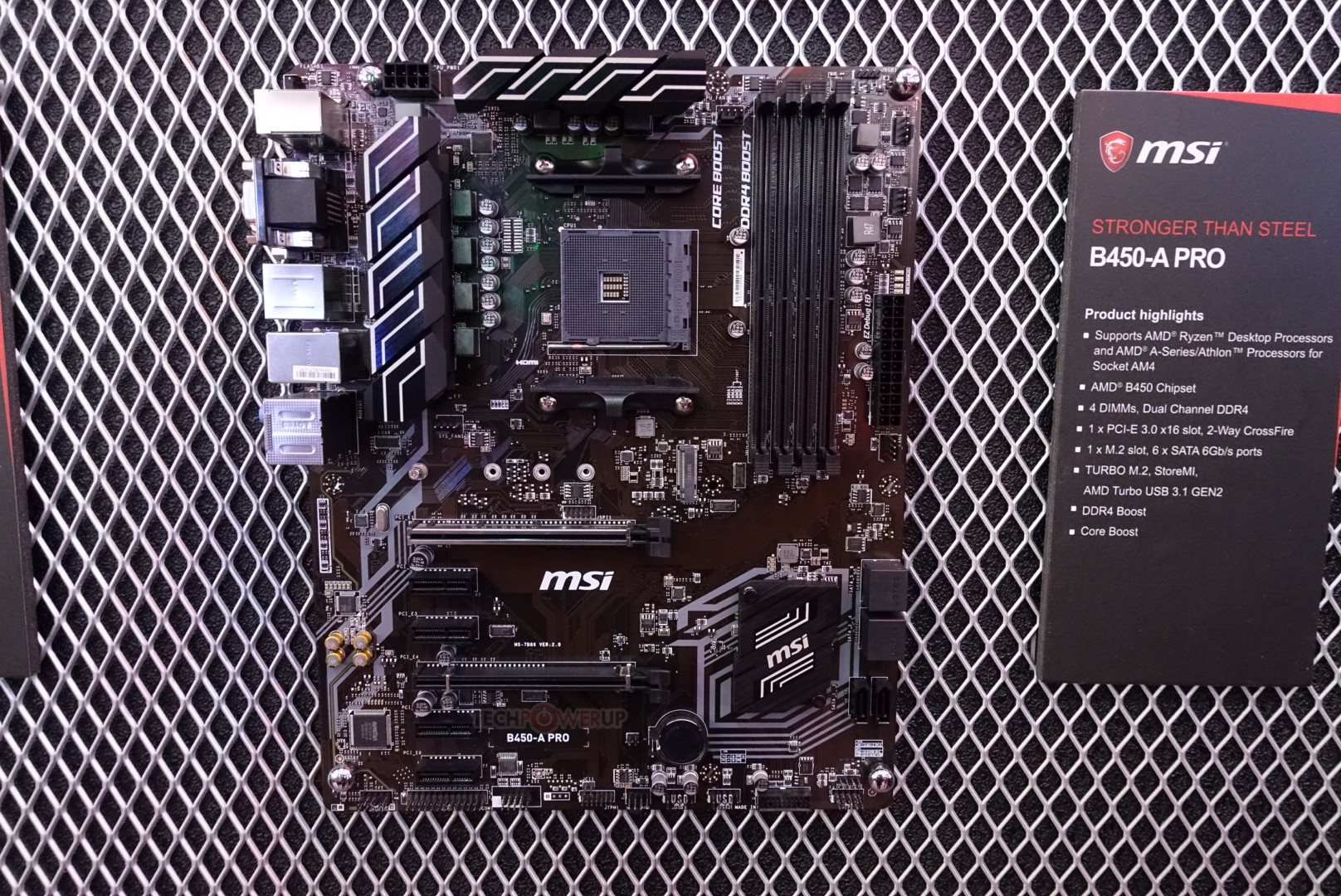

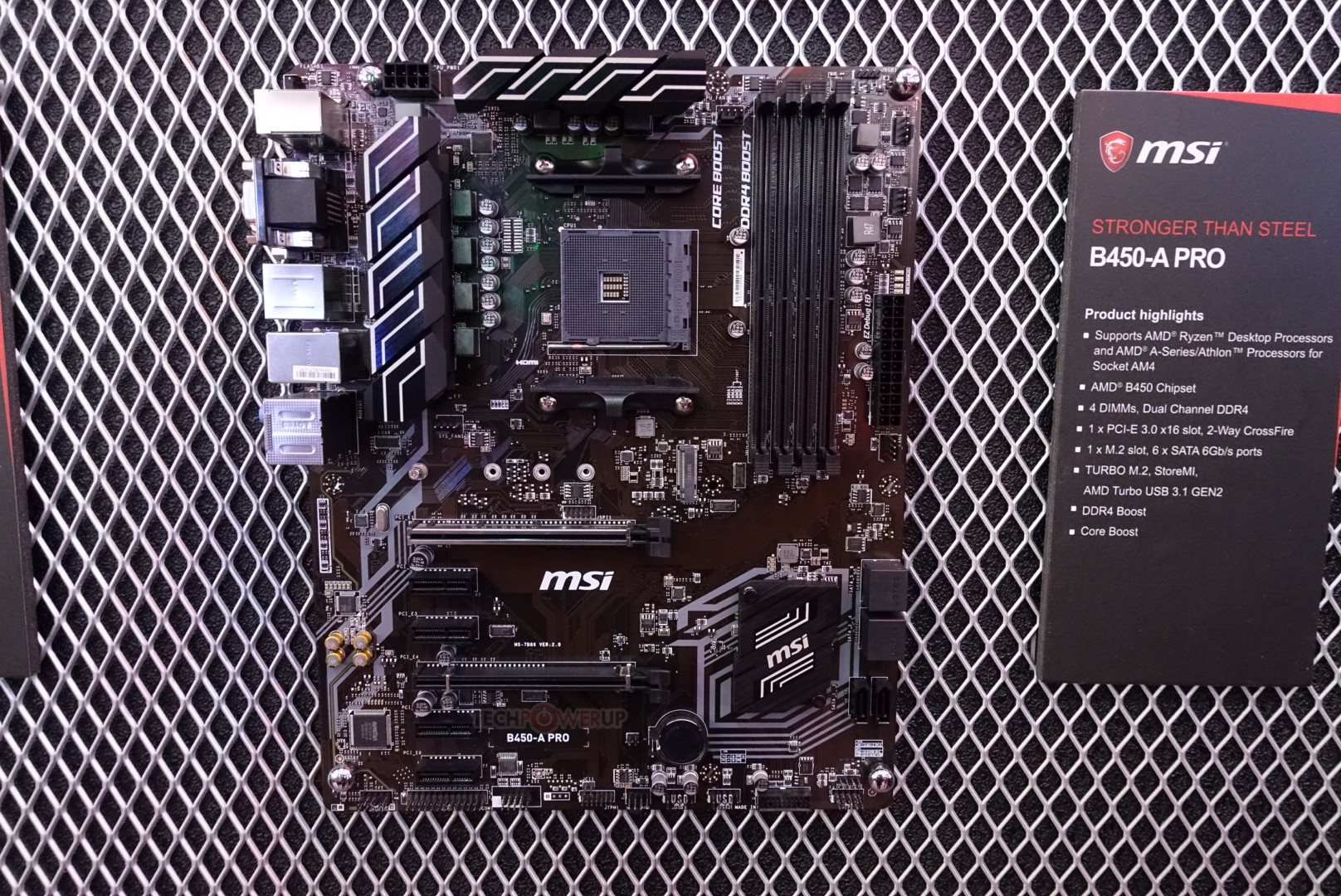

Compare that to a modern ATX motherboard--it strikes me as being barren:

Even when you shrink it down to ITX, it still has large swaths of the PCB that are barren:

...that's because a lot of those circuits are in the power supply.

TL;DR: FSP is advocating for lower PSU costs and much higher motherboard design and manufacturing costs. I'm not okay with that, especially when PSUs don't really become functionally obsolete but motherboards do.


- Joined: May 2, 2017
- Messages: 7,762 (3.04/day)
- Location: Back in Norway

| System Name | Hotbox |
|---|---|
| Processor | AMD Ryzen 7 5800X, 110/95/110, PBO +150Mhz, CO -7,-7,-20(x6), |
| Motherboard | ASRock Phantom Gaming B550 ITX/ax |
| Cooling | LOBO + Laing DDC 1T Plus PWM + Corsair XR5 280mm + 2x Arctic P14 |
| Memory | 32GB G.Skill FlareX 3200c14 @3800c15 |
| Video Card(s) | PowerColor Radeon 6900XT Liquid Devil Ultimate, UC@2250MHz max @~200W |
| Storage | 2TB Adata SX8200 Pro |
| Display(s) | Dell U2711 main, AOC 24P2C secondary |
| Case | SSUPD Meshlicious |
| Audio Device(s) | Optoma Nuforce μDAC 3 |
| Power Supply | Corsair SF750 Platinum |
| Mouse | Logitech G603 |
| Keyboard | Keychron K3/Cooler Master MasterKeys Pro M w/DSA profile caps |
| Software | Windows 10 Pro |

> For that reason, I don't see anything changing. FSP doesn't have enough market presence to build a coalition for a new standard. This will likely be a one-off experiment and everyone will go back to custom.

You need to read news items more carefully:

> btarunr said: This PSU is being designed for Intel's upcoming PC spec that does away with the 5 V, 5 Vsb, and 3.3 V power domains altogether.

This is not FSP's effort. It's a preview of an update or successor to the ATX standard, it would seem (though it might just be for their new modular NUCs).

> You know they won't. Cost pressures dictate that less is more (profit). PSU manufacturers differentiate themselves through efficiency and protection. Motherboard manufacturers differentiate themselves through features (e.g. stereo versus 8-channel audio chips). Electrical protection isn't going to rate highly among the feature list.

Sure, but even midrange boards these days advertise how many VRM phases they have, so it's not like relatively obscure specs mattering only to a handful don't turn into hyped marketing bullet points. Also, don't you think a standard requiring this would mandate on-board protections? I seriously doubt such a standard would be allowed to enter the more tightly regulated markets of the world (the EU especially) given how much effort is put into all other kinds of protections against getting cancer/burning your house down/cooking the planet. There will no doubt be a minimum requirement for OCP as well as a spec requirement for safe ground shunting in case of failure.

> Motherboards don't even try to reach 94% efficiency in their designs. PSUs do, to get 80+ Titanium certification.

There's no standard for VRM efficiency, so it's hard to market. But high-end boards do tend to be very efficient. Besides, a voltage regulator suited to the load at hand and closer to the load will always be less lossy than an oversized one placed further away. Moving these out of the PSU inherently increases efficiency through eliminating cable losses, and while there's a balance in terms of the number of converters (many discrete ones will mostly be less efficient than one shared), this will generally suit modern loads better, particularly as these voltages fall further out of use. I'd wager most motherboards would ditch 3.3V entirely, which is an indisputable efficiency gain compared to even an idle 3.3V circuit in the PSU.
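To put a rough number on the cable-loss part of that argument, here is a minimal sketch of plain I²R loss for the same load fed over a cable at 3.3V versus 12V. The 10 W load and 0.02 ohm cable resistance are made-up illustrative values, not measurements of any real PSU or board, and the sketch deliberately ignores converter efficiency on either end.

```python
def cable_loss_watts(load_watts, rail_volts, cable_ohms):
    """Resistive (I^2 * R) loss in a cable feeding a load at the given rail voltage."""
    current_amps = load_watts / rail_volts
    return current_amps ** 2 * cable_ohms


# Assumed, illustrative values only (not taken from any spec or measurement).
LOAD_W = 10.0       # hypothetical low-voltage load
CABLE_OHMS = 0.02   # hypothetical round-trip cable + connector resistance

loss_3v3 = cable_loss_watts(LOAD_W, 3.3, CABLE_OHMS)
loss_12v = cable_loss_watts(LOAD_W, 12.0, CABLE_OHMS)

print(f"Loss when fed at 3.3V: {loss_3v3:.4f} W")
print(f"Loss when fed at 12V:  {loss_12v:.4f} W")
print(f"Ratio: {loss_3v3 / loss_12v:.1f}x")
```

For a fixed load and cable, the loss scales with the inverse square of the rail voltage, so the ratio depends only on the two voltages and not on the assumed load or resistance. Whether that outweighs the efficiency of the on-board converter itself is a separate question this sketch doesn't answer.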

> It would require a new, standardized northbridge bus and connector. Perhaps something Thunderbolt-esque.

That's not a terrible idea. Sounds very expensive, though. It would for sure be a throwback to the days of the chipset of your motherboard really making a big difference for its featureset.

> Except design pressures have led to a custom PSU for every single one of these SFF applications. I don't think OEMs have any interest in a new PSU design. Hell, most have even left ATX/BTX behind in their bigger offerings. Why? Because, like Apple, they'd prefer you come crawling back to them to support the hardware. They make a lot of bank on servicing contracts.

You're confusing physical form factors with power delivery standards here. We're discussing the latter mainly, not the former. At least I am. I don't see much of an issue with custom form factor PSUs for OEM SFF PCs, but I do take issue when they are no longer ATX-compatible in terms of power. Switching to only 12V, on the other hand, would open the doors to the world of small, efficient AC-12VDC PSUs, of which there are many great ones in the 100-400W range. Adding 12VSB should be possible through a simple in-line circuit board, which would make third-party PSU replacements for OEM SFF desktops relatively easy, unlike now.

> Look at the server board example given above--how dense it is with ICs:
>
> Compare that to a modern ATX motherboard--it strikes me as being barren:
>
> Even when you shrink it down to ITX, it still has large swaths of the PCB that are barren:
>
> ...that's because a lot of those circuits are in the power supply.

I guess good job at finding two low-end boards with relatively sparse featuresets? Look at an ITX X570 or Z390 board and come back. Also, as far as I can tell most of the power delivery circuitry on that ASRock Rack board is above the RAM slots, except for the CPU VRM and some around the southbridge. Then there are two Ethernet controllers, a management controller, and a heap of headers taking up a lot of board space that would be free if not used for server-specific use cases. Then there's the fact that server boards are overbuilt, mostly built to run at 100% load continuously (which consumer boards are definitely not, nor should they be), and generally have prices including years of enterprise support etc.

For comparison, here's an ASRock X570 Phantom Gaming ITX/TB3 without its heatsinks and other cosmetics:

> TL;DR: FSP is advocating for lower PSU costs and much higher motherboard design and manufacturing costs. I'm not okay with that, especially when PSUs don't really become functionally obsolete but motherboards do.

No. FSP is showing off a PSU based on an updated Intel power delivery standard - possibly a successor to the ATX power delivery standard? - which aligns much better with current hardware and hardware design trends than the old ATX standard and its legacy featureset and relatively low power delivery capability.