Think about system memory and the latency vs. bandwidth trade-off you get from tightening timings vs. scaling frequency. I think that's going to come into play quite a bit with the Infinity Cache situation; it has to. I believe AMD tried to get the design well balanced and efficient, with minimal oddball compromises or imbalances. We can already glean a fair amount from what AMD has shown, but we'll know more for certain with further data, naturally. As I said, I'd like to see the 1080p results. What you're saying is fair, though: we need to know more about Ampere and RDNA2 before we can conclude exactly which parts of each design lead to which performance differences, and how that impact changes with resolution. It's safe to say, though, that there appear to be sweeping design differences between RDNA2 and Ampere when it comes to resolution scaling.
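To put rough numbers on the latency vs. bandwidth idea, here's a minimal sketch of how a big on-die cache could shift things as resolution changes. Everything in it is an assumption for illustration: the function names, the hit rates per resolution, and the bandwidth and latency figures are made up, not AMD data. It just blends cache and DRAM by the fraction of traffic each one serves.

```python
def effective_bandwidth_gbs(hit_rate, cache_bw_gbs, dram_bw_gbs):
    """Effective bandwidth when a fraction `hit_rate` of traffic is served by cache.

    Time to move one GB is the weighted sum of the time each path needs,
    so the result is a weighted harmonic mean of the two bandwidths.
    """
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be between 0 and 1")
    seconds_per_gb = hit_rate / cache_bw_gbs + (1.0 - hit_rate) / dram_bw_gbs
    return 1.0 / seconds_per_gb


def average_latency_ns(hit_rate, cache_latency_ns, dram_latency_ns):
    """Average access latency: hits pay cache latency, misses pay DRAM latency."""
    return hit_rate * cache_latency_ns + (1.0 - hit_rate) * dram_latency_ns


# Hypothetical hit rates: the assumption is that a larger framebuffer
# (higher resolution) fits less well in a fixed-size cache.
HYPOTHETICAL_HIT_RATES = {"1080p": 0.75, "1440p": 0.65, "4K": 0.55}

if __name__ == "__main__":
    for resolution, hit_rate in HYPOTHETICAL_HIT_RATES.items():
        bw = effective_bandwidth_gbs(hit_rate, cache_bw_gbs=1600.0, dram_bw_gbs=512.0)
        lat = average_latency_ns(hit_rate, cache_latency_ns=30.0, dram_latency_ns=120.0)
        print(f"{resolution}: ~{bw:.0f} GB/s effective, ~{lat:.0f} ns average latency")
```

If the hit rate really does fall as resolution goes up, both numbers get worse at 4K than at 1080p, which is the kind of resolution-dependent behavior worth checking against real benchmarks.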

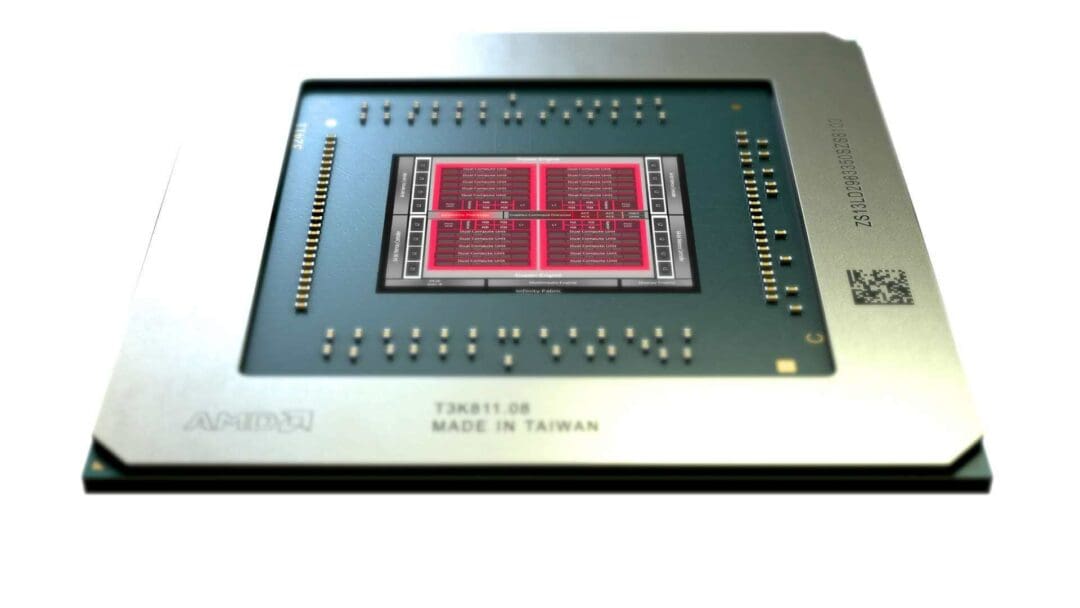

If PCIe 4.0 doubled the bandwidth and cut the I/O bottleneck in half, and this Infinity Cache is doing something similar, that's a big deal for CrossFire. Mantle/Vulkan, DX12, VRS, the DirectStorage API, Infinity Fabric, Infinity Cache, PCIe 4.0 and other things all make mGPU easier; if anything, the only real barrier is developers.
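For reference, the PCIe doubling is easy to check on paper. A quick sketch, using the published per-lane rates (8 GT/s for 3.0, 16 GT/s for 4.0, both with 128b/130b encoding); the function names and the 256 MB transfer size are just my own illustration, and these are theoretical per-direction peaks, not measured throughput:

```python
# Per-lane transfer rate in GT/s and encoding efficiency for each generation.
PCIE_GEN = {
    3: (8.0, 128.0 / 130.0),
    4: (16.0, 128.0 / 130.0),
}


def pcie_bandwidth_gbs(gen, lanes=16):
    """Theoretical one-direction bandwidth in GB/s for a PCIe link."""
    gt_per_s, efficiency = PCIE_GEN[gen]
    # One transfer carries one bit per lane; divide by 8 for bytes.
    return gt_per_s * efficiency / 8.0 * lanes


def transfer_time_ms(size_mb, gen, lanes=16):
    """Best-case time to move `size_mb` megabytes (1 GB = 1000 MB) over the link."""
    return size_mb / pcie_bandwidth_gbs(gen, lanes)


if __name__ == "__main__":
    for gen in (3, 4):
        bw = pcie_bandwidth_gbs(gen)
        t = transfer_time_ms(256.0, gen)  # 256 MB is an arbitrary example size
        print(f"PCIe {gen}.0 x16: {bw:.2f} GB/s, 256 MB in {t:.2f} ms")
```

So an x16 slot goes from roughly 15.75 GB/s to roughly 31.5 GB/s per direction, and the best-case time for any inter-GPU copy is cut in half.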

I feel like AMD should just do a quincunx socket setup. It sounds a bit crazy, but they could have 4 APUs and a central processor, with Infinity Fabric and Infinity Cache between the 4 APUs and the central processor. The central processor would get shared quad-channel memory, with shared dual-channel access to it from the surrounding APUs. The APUs would have 2 cores each to communicate with the adjacent APUs, and the rest could be GPU design. The central processor would probably be a pure CPU design, high IPC and high frequency, perhaps a big.LITTLE design: a beastly single core at the center as the heart of the unit, and 8 smaller surrounding physical cores handling odds and ends. There could be a lot of on-the-fly compression/decompression involved as well to maximize bandwidth and increase I/O. The chipset would be gone entirely, integrated into the CPU design through the socketed chips. You'd get lots of bandwidth and processing, single-core performance along with multi-core performance, load balancing, heat distribution, and quick, efficient data transfer between the different parts. It's a fortress of sorts, but it could probably fit within an ATX design reasonably well. You might start out with dual-channel/quad-channel memory and two socketed chips, the socketed heart/brain along with one APU, and build it up down the road for scalable performance improvements. They could integrate FPGA tech into the equation too, but that's another matter, a cyborg matter we probably shouldn't speak of right now, though the cyborg is coming.

If I'm not mistaken, RDNA transitioned to some form of twin-CU design, with work-group task scheduling that allows a kind of serial and/or parallel performance flexibility within each pair. I could be wrong in my interpretation, but I think it lets the two CUs double down on a single task, or split up and each handle a smaller task within the same twin-CU grouping. Basically a work-smarter-not-harder hardware design technique. Granular is where it's at: more neurons. I think ideally you want a brute-force single core that occupies the most die space, then scale per-core size down by something like 50% each time you double the core count. So you'd have 4 chips with 1c/2c/4c/8c. Performance per core would scale downward as core count increases, but efficiency per core would increase, and provided the core can finish the task quickly enough, it saves power even if it doesn't finish as fast, which it doesn't always need to anyway. The 4c/8c chips wouldn't be ideal for gaming frame rates overall, but they would probably be good for handling and calculating different AI within a game as opposed to pure rendering. AI, animations and the like generally don't have to be as quick and efficient as scene rendering; it's just not as vital. I wonder if variable rate shading will help make better use of core assignments across more cores. In theory it should, if they are assignable. I think this also encapsulates the gist of it somewhat.
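A toy sketch of that 1c/2c/4c/8c idea, just to show the shape of the trade-off. The big assumption (mine, not anything AMD has published) is that per-core power grows faster than per-core performance, modeled here as power = performance ** power_exponent with a hypothetical exponent of 2. All names and numbers are illustrative.

```python
def core_tiers(num_tiers=4):
    """Return (core_count, per_core_performance) per chip tier.

    Each tier doubles the core count and halves per-core performance,
    relative to a single big core with performance 1.0.
    """
    return [(2 ** i, 1.0 / 2 ** i) for i in range(num_tiers)]


def task_time(per_core_perf, work=1.0):
    """Time for one core to finish a single-threaded task of size `work`."""
    return work / per_core_perf


def task_energy(per_core_perf, work=1.0, power_exponent=2.0):
    """Energy for that task, assuming power = performance ** power_exponent.

    The exponent is a hypothetical stand-in for the idea that slower,
    smaller cores are more efficient per unit of work.
    """
    power = per_core_perf ** power_exponent
    return power * task_time(per_core_perf, work)


if __name__ == "__main__":
    for cores, perf in core_tiers():
        print(f"{cores}c chip: per-core perf {perf:.3f}, "
              f"task time {task_time(perf):.1f}, task energy {task_energy(perf):.3f}")
```

Under that assumption the 8c tier takes 8x as long on a single task but uses an eighth of the energy, which is fine for work like game AI that doesn't need to land within a frame time.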

Prior to this, AMD struggled with instruction pipeline functions. Since then, they have streamlined the pipeline operation flow, dropped instruction latency to 1 cycle and started implementing dual-issue operations. Otherwise, I don't know how they could increase shader speed 7.9-fold with simple iterations on the same architecture.

And remember, this is only possible because they had previously experimented with it; otherwise there would be no chance they'd know first hand how much power budget it would cost them. SRAM has a narrow efficiency window.

There was a piece a while back comparing AMD's and Intel's cell-to-transistor ratios, the summary being that AMD had integrated units with higher and more efficient transistor counts. All because of available die space.
