So what we have learned from the 5000-series CPU and 6000-series GPU launches is that:

- FHD results in CPU benchmarks are not relevant any more (in fact they weren't really relevant before either, but for some reason they mattered to the blue team)

- NV cards can be undervolted to get better power consumption

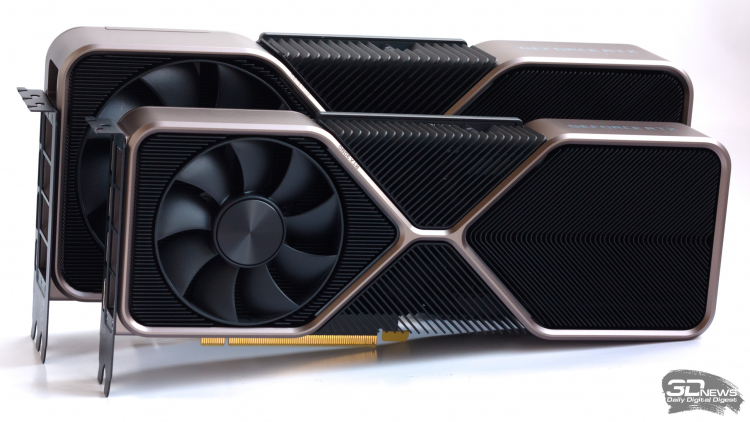

- NV fans cling to the very last point they have left (RT, which only a few games support), now that a rival's first generation with RT support can match the green team's last-gen (first-gen RT) flagship

Love it, really.