You're assuming that data reported as allocated to VRAM is actually in active use (or inevitably will be), which isn't the case for any game that streams data in any way. Those asset loading techniques always pre-cache aggressively and thus end up allocating a lot more VRAM than is actually made use of as gameplay progresses - especially as they for the most part still assume HDD loading speeds (i.e. a maximum of ~200MB/s, likely much less, of compressed data). Cutting this allocation means you either need better predictions (nearly impossible) or faster ways of streaming in data. The latter is what DirectStorage will do, but also what developers themselves can do if they start actually designing for SSD loading speeds. Of course they shouldn't be riding the line on necessary textures, as that will always lead to judder when something is mispredicted, but there are a lot of improvements that can be made.
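To put rough numbers on why slow storage forces aggressive pre-caching, here's a back-of-the-envelope sketch. The ~200MB/s HDD figure is the upper bound mentioned above; the SSD throughput and the two-second lookahead window are purely hypothetical values picked for illustration, not measurements from any real engine or drive.

```python
def streamable_mb(throughput_mb_s: float, window_s: float) -> float:
    """Amount of data (in MB) that can be read from storage within the window."""
    return throughput_mb_s * window_s


HDD_MB_S = 200.0   # upper bound for HDD loading mentioned above
SSD_MB_S = 2000.0  # hypothetical SSD throughput, for illustration only
WINDOW_S = 2.0     # hypothetical lookahead window the engine has to cover

hdd = streamable_mb(HDD_MB_S, WINDOW_S)
ssd = streamable_mb(SSD_MB_S, WINDOW_S)

# Whatever cannot be streamed in within the window has to sit in VRAM already.
print(f"HDD: {hdd:.0f} MB, SSD: {ssd:.0f} MB per {WINDOW_S:.0f}s window")
```

Under those assumed numbers, an engine targeting the HDD has to keep far more data resident "just in case", since it can only pull in a fraction of what the faster drive could deliver in the same window.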

Even without changes in game code and engines, this still means that with your current <1GB of "free" VRAM, you could likely increase the actual VRAM usage of any game quite significantly without seeing any effect on performance. This is easily illustrated by how many games will show astronomical VRAM "use" figures on GPUs with tons of VRAM, yet show no dramatic performance deficiencies on GPUs with much less VRAM (even from the same vendor).

One sample is of course not generally applicable, but

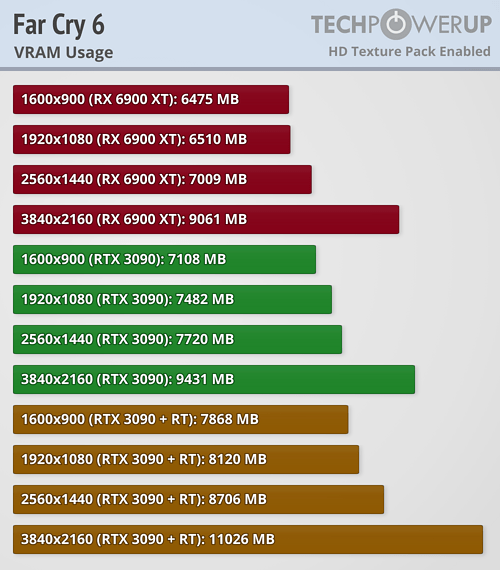

the recent Far Cry 6 performance benchmark is a decent example of this is reality:

Over 9GB of VRAM usage on cards from both vendors at 2160p. Yet when we look at 2160p performance?

No visible correlation between VRAM amount and performance for the vast majority of GPUs. The 8GB 6600 XT performs the same as the 12GB 3060. The 12GB 6700 XT is soundly beaten by the 8GB 3070. There are three GPUs that show uncharacteristic performance regressions compared to previously tested games, and all at 2160p: the 4GB 5500 XT, the 6GB 1660 Ti, and the 6GB 5600 XT. The 6GB cards show much smaller drops than the 4GB card, but the drops are still clearly noticeable. So, for Far Cry 6, while reported VRAM usage at 2160p is in the 9-10GB range, actual VRAM usage is somewhere above 6GB and below 8GB.
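The bracketing logic here can be written out as a quick sketch. The card names, VRAM sizes and regress/no-regress flags are just the ones listed above; the `Gpu` type and `bracket_actual_usage` helper are made-up names for illustration, and the result is only as good as the single benchmark it's based on.

```python
from typing import NamedTuple


class Gpu(NamedTuple):
    name: str
    vram_gb: int
    regresses: bool  # True if it shows an uncharacteristic drop at 2160p


def bracket_actual_usage(gpus):
    """Return (lower, upper) bounds in GB on what the game really needs.

    lower: the largest VRAM size that still runs short (need is above this).
    upper: the smallest VRAM size that copes fine (need is at most this).
    """
    lower = max(g.vram_gb for g in gpus if g.regresses)
    upper = min(g.vram_gb for g in gpus if not g.regresses)
    return lower, upper


gpus = [
    Gpu("5500 XT", 4, True),
    Gpu("1660 Ti", 6, True),
    Gpu("5600 XT", 6, True),
    Gpu("6600 XT", 8, False),
    Gpu("3070", 8, False),
    Gpu("3060", 12, False),
    Gpu("6700 XT", 12, False),
]

lower, upper = bracket_actual_usage(gpus)
print(f"actual need: more than {lower}GB, no more than {upper}GB")
```

Note that the reported 9-10GB figure never enters the calculation; only which cards actually fall over does.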

This could of course be interpreted as 8GB of VRAM becoming too little in the near future, but a) we don't know where in the 6-8GB range that usage sits; b) there are technologies incoming that will alleviate this; and c) this is only at 2160p, which these GPUs can barely handle at these settings even today. Thus, it's far more likely for compute to be a bottleneck in the future than VRAM, outside of a handful of poorly balanced SKUs - and there is no indication of the 3060 Ti being one of those.

And we're still just a year into the current-gen consoles; it'll increase as the years go on. Why are you still in denial...
