Countless leaks have also been wrong.

Smartphone leaks have become so common in recent years that the big players in the industry, including Apple and Samsung, rarely have anything to actually …

bgr.com

Chinese poster Article here: https://hothardware.com/news/geforce-rtx-3080-ti-20gb-specs-benchmarks-leaked Another thread here over at PCMag. https://www.pcgamer.com/nvidia-rtx-3080-ti-specifications-alleged/ Although Toms's is claiming its fake...

forums.anandtech.com

Don't let survivorship bias cloud your judgement.

Remember all the hay made about RDNA3, all the performance claims and the huge increases in RT performance?

None of it was true. Core for core, clock for clock, RDNA3 is no faster than RDNA2. Same goes for Ampere vs. Ada. AMD claimed their new shader design would boost performance. It went nowhere.

There is no reason to believe that RDNA4 will be any different.

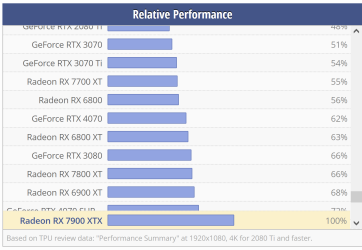

On performance: the 7900 GRE keeps coming up in conversation about the 9070 series, and given how AMD marketing has overestimated performance on their cards for the last decade, it's safe to assume the 7900 GRE was their target. If we go by the leaks you swear up and down are legit, the 9070 XT is shaping up to have 4,096 cores, with 64 RT cores and 96 ROPs.

The 7900 GRE has 5,120 cores, 80 RT cores and 160 ROPs. The 7900 XT has 5,376, 84, and 192, respectively.

You'd be putting a LOT of faith in that 2.97 GHz clock speed to make up such a gulf in hardware. There's an alleged 30% clock speed bump and a roughly 25% reduction in hardware compared to the 7900 XT. Somehow, the 7900 XTX doesn't look to be anywhere in sight.
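For anyone who wants to check the arithmetic, here is a rough sketch in Python using only the unit counts quoted above and the alleged 30% clock bump. The 9070 XT figures are leaked and unconfirmed, and the "units times clock" scaling in `naive_throughput_ratio` is a hypothetical first-order model I'm assuming for illustration. It ignores architecture changes, memory bandwidth, and everything else that decides real performance.

```python
# Unit counts as quoted in the post. The 9070 XT numbers are leaked, not confirmed.
SPECS = {
    "9070 XT (leaked)": {"cores": 4096, "rt_cores": 64, "rops": 96},
    "7900 GRE": {"cores": 5120, "rt_cores": 80, "rops": 160},
    "7900 XT": {"cores": 5376, "rt_cores": 84, "rops": 192},
}

# The post's "alleged 30% clock speed bump" relative to the 7900 XT.
ALLEGED_CLOCK_RATIO_VS_7900XT = 1.30


def unit_ratios(card, baseline):
    """Return the card/baseline ratio for each unit type."""
    return {k: SPECS[card][k] / SPECS[baseline][k] for k in SPECS[card]}


def naive_throughput_ratio(unit_ratio, clock_ratio):
    """Assumed first-order model: throughput scales with units x clock."""
    return unit_ratio * clock_ratio


if __name__ == "__main__":
    # Raw hardware ratios against both older cards.
    for baseline in ("7900 GRE", "7900 XT"):
        ratios = unit_ratios("9070 XT (leaked)", baseline)
        print(baseline, {k: round(v, 3) for k, v in ratios.items()})

    # Apply the alleged clock bump, which the post gives only vs. the 7900 XT.
    vs_xt = unit_ratios("9070 XT (leaked)", "7900 XT")
    for unit, ratio in vs_xt.items():
        scaled = naive_throughput_ratio(ratio, ALLEGED_CLOCK_RATIO_VS_7900XT)
        print(unit, round(scaled, 3))
```

Under that naive model, the leaked core and RT core counts times the alleged clock come out around 0.99 of a 7900 XT, while the ROPs sit at about 0.65. Treat it as a sanity check on the quoted numbers, not a performance prediction.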

People have, for over 15 years, hyped up AMD's performance only to be massively disappointed when the cards finally release. There's no evidence that AMD has changed their ways here.

Here's the thing: if AMD has such efficiency gains, performance gains, etc. in store... why be so coy about the cards? Why not show them off as intended back in January?

The only reason to do this is if the performance leaks are wildly inaccurate. Otherwise, this would be a great opportunity to grab Nvidia by the balls with the disaster that is Blackwell.