TheLostSwede

News Editor

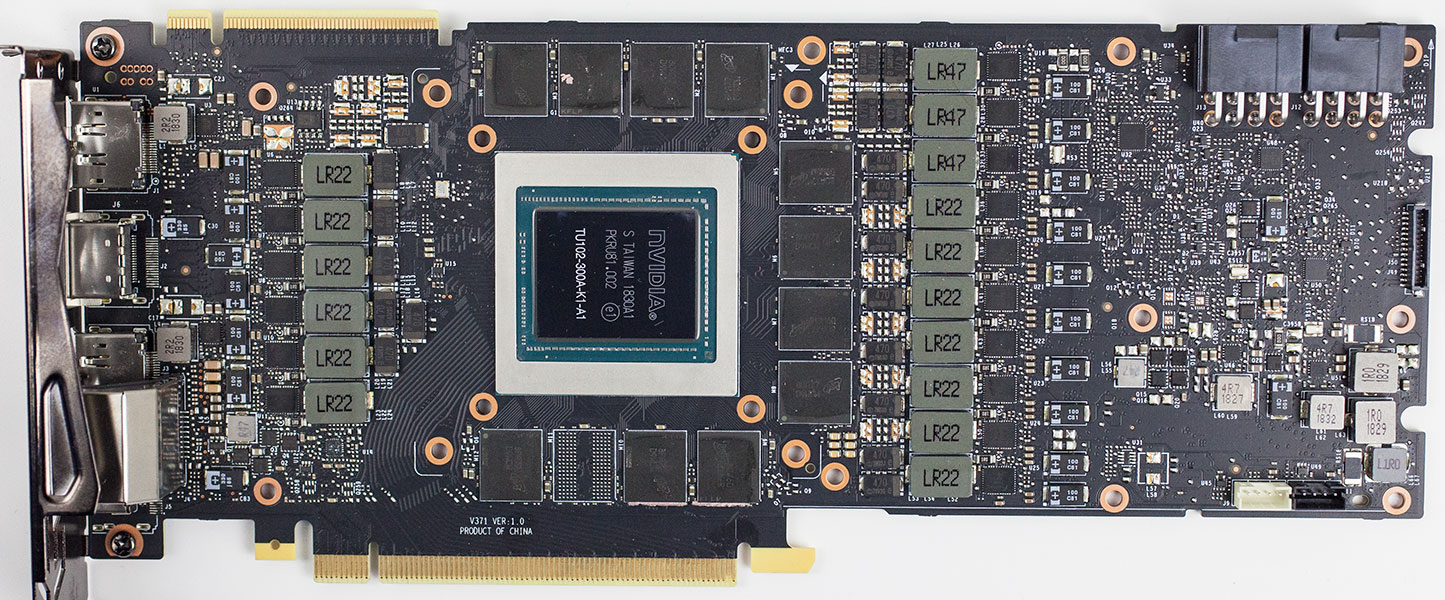

Looking at that first picture, what the hell was NVIDIA thinking?

As so often happens in this industry, they let the engineers do the thinking, but clearly didn't take the time to optimise the design.

It's also a rather power-hungry chip, so it needs good power delivery, which means a more expensive board.

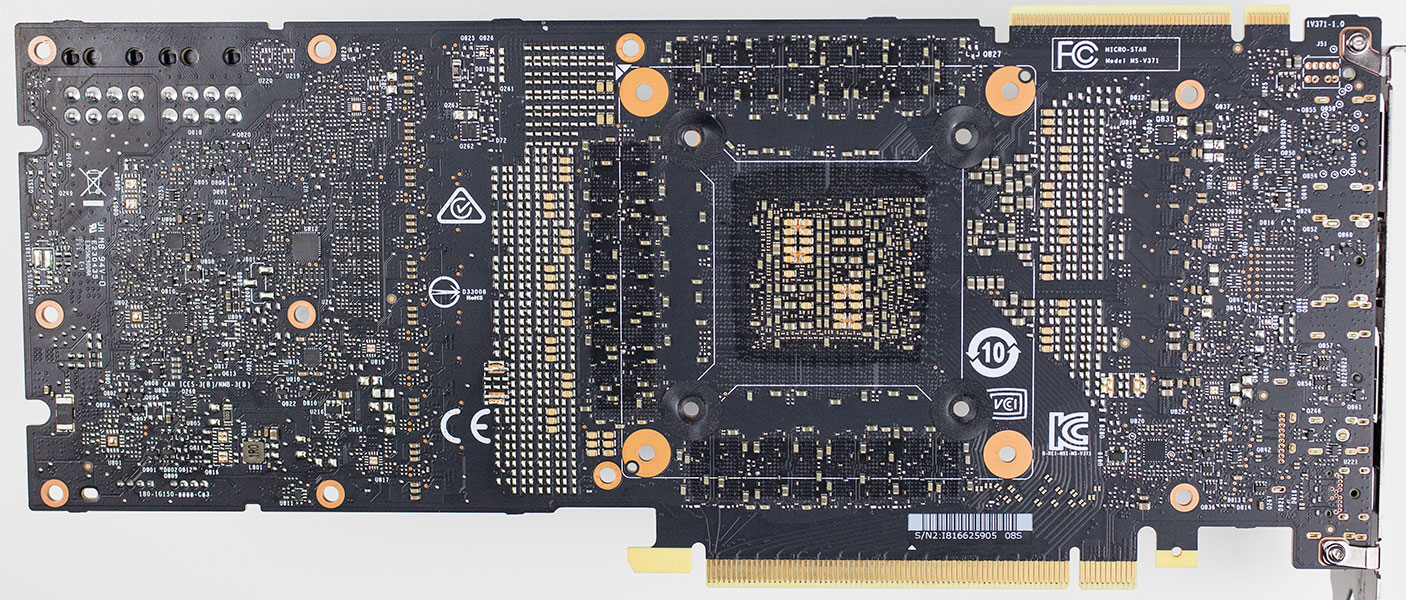

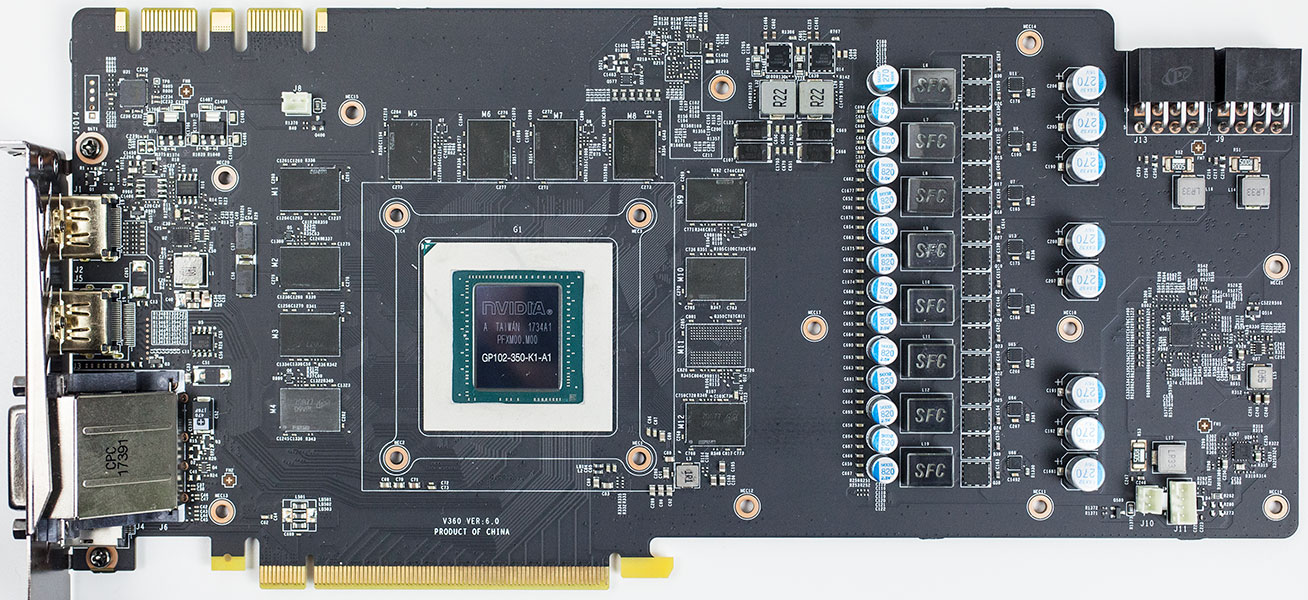

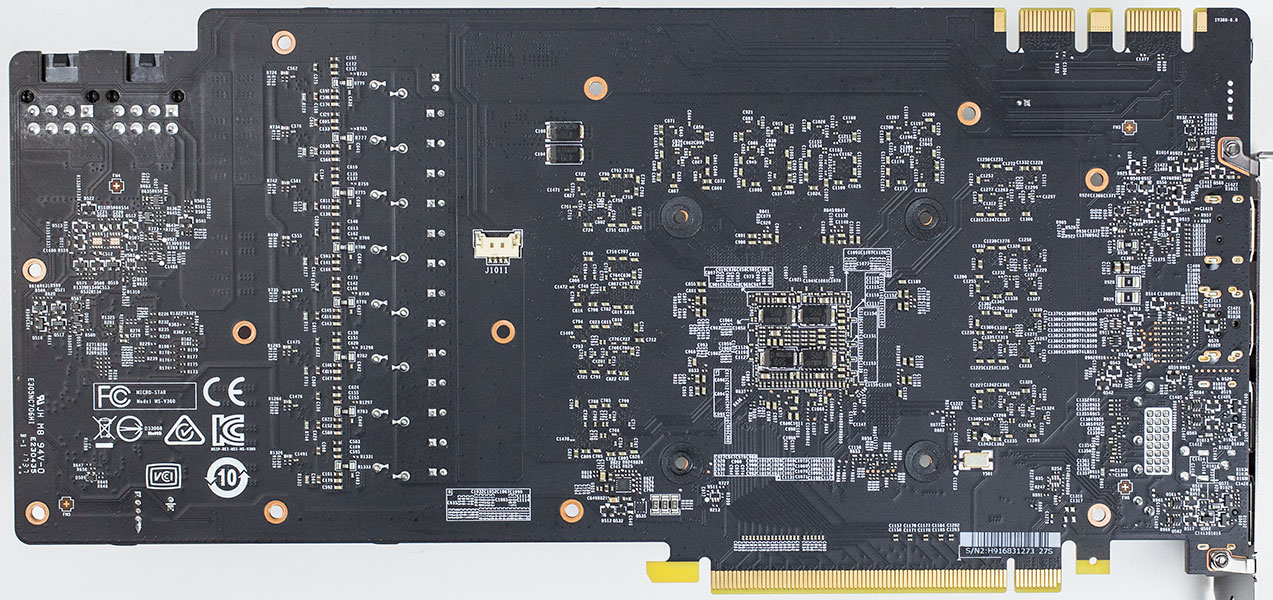

I did a rough count and it looks like the RTX 2080 Ti has about three times as many tantalum capacitors as the GTX 1080 Ti, both being MSI cards in this case.

Even the Titan X (Pascal) looks simple in comparison, which makes the RTX 2080 Ti look like a huge failure from a design standpoint.

I wouldn't be surprised if this was the most complex consumer card that NVIDIA has made to date, and I'm not talking about the GPU itself, but rather the PCB design.