And that is exactly why I post. Only when there's a bitter AMD fanboy that doesn't have a clue what he's saying.

As for your "that was my only statement", let me recap:

Sad reacts only, all those "RTX 3060 as fast as a 2080ti" seem out of this world right now.

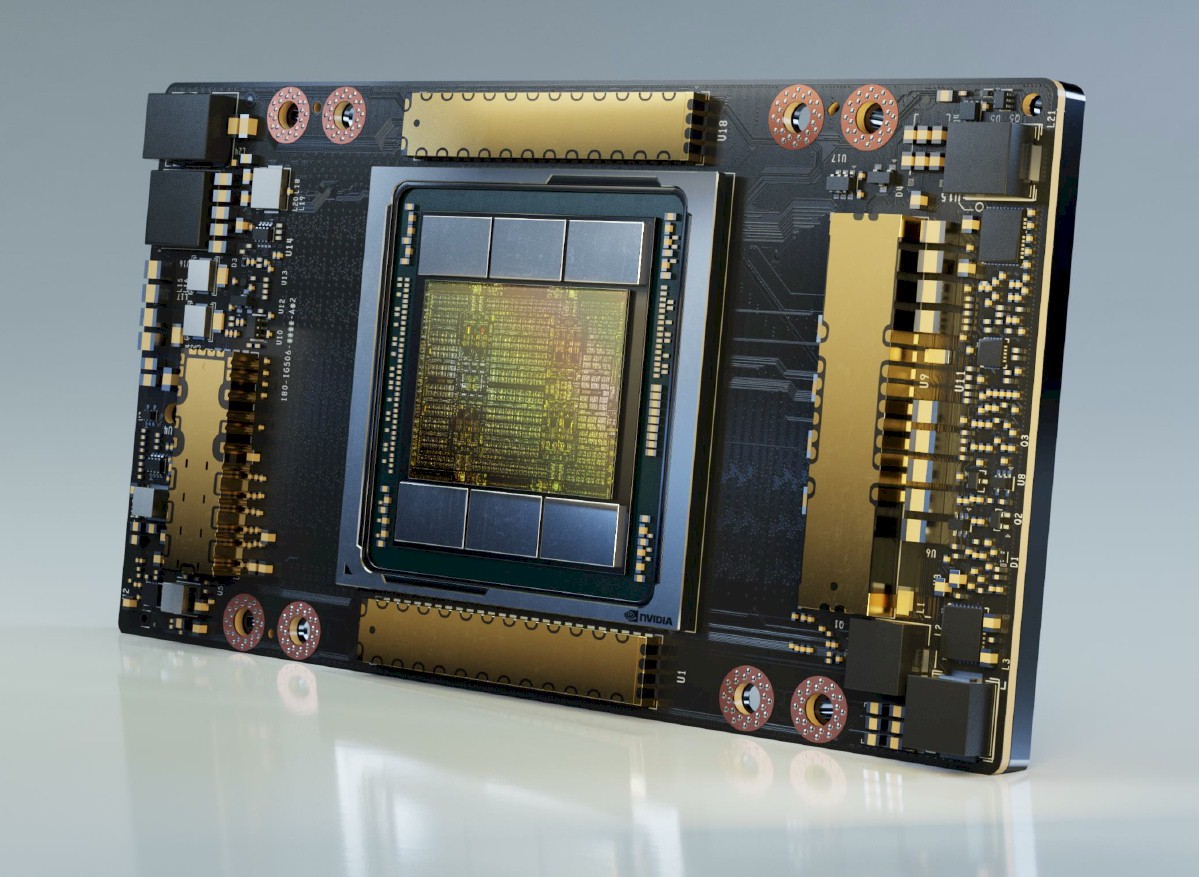

Here you're missing the fact that GA100 is focused entirely on AI (it even has it in the full name - Nvidia A100 Tensor Core GPU) and its performance has nothing to do with how games are going to perform on other Ampere chips.

But this one has an entire GPC disabled due to horrendous yields, I presume, and probably because it would throw even that eye watering 400W TDP out the window.

Here you fail to understand that large chips are actually designed with fabrication errors in mind from the start, and you miss that the 400W TDP still translates to a 55% increase in energy efficiency compared to Volta.

Comparing SM counts and power is a totally legit way of inferring efficiency, how else would you do it?

By actually measuring the performance and then dividing that by power consumption. As has been done here on TPU for years. But you're the expert. You tell the big boss here that all his reviews were wrong and that he should've inferred efficiency from SM counts and all those perf/power measurements were useless. Go ahead.

In other words, if, let's say, we have a GPU with N/2 shaders at 2 GHz, it will generally consume more power than a GPU with N shaders at 1 GHz.

Looking at Pascal vs Polaris/Vega/GCN in general - Pascal with much smaller chips and much higher frequencies did have lower power consumption.

Vega 64 balanced with standard BIOS @ 1274 MHz - 292W, 1080Ti standard @ 1481 MHz - 231W

Our AMD Radeon RX Vega 64 review confirms that the company achieved major performance improvements over their last-generation Polaris and Fiji cards: Vega is faster than the GTX 1080. We tested six different performance configurations of the Vega 64, with surprising results.

www.techpowerup.com

At the same time the 1080 Ti has 30% to 40% more performance. The 1080 Ti is a 471 mm² chip at 16nm, while Vega 64 is a 486 mm² chip at 14nm. AMD = larger, slower, more power hungry. And it's been the same story throughout the Polaris/Vega/Maxwell/Pascal/Turing generations. So I'm curious - what kind of data are you basing your statement on?
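To put a number on that, here is a rough sketch of the perf/power arithmetic using only the figures quoted above (292W vs 231W, and a 30% to 40% performance lead). Vega 64 performance is normalised to 1.0, which is my assumption for illustration, and the function names are made up for this example.

```python
# Board power figures as quoted in this post.
VEGA64_WATTS = 292.0
GTX1080TI_WATTS = 231.0


def perf_per_watt(relative_perf, watts):
    """Efficiency = measured performance divided by power draw."""
    return relative_perf / watts


def efficiency_ratio(perf_advantage):
    """How many times more efficient the 1080 Ti is than Vega 64.

    perf_advantage is the 1080 Ti's lead as a fraction (0.30 = +30%),
    with Vega 64 normalised to 1.0 (an assumption for illustration).
    """
    vega = perf_per_watt(1.0, VEGA64_WATTS)
    ti = perf_per_watt(1.0 + perf_advantage, GTX1080TI_WATTS)
    return ti / vega


low = efficiency_ratio(0.30)
high = efficiency_ratio(0.40)
print(f"1080 Ti perf/W advantage: {low:.2f}x to {high:.2f}x")
# prints "1080 Ti perf/W advantage: 1.64x to 1.77x"
```

With those inputs the 1080 Ti comes out roughly 1.6x to 1.8x more efficient, and that is before the smaller die is taken into account.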

GA100 has 20% more shaders compared to V100 but also consumes 60% more power. It doesn't take much to see that efficiency isn't that great. It's not that hard to infer these things, don't overestimate their complexity.

Here you are comparing general compute unit performance, ignoring the fact that the GA100 design invested heavily in fixed-function units (tensor cores) and actually achieves a 2.5x raw performance increase, with +55% energy efficiency compared to Volta.
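A quick sanity check of the two approaches, using only ratios already mentioned in this thread (20% more shaders, 60% more power, 2.5x raw performance). This is a back-of-the-envelope sketch, not a measurement, and the variable names are just for illustration.

```python
# All three ratios are GA100 relative to V100, taken from the posts above.
SHADER_RATIO = 1.2   # "20% more shaders"
POWER_RATIO = 1.6    # "60% more power"
PERF_RATIO = 2.5     # "2.5x raw performance increase"

# Inferring efficiency from shader count per watt.
inferred = SHADER_RATIO / POWER_RATIO

# Efficiency from performance divided by power.
from_perf = PERF_RATIO / POWER_RATIO

print(f"inferred from shader count: {(inferred - 1) * 100:+.0f}%")
print(f"from performance / power:   {(from_perf - 1) * 100:+.0f}%")
# prints "-25%" for the first line and "+56%" for the second
```

The shader-count shortcut suggests a 25% regression, while performance divided by power gives about +56%, roughly in line with the +55% figure.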

Those "FP64" units you see in the SM diagram don't do tensor operations, they just do scalar ops. Different units, for different workloads.

This is perhaps the most startling showcase of how you have no clue what you're talking about. Of course it is possible to do tensor operations on general compute units (SMs). And in fact that is what Volta was doing for anything else besides FP16 tensor ops and it is what even AMD GPUs are doing. Radeons do not have tensor cores, yet it's no problem to run let's say Google's TensorFlow on that hardware. Why? 3D graphics is actually mostly about matrix and vector multiplications, dot products etc., so general compute units are quite good at it - much better than CPUs, not as good as fixed function units like Tensor Cores.
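To illustrate the point, a matrix multiply written with nothing but scalar multiply-adds looks like the sketch below. It is plain Python for readability, not GPU code; on a GPU the same inner loop would typically be run by many threads in parallel, one per output element. The function name is hypothetical.

```python
def matmul_scalar(a, b):
    """Multiply two matrices using only scalar multiply-add operations.

    a is rows x inner, b is inner x cols, both given as lists of lists.
    """
    rows, inner, cols = len(a), len(b), len(b[0])
    if any(len(row) != inner for row in a):
        raise ValueError("inner dimensions do not match")

    out = [[0.0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            acc = 0.0
            # Each output element is a dot product: a chain of scalar
            # multiply-adds, the kind of work general ALUs already do.
            for k in range(inner):
                acc += a[i][k] * b[k][j]
            out[i][j] = acc
    return out


result = matmul_scalar([[1, 2], [3, 4]], [[5, 6], [7, 8]])
print(result)  # prints [[19.0, 22.0], [43.0, 50.0]]
```

A fixed-function unit does the same math on a whole small tile at once, which is why it is faster, but nothing here requires one.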

I wrote enough CUDA and OpenCL to know my way around.

It's very obvious to anyone by now that you have not. You are lacking the very essentials required to do that.

To quote your own evaluation of the other guy, "I am convinced you can't be educated, you are missing both the will and the capacity to understand this." It fits you better than it fits him.
