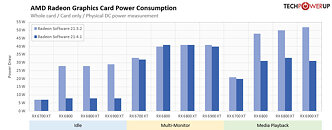

AMD's recently released Radeon Software Adrenalin 21.4.1 WHQL drivers lower non-gaming power consumption, our testing finds. AMD did not mention these reductions in the changelog of the new release. We ran a round of tests comparing the previous 21.3.2 drivers with 21.4.1 on four Radeon RX 6000 series SKUs: the RX 6700 XT, RX 6800, RX 6800 XT, and RX 6900 XT. The results show significant power-consumption improvements in certain non-gaming scenarios, such as system idle and media playback.

The Radeon RX 6700 XT shows no reduction in idle power draw, but the RX 6800, RX 6800 XT, and RX 6900 XT posted big drops in idle power consumption at 1440p, going down from 25 W to 5 W (down by about 72%). Multi-monitor power draw is unchanged. Media playback power draw is up to 30% lower on the RX 6800, RX 6800 XT, and RX 6900 XT. This is a huge improvement for builders of media PCs, as it affects not only power, but heat and noise, too.

Why AMD didn't mention these huge improvements is anyone's guess, but a closer look at the numbers could drop some hints. Even with media playback power draw dropping from roughly 50 W to 35 W, the RX 6800/6900 series cards still use more power than competing NVIDIA GeForce RTX 30-series SKUs: the RTX 3070 pulls 18 W and the RTX 3080 pulls 27 W. We also tested the driver on the older-generation RX 5700 XT and saw no changes. The Radeon RX 6700 XT already had very decent power consumption in these states, so our theory is that AMD fixed certain power-consumption shortcomings, discovered after the RX 6800 release, for the Navi 22 GPU on the RX 6700 XT. Since those fixes turned out to be stable, they were backported to the Navi 21-based RX 6800/6900 series, too.
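For readers who want to check the percentages against the wattages, here is a minimal sketch that works only from the rounded figures quoted in this post. The function name `percent_reduction` is our own and hypothetical, not part of any AMD or TechPowerUp tool. Note that the rounded idle figures (25 W to 5 W) work out to 80%, so the "about 72%" quoted above presumably comes from unrounded per-card measurements that are not reproduced here.

```python
def percent_reduction(before_w: float, after_w: float) -> float:
    """Return the drop from before_w to after_w as a percentage of before_w."""
    if before_w <= 0:
        raise ValueError("before_w must be positive")
    return 100.0 * (before_w - after_w) / before_w


# Rounded wattages quoted in the post (assumption: these are approximate,
# not the exact per-card measurements).
IDLE_BEFORE_W, IDLE_AFTER_W = 25, 5
MEDIA_BEFORE_W, MEDIA_AFTER_W = 50, 35

print(f"Idle: {percent_reduction(IDLE_BEFORE_W, IDLE_AFTER_W):.0f}% lower")
print(f"Media playback: {percent_reduction(MEDIA_BEFORE_W, MEDIA_AFTER_W):.0f}% lower")

# Media playback figures quoted for the competing NVIDIA cards.
nvidia_media_w = {"RTX 3070": 18, "RTX 3080": 27}
for card, watts in nvidia_media_w.items():
    gap = MEDIA_AFTER_W - watts
    print(f"{card}: {watts} W, {gap} W below the updated Radeon figure")
```

The media playback numbers are self-consistent: 50 W to 35 W is a 30% drop, matching the "up to 30%" figure, and the updated Radeon draw still sits above both quoted GeForce values.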

View at TechPowerUp Main Site
