You are wrong, extra vram costs money. A lot of people will gladly pay for the extra performance + double the vram. Funny how many people were just bitching about the amount of vram on Nvidia cards before AMD released their new cards. That extra $80 isn't going to be a deal breaker for anyone who wasn't already set on buying Nvidia.

I must admit 3080XT and 3090 look impressive performance and pricewise. But 6800 disappoints big time. Not because of its performance, but because of the price. 10-15% more rasterization performance for 16% more money offers a slightly worse price/performance ratio than 3070, which is a BIG SHAME. 3070 would look utterly silly if AMD had chosen to price 6800 at $499. At $579, 3070 remains a viable alternative. 3070 minuses are less vram and poorer standard rasterization; pluses are better driver support, CUDA cores (for Adobe apps), AI upscaling (DLSS), and probably better looking ray tracing. I'd say it's a draw. BUT given that Nvidia has much better brand recognition when it comes to GPUs, AMD will have a hard time selling 6800 at the given MSRP IF Nvidia can actually produce enough Ampere GPUs to satisfy demand, which might not be the case in the near future.
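For anyone who wants to check the price/performance claim above, here is a rough sketch in Python. It assumes the $499 and $579 prices mentioned in the post and takes the 10-15% performance gap at face value; the function name `relative_value` is made up for illustration.

```python
def relative_value(perf_gain: float, price_gain: float) -> float:
    """Perf-per-dollar of the pricier card relative to the cheaper one (1.0 = equal)."""
    return (1.0 + perf_gain) / (1.0 + price_gain)


# Prices in USD as quoted in the post (assumed to be launch MSRPs).
PRICE_3070 = 499.0
PRICE_6800 = 579.0

# Fractional price increase of the 6800 over the 3070, roughly 0.16.
price_gain = PRICE_6800 / PRICE_3070 - 1.0

# The post claims 10-15% more rasterization performance for the 6800.
for perf_gain in (0.10, 0.15):
    value = relative_value(perf_gain, price_gain)
    print(f"+{perf_gain:.0%} perf for +{price_gain:.0%} price -> "
          f"{value:.3f}x the 3070's perf per dollar")
```

Any result below 1.0 means the 6800 delivers less performance per dollar than the 3070 under these assumptions. This only covers rasterization and ignores the extra vram, which is the whole point of the disagreement.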


AMD Releases Even More RX 6900 XT and RX 6800 XT Benchmarks Tested on Ryzen 9 5900X

- Thread starter btarunr

- Start date

How about AMD release COD Modern Warfare, BF5, SOTR with DXR benchmark number then ? make it easier to gauge RX6000 RT capability.

XDDDD Cry cry cry.

- Joined

- Nov 11, 2016

- Messages

- 3,062 (1.13/day)

| System Name | The de-ploughminator Mk-II |
|---|---|
| Processor | i7 13700KF |
| Motherboard | MSI Z790 Carbon |
| Cooling | ID-Cooling SE-226-XT + Phanteks T30 |
| Memory | 2x16GB G.Skill DDR5 7200Cas34 |
| Video Card(s) | Asus RTX4090 TUF |
| Storage | Kingston KC3000 2TB NVME |
| Display(s) | LG OLED CX48" |
| Case | Corsair 5000D Air |
| Power Supply | Corsair HX850 |
| Mouse | Razer Viper Ultimate |
| Keyboard | Corsair K75 |
| Software | win11 |

XDDDD Cry cry cry.

Oh wow who could have thought that RX 6000 can run RT right

Benchmark Results Radeon RX 6800 XT Show Good RT scores and Excellent Time Spy GPU score

We've seen AMD post some numbers already showing that we can expect raytracing performance at a GeForce RTX 3070 level with the new 6800 XT. However, the first user based benchmarks are now surfacing...

- Joined

- Dec 31, 2009

- Messages

- 19,366 (3.71/day)

| Benchmark Scores | Faster than yours... I'd bet on it. :) |
|---|---|

lol, wat? How delusional are you? This is not a crime.

This is fraud and reviewer sites channels should warn people and condemn nvidia for this but very few does.

- Joined

- Jun 2, 2017

- Messages

- 7,905 (3.15/day)

| System Name | Best AMD Computer |
|---|---|
| Processor | AMD 7900X3D |
| Motherboard | Asus X670E E Strix |
| Cooling | In Win SR36 |
| Memory | GSKILL DDR5 32GB 5200 30 |
| Video Card(s) | Sapphire Pulse 7900XT (Watercooled) |
| Storage | Corsair MP 700, Seagate 530 2Tb, Adata SX8200 2TBx2, Kingston 2 TBx2, Micron 8 TB, WD AN 1500 |
| Display(s) | GIGABYTE FV43U |
| Case | Corsair 7000D Airflow |
| Audio Device(s) | Corsair Void Pro, Logitech Z523 5.1 |
| Power Supply | Deepcool 1000M |
| Mouse | Logitech g7 gaming mouse |
| Keyboard | Logitech G510 |
| Software | Windows 11 Pro 64 Steam. GOG, Uplay, Origin |
| Benchmark Scores | Firestrike: 46183 Time Spy: 25121 |

The point of getting a next generation GPU has always been about high FPS and great visual quality. With my GPU I always prefer high settings but my AMD driver is pretty good at setting for each Game.

Do you play the single player or multi player version of the Division 2 ?

Like I said for competitive game, like the multiplayer version of Div2, then I would use Low Settings to get the highest FPS I can get.

Now tell me which do you prefer with your current GPU:

RDR2 High setting ~60fps or 144fps with low settings

AC O High Setting ~60 fps or 144fps with low settings

Horizon Zero Dawn High Setting ~60fps or 144fps with low settings

Well to be clear when I said 60FPS, it's for the minimum FPS.

Yeah sure if you count auto-overclocking and proprietary feature (SAM) that make 6900XT as being equal to 3090, see the hypocrisy there ? Also I can find higher benchmark numbers for 3080/3090 online, so trust AMD numbers with a grain of salt.

It's 1440P Freesync2 43-165 HZ high refresh so there is nothing to lament.

You see you are still in denial. There is no bias because of SAM. It's the same thing Nvidia does with CUDA. Just because SAM mitigates NVME doesn't mean that it is somehow cheating. As far as trusting AMD you can look at my history and know that I have always had confidence in the leadership and honesty of Lisa Su. No one at AMD has confirmed or leaked anything substantive (except her) since Ryzen was announced. I know that in a world amplified by social media it is sometimes hard to understand that AMD GPUs are faster than Nvidia GPUs (apparently), period, this generation. Announcing/leaking a 3080TI card while in the midst (weeks) of launch issues with current 3000 cards is actually laughable in its desperation. Just imagine if AMD announces that 3000 series CPUs will support SAM.........period, point, blank. The future is indeed bright and X570/B550 has proven to be a great investment.


Deleted member 185088

Guest

I think no hardware is good enough for RayTracing. And it doesn't bring anything special, games need more polygons and way better textures.

How about AMD release COD Modern Warfare, BF5, SOTR with DXR benchmark number then ? make it easier to gauge RX6000 RT capability.

Control PC: a vision for the future of real-time rendering?

Last week, we posed the question: has ray tracing finally found its killer app? While Minecraft RTX and Quake 2 RTX hav…

- Joined

- Dec 31, 2009

- Messages

- 19,366 (3.71/day)


The difference is in games. This thread is about gaming benchmarks.

You see you are still in denial. There is no bias because of SAM. It's the same thing Nvidia does with CUDA. Just because SAM mitigates NVME doesn't mean that it is somehow cheating.

In order to get the full performance of these cards, you need to be balls deep in the AMD ecosystem. This means the latest mobos and processors. Most people aren't there and it will take time to get there. That said, the real curiosity to me is how this works on Intel and non 5000 series/B550/X570 setups. From the looks of the charts, that knocks things down a peg.

just imagine....................as that's all it will ever be....

Just imagine if AMD announces that 3000 series CPUs will support SAM.

- Joined

- Sep 15, 2016

- Messages

- 475 (0.17/day)

So serious question: If a 6800 will get you 80-100 FPS at 4k, is there any incentive other than "future-proofing" to purchase anything higher specced? I know some people have 120hz+ 4k panels but for the 144hz/1440p and 60hz/4k crowd (i.e., the vast majority) it doesn't seem to make a lot of sense to dump extra heat into your chassis.

6800 is a different hardware class. Rdna2 has less code bubbles. If anything it will lessen your heat impact, compared to your runner up gpu.

So serious question: If a 6800 will get you 80-100 FPS at 4k, is there any incentive other than "future-proofing" to purchase anything higher specced? I know some people have 120hz+ 4k panels but for the 144hz/1440p and 60hz/4k crowd (i.e., the vast majority) it doesn't seem to make a lot of sense to dump extra heat into your chassis.

I don't want to be a teamster, but this is how the green team thinks. These gpus aren't mobile. They aren't for the same kinds of workloads.

Beating yesteryears competition to the punch is something only Intel gpu goals could imagine, so set your goals high, not to become a laughing stock, imo.

- Joined

- Apr 10, 2020

- Messages

- 480 (0.33/day)

1 Gbyte GDDR6, 3,500 MHz, 15 Gbps (MT61K256M32JE-14: A TR) costs $7.01 at the moment if you order up to a million pieces. You can negotiate much lower prices if you order more. That's 56 bucks for 8 gigs in the worst-case scenario (around 40 is more realistic). AMD is likely making a nice profit selling you additional vram for 80 bucks.

You are wrong, extra vram costs money. A lot of people will gladly pay for the extra performance + double the vram. Funny how many people were just bitching about the amount of vram on Nvidia cards before AMD released their new cards. That extra $80 isn't going to be a deal breaker for anyone who wasn't already set on buying Nvidia.
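The vram arithmetic in this exchange is easy to reproduce. Below is a minimal sketch using the $7.01 per GB figure and the $80 price gap from the posts; the $5.00 per GB "negotiated" price is only a stand-in for the "around 40 is more realistic" guess, and `vram_margin` is a made-up helper name.

```python
def vram_margin(price_per_gb: float, extra_gb: int, price_premium: float):
    """Return (memory cost, what is left of the premium after paying for the memory)."""
    memory_cost = price_per_gb * extra_gb
    return memory_cost, price_premium - memory_cost


EXTRA_GB = 8          # the extra 8 gigs discussed in the post
PRICE_PREMIUM = 80.0  # USD, the "extra $80" from the quoted post

# Worst case from the post: $7.01 per GB.
list_cost, list_margin = vram_margin(7.01, EXTRA_GB, PRICE_PREMIUM)

# Assumption: $5.00 per GB, chosen only to match the "around 40" estimate.
negotiated_cost, negotiated_margin = vram_margin(5.00, EXTRA_GB, PRICE_PREMIUM)

print(f"list price:  memory ${list_cost:.2f}, left over ${list_margin:.2f}")
print(f"negotiated:  memory ${negotiated_cost:.2f}, left over ${negotiated_margin:.2f}")
```

This treats the whole $80 as payment for memory chips alone, so it leaves out the extra performance, board changes, and retail margins. It backs up the memory cost figure, not necessarily the conclusion about profit.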

- Joined

- Oct 17, 2013

- Messages

- 121 (0.03/day)

Do you play the single player or multi player version of the Division 2 ?

Like I said for competitive game, like the multiplayer version of Div2, then I would use Low Settings to get the highest FPS I can get.

Now tell me which do you prefer with your current GPU:

RDR2 High setting ~60fps or 144fps with low settings

AC O High Setting ~60 fps or 144fps with low settings

Horizon Zero Dawn High Setting ~60fps or 144fps with low settings

Well to be clear when I said 60FPS, it's for the minimum FPS.

Yeah sure if you count auto-overclocking and proprietary feature (SAM) that make 6900XT as being equal to 3090, see the hypocrisy there ? Also I can find higher benchmark numbers for 3080/3090 online, so trust AMD numbers with a grain of salt.

I completely agree with this guy. Refresh rate does not matter as much after 60 fps. I own a 60hz, a 120hz, and a 144hz monitor, one of which is a laptop with a horrid gray to gray response time of 45ms. All three of them perform similarly. They feel the same with vsync on the 60hz monitor. If you were to turn vsync off then the difference shows. That is it. The only time these higher refresh rate monitors come into play is when the game is producing more frames than a monitor can handle. However, with implementations like vsync this becomes less of a deal. In my opinion response time, gray to gray performance, brightness, and color accuracy are hands down the most important aspects of monitors. I want to be lost in a new world and not reminded that I have to work in the morning.

- Joined

- Jan 25, 2006

- Messages

- 1,470 (0.22/day)

| Processor | Ryzen 1600AF @4.2Ghz 1.35v |
|---|---|
| Motherboard | MSI B450M PRO-A-MAX |
| Cooling | Deepcool Gammaxx L120t |
| Memory | 16GB Team Group Dark Pro Sammy-B-die 3400mhz 14.15.14.30-1.4v |
| Video Card(s) | XFX RX 5600 XT THICC II PRO |
| Storage | 240GB Brave eagle SSD/ 2TB Seagate Barracuda |
| Display(s) | Dell SE2719HR |
| Case | MSI Mag Vampiric 011C AMD Ryzen Edition |
| Power Supply | EVGA 600W 80+ |
| Software | Windows 10 Pro |

How dare AMD price their cards in line with nvidia cards /performance, how dare they turn a profit, says all the ones who were praising nvidia 2 weeks ago for selling a 2080ti performance gpu for 500, which are also vapourware

- Joined

- Nov 11, 2016

- Messages

- 3,062 (1.13/day)


The point of getting a next generation GPU has always been about high FPS and great visual quality. With my GPU I always prefer high settings but my AMD driver is pretty good at setting for each Game.

It's 1440P Freesync2 43-165 HZ high refresh so there is nothing to lament.

You see you are still in denial. There is no bias because of SAM. It's the same thing Nvidia does with CUDA. Just because SAM mitigates NVME doesn't mean that it is somehow cheating. As far as trusting AMD you can look at my history and know that I have always had confidence in the leadership and honesty of Lisa Su. No one at AMD has confirmed or leaked anything substantive (except her) since Ryzen was announced. I know that in a world amplified by social media it is sometimes hard to understand that AMD GPUs are faster than Nvidia GPUs (apparently), period, this generation. Announcing/leaking a 3080TI card while in the midst (weeks) of launch issues with current 3000 cards is actually laughable in its desperation. Just imagine if AMD announces that 3000 series CPUs will support SAM.........period, point, blank. The future is indeed bright and X570/B550 has proven to be a great investment.

So you agree that you are not using Low settings just to get 144fps then ?

New games are designed to push next gen GPUs to their knees all the same, it's all a scheme to sell you GPUs you know, or you could just play CSGO for eternity and not care about brand new GPUs.

Well I am just as happy when AMD is competitive as you are, because now the retailers can't charge cutthroat prices for Ampere as before, though at this point I'm just gonna wait for 3080 Ti.

- Joined

- Apr 10, 2020

- Messages

- 480 (0.33/day)

xx70 class GPU should never cost more than 400 bucks. I blame FOMOs, fanboys and AMD not being competitive in the high end retail GPU market segment in the last 7 years, enabling Ngreedia to hike prices as they please. Let's face it, 3090 is nothing more than "3080TI" with additional vram for 500 bucks more than 2080TI which was 300 more ($500 in real life) than 1080TI. Praised 3070 is the most expensive xx70 class GPU ever released besides 2070(S), yet it was the best selling RTX in the Turing line, go figure.

How dare AMD price their cards in line with nvidia cards /performance, how dare they turn a profit, says all the ones who were praising nvidia 2 weeks ago for selling a 2080ti performance gpu for 500, which are also vapourware

What really pisses me off now is AMD deciding to go along with Ngreedia price hikes, not even bothering to compete with them on bettering price to performance ratio anymore. The way things stand today, GPU prices will only go up as AMD has obviously chosen higher profit margins over trying to increase GPU market share (by offering consumers substantially more for less). DIY PC builders are getting milked by both companies and they seem not to care. That's why I decided to get out of the market. I'm keeping 1080TI and will wait till RDNA3 to buy 3080XT on 2nd hand market for 300 bucks.


- Joined

- Sep 3, 2019

- Messages

- 2,978 (1.76/day)

- Location

- Thessaloniki, Greece

| System Name | PC on since Aug 2019, 1st CPU R5 3600 + ASUS ROG RX580 8GB >> MSI Gaming X RX5700XT (Jan 2020) |
|---|---|
| Processor | Ryzen 9 5900X (July 2022), 150W PPT limit, 79C temp limit, CO -9~14 |
| Motherboard | Gigabyte X570 Aorus Pro (Rev1.0), BIOS F37h, AGESA V2 1.2.0.B |
| Cooling | Arctic Liquid Freezer II 420mm Rev7 with off center mount for Ryzen, TIM: Kryonaut |
| Memory | 2x16GB G.Skill Trident Z Neo GTZN (July 2022) 3600MHz 1.42V CL16-16-16-16-32-48 1T, tRFC:288, B-die |
| Video Card(s) | Sapphire Nitro+ RX 7900XTX (Dec 2023) 314~465W (387W current) PowerLimit, 1060mV, Adrenalin v24.3.1 |
| Storage | Samsung NVMe: 980Pro 1TB(OS 2022), 970Pro 512GB(2019) / SATA-III: 850Pro 1TB(2015) 860Evo 1TB(2020) |
| Display(s) | Dell Alienware AW3423DW 34" QD-OLED curved (1800R), 3440x1440 144Hz (max 175Hz) HDR1000, VRR on |
| Case | None... naked on desk |
| Audio Device(s) | Astro A50 headset |
| Power Supply | Corsair HX750i, 80+ Platinum, 93% (250~700W), modular, single/dual rail (switch) |
| Mouse | Logitech MX Master (Gen1) |
| Keyboard | Logitech G15 (Gen2) w/ LCDSirReal applet |
| Software | Windows 11 Home 64bit (v23H2, OSB 22631.3155) |

Personally I do not consider 6900XT a 3090 equal. It's between 3080 and 3090. And that is without any feature enabled.

On the other hand 6800XT is a 3080 direct competitor. Even with all the extra features off.

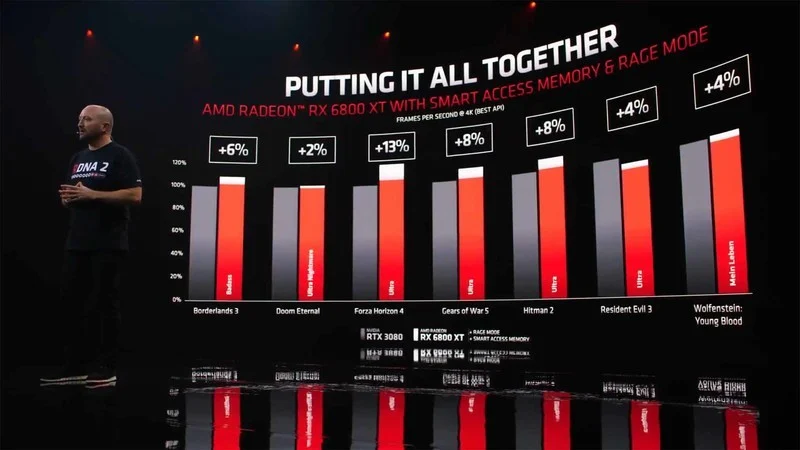

At AMD's presentation the "RageMode" OC and SAM together gained around ~6.5% FPS on avg (+2~13% depending on the game). We really don't know how much of it was from SAM alone.

Just remove white tiles above red lines


- Joined

- Dec 31, 2009

- Messages

- 19,366 (3.71/day)


I've heard rage mode isn't much, that it is mostly SAM doing this(?). I recall Linus mentioning that it isn't much more than a power limit increase and fan speed increase to get more boost.

At AMD's presentation the "RageMode" OC and SAM altogether gained around a ~6.5% FPS on avg (+2~13% depending the game). We really dont know how much was it from SAM alone.

Just remove white tiles above red lines

This is great for the few who are balls deep in their ecosystem...but what about for the majority of users? How many, in the current landscape, are using non B550/X570 systems (an overwhelming majority, surely)? You need to upgrade your CPU and mobo to support this feature. Personally, for the majority, we need to see how these perform without. From the chart above, looks like it takes a win back to a loss and a couple of wins back to virtual ties. I'd love to see this compared to overclocked 3080's instead of whatever they have... oh and on the same API.

- Joined

- Apr 10, 2020

- Messages

- 480 (0.33/day)

Now, let's be honest RDNA2 rasterization performance is very good if AMD is not lying, pricing not so much. 1000 bucks is still A LOT of $ to pay for a gaming GPU.

Personally I do not consider 6900XT a 3090 equal. Its between 3080 and 3090. And that is without any feature enabled.

On the other hand 6800XT is a 3080 direct competitor. Even with all the extra features off.

At AMD's presentation the "RageMode" OC and SAM altogether gained around a ~6.5% FPS on avg (+2~13% depending the game). We really dont know how much was it from SAM alone.

Just remove white tiles above red lines

View attachment 174173

- Joined

- Jun 2, 2017

- Messages

- 7,905 (3.15/day)


Isn't the purpose of gaming benchmarks to gauge performance, period? Yet people also included Adobe Premiere benchmarks in AMD reviews. I do not agree that AMD has not penetrated the market and it is not like X570 or B550 (some) boards are expensive. It is not like you have to get a X670 or B650 board. X570 and now B550 are both mature platforms. If these first CPUs all support SAM then that means that the non X parts will do the same thing so the gap could be wider. I can understand as I too am intrigued to see how Intel and Nvidia cards work with either. I am going to be selfish in this though as I bought a X570 months ago specifically for this as soon as I saw the PS5 technical brief.

The difference is in games. This thread is about gaming benchmarks.

In order to get the full performance of these cards, you need to be balls deep in the AMD ecosystem. This means the latest mobos and processors. Most people aren't there and that will take time to get there. That said, the real curiosity to me how this works on intel and non 5000 series/b550/x570 setups. From the looks of the charts, that knocks things down a peg.

just imagine....................as that all it will ever be....

- Joined

- Sep 3, 2019

- Messages

- 2,978 (1.76/day)

- Location

- Thessaloniki, Greece


I'm not going to argue... but

Now, let's be honest RDNA2 rasterization performance is very good if AMD is not lying, pricing not so much. 1000 bucks is still A LOT of $ to pay for a gaming GPU.

View attachment 174176

AMD pricing +54% more for a +5% perf GPU has somehow followed the utter stupidity of...

nVidia pricing +114% more for a +10% perf GPU

It is what it is...!

Nonetheless, AMD's cards have more perf/$ value.
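To put numbers on the two step-ups quoted in this post, here is a small sketch that turns "+X% price for +Y% perf" into a perf-per-dollar ratio. The percentages are the ones from the post; the function name is hypothetical and the perf figures are vendor claims, not measurements.

```python
def step_up_value(perf_gain: float, price_gain: float) -> float:
    """Perf-per-dollar of the top card relative to the card one step below it."""
    return (1.0 + perf_gain) / (1.0 + price_gain)


# Figures as stated in the post: +54% price for +5% perf, +114% price for +10% perf.
amd_step = step_up_value(perf_gain=0.05, price_gain=0.54)
nvidia_step = step_up_value(perf_gain=0.10, price_gain=1.14)

print(f"AMD top card keeps    {amd_step:.0%} of the lower card's perf per dollar")
print(f"nVidia top card keeps {nvidia_step:.0%} of the lower card's perf per dollar")
```

Both ratios come out well below 1.0, so both halo cards are poor value next to the card below them, with the nVidia step being the steeper drop. On its own this compares steps within each lineup and says nothing about AMD versus nVidia at the same tier.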


- Joined

- Apr 19, 2017

- Messages

- 71 (0.03/day)

100 percent agree. People are only talking this way because it's AMD. I think the 6800 non xt is really good. Double vram plus 15-20% faster than 3070. lmao and people want that for no additional cost. Delusional

You are wrong, extra vram costs money. A lot of people will gladly pay for the extra performance + double the vram. Funny how many people were just bitching about the amount of vram on Nvidia cards before AMD released their new cards. That extra $80 isn't going to be a deal breaker for anyone who wasn't already set on buying Nvidia.

- Joined

- Dec 31, 2009

- Messages

- 19,366 (3.71/day)


A few sites cover that, sure. But this is in reference to gaming and following the thread here.

Are not the purpose of Gaming benchmarks not to gauge the performance period but people also included Adobe Premiere benchmarks in AMD reviews? I do not agree that AMD has not penetrated the market and it is not like X570 or B550 (some) boards are expensive. It is not like you have to get a X670 or B650 board. X570 and now B550 are both mature platforms. If these first CPUs all support SAM then that means that the non X parts will do the same thing so the gap could be wider. I can understand as I too am intrigued to see how Intel and Nvidia cards work with either. I am going to be selfish in this though as I bought a X570 months ago specifically for this as soon as I saw the PS5 technical brief.

They've penetrated the market... but of those.. who owns the B550/X570? I'd guess more own X470 and lower than X570/B550. Remember, there was a large contingent pissed about 5000 series support on those 400 series boards. With that, it feels like many were just holding out. Also, not a soul has these CPUs yet. So, there is literally zero penetration on that front. So at minimum, you need to buy a new CPU. At worst, you're buying a CPU and a motherboard for this. Again, not something a majority has at this time. So IMO, it would be more than prudent to include the majority here to see how it performs on those systems until one gets the new 5000 series and a motherboard. Obviously seeing BOTH would be ideal.

- Joined

- Feb 23, 2019

- Messages

- 5,616 (2.99/day)

- Location

- Poland

| Processor | Ryzen 7 5800X3D |
|---|---|
| Motherboard | Gigabyte X570 Aorus Elite |
| Cooling | Thermalright Phantom Spirit 120 SE |
| Memory | 2x16 GB Crucial Ballistix 3600 CL16 Rev E @ 3800 CL16 |
| Video Card(s) | RTX3080 Ti FE |
| Storage | SX8200 Pro 1 TB, Plextor M6Pro 256 GB, WD Blue 2TB |
| Display(s) | LG 34GN850P-B |
| Case | SilverStone Primera PM01 RGB |
| Audio Device(s) | SoundBlaster G6 / Fidelio X2 / Sennheiser 6XX |
| Power Supply | SeaSonic Focus Plus Gold 750W |
| Mouse | Endgame Gear XM1R |
| Keyboard | Wooting Two HE |

It's funny

Next time read thoroughly.

Oh wow who could have thought that RX 6000 can run RT right

Benchmark Results Radeon RX 6800 XT Show Good RT scores and Excellent Time Spy GPU score

We've seen AMD post some numbers already showing that we can expect raytracing performance at a GeForce RTX 3070 level with the new 6800 XT. However, the first user based benchmarks are now surfacing...www.guru3d.com

That is about as fast as the RTX 3070 with ray tracing, however, with DLSS on. So if you filter out the performance benefit from DLSS, that's not bad, really.

Next time read thoroughly.

We can't really compare T&L on the first GeForce with ray tracing. Ray tracing adds a new feature to graphics rendering, whereas T&L used a fixed function on the GPU to speed up something that every game already had to do and was doing on the CPU.

So we are really adding new tricks to game engines right now, not offloading or accelerating an already existing process.

The thing is, we are at a point where we have to do so many tricks to emulate lights and reflections that it comes really close to requiring as much power as real ray tracing. Using ray tracing for these things requires a lot of computational power but way less complexity than all the tricks we currently use to emulate it.

It's one of the ways we can increase graphics fidelity. But there are still other areas where we need to improve, like more polygons, better physics and object deformation, and better textures and materials.

Ray tracing in games is clearly the future and it will clearly improve with every generation. It's like when the first shaders were added to GPUs. It was just for a few effects and the performance hit was huge. By the time it started to be used widely, the first GPUs supporting it were totally outclassed anyway. It will be the same with the current generation (both Nvidia and AMD GPUs).

So, yes, ray tracing is the future and is here to stay. But no, it shouldn't drive a buying decision. No one should say, "I will get the 3080 instead of a 6800 XT to future proof due to ray tracing."

People should just buy the best graphics card for games released right now and maybe in the next year max.


- Joined

- Nov 11, 2016

- Messages

- 3,062 (1.13/day)


It's funny

Next time read thoroughly.

It's funny,

I just showed that RX6000 can indeed run RTX, yet AMD did not share the numbers.

Next time read more thoroughly please.

Also I get 86fps with 2080 Ti at 1440p RTX ON DLSS OFF, I have no clue where the guy who wrote the article got his numbers from.
