
The Theory:

I had gotten the newer version of Afterburner, with its accompanying stress test app based on FurMark. This one solved an issue with PostProcess FX crashing the app on my PC, and when I loaded the FX the FPS were a couple hundred times higher than without them. That isn't what this is about though! The relevance is that I used it to test how much of an impact the clocks would make on frame rate, since a higher frame rate shows the performance impact much more precisely than a lower one.

As I went about clocking, I noticed there seemed to be little or no benefit from raising the GPU clock as much as 50MHz over stock, compared to memory clocks, which made a noticeable difference. Even more puzzling was that past around 1370 to 1380MHz on the memory (I didn't peg the exact point haha), performance seemed to change drastically. Aside from that oddity, my guess was that since the GPU and memory run at the same speeds as the 5870's, the difference in the memory bus (and perhaps fewer shader cores) had left the GPU a bit bandwidth starved. That at least seemed to be the case in the FurMark app.

I hadn't actually run the benchmark feature at that point, but these were my initial findings (basically quoting myself from a PM to Kantastic), in FurMark with post processing turned on (the MSI logo rotates and the FPS changes based on that):

- 850/1200: lows of 595 FPS, highs of 635 FPS.
- 900/1200: no apparent gain.
- 850/1300: lows in the 620s, highs in the 660s.
- 900/1300: seems to start kicking in the ECC feature.
- Memory clocks up to 1370 pretty much yield no gain.
- At 1395 the lows are in the 660s and the highs are 700-705 FPS! Above that my memory starts to crap out; 1395 is stable so far.
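To put rough numbers on the "bandwidth starved" guess, here's a quick sketch comparing how much of the memory clock increase actually turned into FPS in FurMark. It assumes the low/high readings can be averaged into a midpoint, and reads "lows in the 620s, highs in the 660s" as 620 and 660; the helper names are just made up for illustration.

```python
# Hypothetical helpers: compare relative clock increase with relative FPS increase,
# using midpoints of the low/high FurMark readings quoted above.
# Assumption: "620s" and "660s" are read as 620 and 660.


def midpoint(low: float, high: float) -> float:
    """Midpoint of a low/high FPS reading."""
    return (low + high) / 2


def pct_change(old: float, new: float) -> float:
    """Percentage change from old to new."""
    return (new - old) / old * 100


baseline_fps = midpoint(595, 635)  # 850/1200
mem_oc_fps = midpoint(620, 660)    # 850/1300

mem_clock_gain = pct_change(1200, 1300)           # memory clock increase, %
fps_gain = pct_change(baseline_fps, mem_oc_fps)   # FPS increase, %

# Fraction of the memory clock increase that showed up as extra FPS.
scaling = fps_gain / mem_clock_gain

# Core went 850 -> 900 at 1200 memory with no apparent FPS gain.
core_clock_gain = pct_change(850, 900)

print(f"memory clock: +{mem_clock_gain:.2f}%")
print(f"FPS (midpoint): +{fps_gain:.2f}%")
print(f"scaling: {scaling:.2f}")
print(f"core clock: +{core_clock_gain:.2f}% with no apparent FPS gain")
```

Roughly half of the +8.3% memory bump shows up as FPS, while a +5.9% core bump shows nothing, which at least fits the idea that memory bandwidth is the limiter in this test. Only two data points, though, so take it with a grain of salt.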

System Config:

- PhII 555BE: 3717MHz
- DDR3: 1600MHz 7-7-7-30 1T
- NB & HT: 2400MHz
- PCIe: 115MHz (irrelevant really)

Cat 10.3 Settings:

- AA & AF: Application Controlled, Box Filter
- ADAA: Off
- ATMS: MSAA
- MipMap: High Quality
- Cat AI: Normal/Low
- VSync: Off, unless application requests

Plus various other minor registry tweaks: turning on the Tiling options, and 2 CF settings that I haven't found to impact performance at all, but I set them for peace of mind lol

Overclocks Tested:

Core - 850 (Default), 860, 875, 900.

Mem - 1200 (Default), 1300, 1341, 1395. (1342 due to 1340 = 1338)

I'm testing every combination of the four, with one exception: the default core clock is only run with the default 1200MHz memory, simply because it's so close to 860MHz, and each run takes 3+ minutes in just 3DM06, which is quite a while to spend on this. That is also why I'm not running the full gamut of tests; the ones I am running are listed below.

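The test matrix described above works out like this. A small sketch, assuming about 3 minutes per 3DM06 run (the post says "3+ mins", so treat the total as a floor):

```python
from itertools import product

# Clock lists from the "Overclocks Tested" section (MHz).
CORE_CLOCKS = [850, 860, 875, 900]
MEM_CLOCKS = [1200, 1300, 1341, 1395]
DEFAULT = (850, 1200)

# Assumption: roughly 3 minutes per 3DM06 run ("3+ mins" in the post).
MINUTES_PER_RUN = 3


def test_matrix():
    """Every core/memory combination, except the default core clock,
    which is only paired with the default memory clock."""
    runs = [DEFAULT]
    for core, mem in product(CORE_CLOCKS[1:], MEM_CLOCKS):
        runs.append((core, mem))
    return runs


runs = test_matrix()
print(len(runs), "runs, at least", len(runs) * MINUTES_PER_RUN, "minutes")
# prints: 13 runs, at least 39 minutes
```

Skipping 850 with the three overclocked memory speeds saves 3 of the 16 possible runs, so about 9 minutes per pass.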

For the tests, unless I specify otherwise, settings are left at default. The margin of error I find for 3DMark is about +1 FPS, +~50MT/s single texture and +~175MT/s multi texture. Oddly, I've not really gotten any results lower than the first run's score, so for any tests that do show lower, I'm attributing it to the 5000 series GPU using its ECC feature, which detects errors and corrects them rather than letting them show up as graphical anomalies. That correction costs performance, so any results that are lower with a higher OC are probably due to that function. Not many reviewers at the big sites seem to know this, though.
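A sketch of how those margins could be applied when reading results, comparing each run to the previous (lower) clock's result. Since the run-to-run noise I saw only ever went upward, anything lower is flagged as the error-correction case rather than noise. The metric names are hypothetical labels, not 3DMark's own.

```python
# Observed run-to-run noise (upward only) from the paragraph above.
# Metric names are hypothetical labels, not 3DMark's own.
MARGINS = {
    "fps": 1.0,
    "single_texture_mts": 50.0,
    "multi_texture_mts": 175.0,
}


def classify(metric: str, previous: float, current: float) -> str:
    """Classify a result against the previous (lower) clock's result."""
    delta = current - previous
    if delta < 0:
        # Lower with a higher OC: likely the error correction kicking in.
        return "drop (possible error correction)"
    if delta <= MARGINS[metric]:
        # Within the observed upward noise, so not a real gain.
        return "within noise"
    return "gain"


print(classify("fps", 100.0, 100.8))                  # within noise
print(classify("multi_texture_mts", 5000.0, 5300.0))  # gain
print(classify("fps", 100.0, 99.5))                   # drop (possible error correction)
```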

[H]ard|OCP put it best when they said the OC methodology you have to use with these cards (and probably future AMD GPUs) is: OC until you notice a drop in performance, as that is your max; then go back to your previous OC, and if you feel like it, raise it slowly until it drops again and back off a couple MHz. With how well things take clocks these days, I generally go in increments of 10-15MHz until I know I'm close to the limit, and then increase by 2-5MHz.
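That procedure boils down to a coarse-then-fine search. Here's a rough sketch, where `benchmark` is a hypothetical callable that applies a clock (MHz), runs a test, and returns a score (higher is better); there's no real Afterburner API behind it. The default steps of 10 and 2 are picked from the 10-15MHz and 2-5MHz ranges above, and `limit` is just a safety cap so it can't run forever.

```python
from typing import Callable


def find_max_clock(
    benchmark: Callable[[int], float],
    start: int,
    limit: int,
    coarse_step: int = 10,
    fine_step: int = 2,
) -> int:
    """Raise the clock until the score drops, keep the last good clock,
    then repeat from there with smaller steps."""
    best_clock, best_score = start, benchmark(start)
    for step in (coarse_step, fine_step):
        while best_clock + step <= limit:
            score = benchmark(best_clock + step)
            if score < best_score:
                # Performance dropped: stay at the previous (last good) clock.
                break
            best_clock, best_score = best_clock + step, score
    return best_clock
```

For a real run, the raw `<` comparison should probably respect the noise margins mentioned earlier, otherwise a lucky or unlucky run could end the search early.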

GPU-Z couldn't reach its server for validation, so you'll have to make do with screen caps of the program. They don't cover the whole list of clocks, since I took them yesterday before I decided to go more in depth with this.

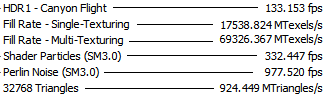

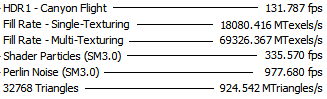

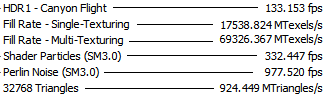

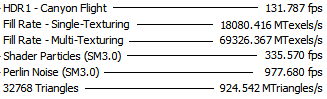

3DM06:

Graphics - Default

Tests - Canyon Flight, Fill Rate Single and Multi Texture, Shader Particles, Perlin Noise, 32768 Triangles.

Default...

- 860/1200, 860/1300, 860/1341, 860/1395
- 875/1200, 875/1300, 875/1341, 875/1395
- 900/1200, 900/1300, 900/1341, 900/1395

Verdict: a mixed bag for me. Some of the tests show no benefit from core clocks, then again some don't say much about memory clocks either, heh. The flip side is just the same: some show a bit of gain in both camps :\ I'm sure 3DM01 will show similar conclusions.

I'm thinking I'll have to find a game to benchmark in order to get a clearer picture, which is also a problem, as the few I have don't have easy benchmark modes or flat out don't have any.

Later tonight (or perhaps tomorrow) I will pick up with 3DM01. Yes, it's old, but basically you are getting results from what I have to work with, as my Verizon Wireless Broadband's 5GB/mo cap severely hinders my downloading! I went out on a limb to get Unigine's Heaven because Sandra was trying to tell me DX11 wasn't working and that I didn't have the correct shaders or some BS for OGL, heh. That benchmark clearly proved otherwise.

3DM01 (skipping 875MHz test):

Graphics - 1680x1050, no AA, Triple Buffering, DXT1.

Tests - Nature, Fill Rate Single and Multi Texture.
