| System Name | Dark Palimpsest |
|---|---|
| Processor | Intel i9 13900K with Optimus Foundation Block |
| Motherboard | EVGA Z690 Classified |
| Cooling | MO-RA3 420mm Custom Loop |
| Memory | G.Skill 6000CL30, 64GB |
| Video Card(s) | Nvidia 4090 FE with Heatkiller Block |
| Storage | 3 NVMe SSDs, 2TB each, plus a SATA SSD |
| Display(s) | Gigabyte FO32U2P (32" QD-OLED), Asus ProArt PA248QV (24") |
| Case | Be quiet! Dark Base Pro 900 |
| Audio Device(s) | Logitech G Pro X |
| Power Supply | Be quiet! Straight Power 12 1200W |
| Mouse | Logitech G502 X |
| Keyboard | GMMK Pro + Numpad |

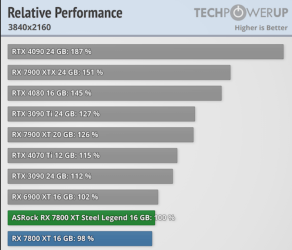

Yeah, I feel you there. The 4090 is a great card, but it's expensive, and every other card this gen is even more overpriced for what you get. The only thing that makes the 4090's pricing look reasonable is comparing it to the 3000 series, which launched amid a pandemic-driven manufacturing shortage and a massive boom in demand from work-from-home setups and home-based hobbies (i.e., gaming), on top of a cryptocurrency craze. Those prices were insane, but at least there was a "supply and demand" explanation. Even though none of those conditions exist anymore, Nvidia based its 4000-series pricing on them anyway, because if people paid those prices once, Nvidia assumes they'll pay them again. So yeah... here we are.

Unfortunately, AI demand is going to consume huge amounts of Nvidia's silicon, and gamers are going to play second fiddle again, so we'll have more bad pricing for years to come. My hopes for the 5000 series (and AMD's 8000 series) are low enough that, even though I was planning to skip this generation, I caved and bought a 4090 FE when one showed up at MSRP at Best Buy a couple of months ago. This must be what getting old feels like.