NVIDIA Announces DLSS 2.0 and New GeForce 445.75 Game Ready Drivers

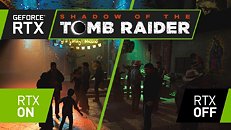

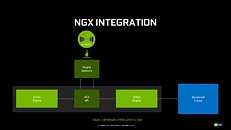

NVIDIA today announced Deep Learning Super Sampling 2.0 (DLSS 2.0), a performance-enhancement feature distributed through the new GeForce 445.75 Game Ready drivers. DLSS 2.0 is NVIDIA's second attempt at a holy grail: a performance boost at an acceptable level of quality loss (think of what MP3 did to WAV). It works by rendering the 3D scene at a lower resolution than your display is capable of, then upscaling it, with a pre-trained deep-learning neural network reconstructing the lost details. Perhaps the two biggest differences between DLSS 2.0 and the original DLSS, which made its debut with the GeForce RTX 20-series in 2018, are that the DLSS neural network no longer needs game-specific content for training, and that DLSS 2.0 implements a rendering technique called temporal feedback.
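
To make the idea of temporal feedback more concrete, here is a minimal Python/NumPy sketch of generic jittered temporal accumulation. It rests on loudly stated assumptions: the scene is static, each low-resolution frame samples the scene at a different sub-pixel offset ("jitter"), and a fixed blend weight stands in for NVIDIA's trained neural network. The function names `render_low_res` and `accumulate` are hypothetical and not part of any NVIDIA API. The sketch shows how frames that each contain only 1/4 of the pixels can, over several frames, fill in a full-resolution image.

```python
import numpy as np

def render_low_res(scene: np.ndarray, factor: int, dy: int, dx: int) -> np.ndarray:
    """Hypothetical renderer: one sample per low-res pixel, taken at the
    sub-pixel jitter offset (dy, dx) inside each factor x factor block."""
    return scene[dy::factor, dx::factor]

def accumulate(history, seen, low_res, factor, dy, dx, alpha=0.5):
    """Scatter the jittered samples into the full-res history buffer.
    The first sample at a pixel is taken as-is; later samples are blended
    in with a fixed weight (a stand-in for a learned reconstruction)."""
    target = history[dy::factor, dx::factor]
    first = ~seen[dy::factor, dx::factor]
    blended = np.where(first, low_res, (1 - alpha) * target + alpha * low_res)
    history[dy::factor, dx::factor] = blended
    seen[dy::factor, dx::factor] = True
    return history, seen

rng = np.random.default_rng(0)
scene = rng.random((8, 8))   # stand-in "native resolution" image
factor = 2                   # each low-res frame has 1/4 of the pixels
history = np.zeros_like(scene)
seen = np.zeros(scene.shape, dtype=bool)

# Cycle through the four sub-pixel offsets, one per frame.
for dy, dx in [(0, 0), (1, 1), (0, 1), (1, 0)]:
    frame = render_low_res(scene, factor, dy, dx)
    history, seen = accumulate(history, seen, frame, factor, dy, dx)

print(np.allclose(history, scene))  # True for this static scene
```

Real temporal techniques must also reproject the history buffer when the camera or objects move and discard history that no longer matches the scene; this sketch skips both, which is why it recovers the static test image exactly.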

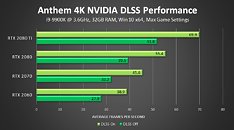

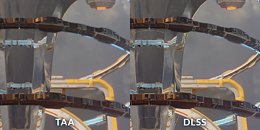

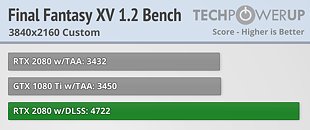

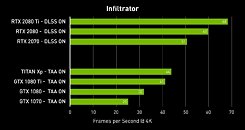

DLSS 2.0 offers image quality comparable to native resolution while rendering only 1/4 to 1/2 of the pixels, using new temporal feedback techniques to reconstruct detail in the image. According to NVIDIA, DLSS 2.0 also uses the Tensor cores on GeForce RTX GPUs "more efficiently," executing "2x faster" than the original DLSS. Lastly, DLSS 2.0 gives users greater control over image quality through three modes, each of which sets your game's rendering resolution: Quality, Balanced (1:2), and Performance (1:4), where the ratio is the number of rendered pixels to the number of displayed pixels. Resolution scaling alone is a sure-shot way to gain performance, but at a noticeable loss of quality; DLSS uses AI to restore some of the lost detail. The net DLSS performance uplift is the performance gained from rendering at a lower resolution, minus the cost of running the AI-based reconstruction. In addition to DLSS 2.0, the GeForce 445.75 drivers are Game Ready for "Half-Life: Alyx."
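
The resolution arithmetic behind these modes can be sketched in a few lines of Python. This is an illustration only, assuming the ratios above are pixel-count ratios: since pixel count scales with the square of each axis, each axis shrinks by the square root of the ratio. The helper names `render_resolution` and `net_uplift`, and the frame times in the example, are hypothetical and not measured DLSS figures.

```python
import math

def render_resolution(display_w: int, display_h: int, pixel_ratio: float) -> tuple[int, int]:
    """Internal render resolution for a rendered-to-displayed pixel-count ratio.
    Each axis is scaled by sqrt(ratio) so the total pixel count scales by ratio."""
    scale = math.sqrt(pixel_ratio)
    return round(display_w * scale), round(display_h * scale)

def net_uplift(native_ms: float, low_res_ms: float, reconstruct_ms: float) -> float:
    """Speed-up over native rendering: native frame time divided by the
    low-res frame time plus the (assumed) per-frame reconstruction cost."""
    return native_ms / (low_res_ms + reconstruct_ms)

print(render_resolution(3840, 2160, 1 / 4))   # (1920, 1080) -> Performance, 1:4
print(render_resolution(3840, 2160, 1 / 2))   # (2715, 1527) -> Balanced, 1:2
# Hypothetical frame times: 25 ms native, 12 ms at low res, 2 ms reconstruction.
print(round(net_uplift(25.0, 12.0, 2.0), 2))  # 1.79
```

The reconstruction cost term is why the net uplift is always smaller than the raw saving from rendering fewer pixels: with zero reconstruction cost, the hypothetical example above would yield 25/12, or about 2.08x, instead of about 1.79x.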

DOWNLOAD: NVIDIA GeForce 445.75 Game Ready Drivers with DLSS 2.0