- Joined: Dec 9, 2009
- Messages: 646 (0.12/day)
- Location: South East, United Kingdom

| System Name | Swag |
|---|---|
| Processor | Intel Xeon E3110 4GHz |
| Motherboard | GIGABYTE GA-G31M-ES2L |
| Cooling | Scythe Infinity I Push-Pull w/ AS5 |
| Memory | 2x2GB Transcend 800MHz DDR2 @ 890MHz 1.9v |
| Video Card(s) | Palit nVIDIA GTX460 1GB 800/1600/2000 |
| Storage | Crucial M4 64GB |
| Display(s) | LG Flatron M1917TM 1280x1024 @ 60Hz |
| Audio Device(s) | Realtek HD Audio |
| Power Supply | OCZ StealthXStream 2 600W |
| Software | Windows 7 Professional 64-bit |

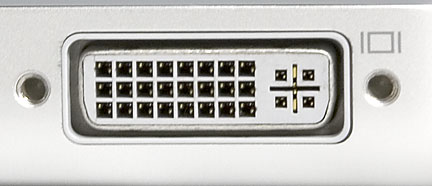

Basically, I have a monitor that accepts both VGA and DVI input, but I only have a VGA cable... and I only have a card with DVI outputs (this is a lol situation).

I also have a DVI-to-VGA adapter, so I plug it into the graphics card, connect my VGA cable to the adapter, and plug the other end of the VGA cable into the monitor.

But the monitor does not detect any active signal and goes straight into power-save mode.

However, if I put in an old card with a VGA output and use the same VGA cable with the same monitor, I get a picture.

The graphics card isn't the issue: that old card (an X1300) also has a DVI output, and when I use the adapter on that output, the monitor again shows nothing. It isn't the adapter either, because I've tried several different ones and none of them give a display.

I'm trying to get this sorted so I can put in my spare 7950GT and use that instead... but it only has two DVI outputs. (Yes, I have tried both of them, before you ask.)

So what exactly is the problem here? Thanks in advance.
