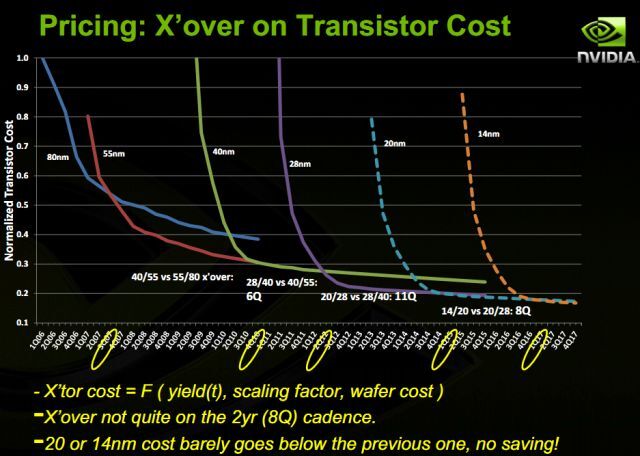

NVIDIA is planning to launch its next high-performance single-GPU graphics cards, the GeForce GTX 880 and GTX 870, no later than Q4-2014, around October or November, according to a SweClockers report. The two will be based on the brand-new "GM204" silicon, which most reports suggest is built on the existing 28 nm silicon fab process. Delays by NVIDIA's principal foundry partner, TSMC, in implementing its next-generation 20 nm process have reportedly forced the company to design a new breed of "Maxwell"-based GPUs on the existing 28 nm process. The architecture's good showing in efficiency on the GeForce GTX 750 series probably gave NVIDIA hope. Once 20 nm matures, it wouldn't surprise us if NVIDIA optically shrinks these chips to the new process, as it did with the G92 (from 65 nm to 55 nm). The GM204 chip is rumored to feature 3,200 CUDA cores, 200 TMUs, 32 ROPs, and a 256-bit wide GDDR5 memory interface. It succeeds the company's current workhorse chip, the GK104.

View at TechPowerUp Main Site

(especially if Nv does the pricing "à la nVidia")