

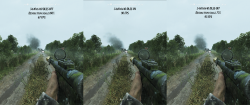

Today's Battlefield V update brings numerous improvements to the game, including long-awaited support for NVIDIA's DLSS technology. We took a closer look at image quality with DLSS on and off and measured its impact on performance.
