
Recently a wave of these GPUs hit the market, and there are active efforts to get them working. If you have one of these cards and you've found this thread, congratulations: you're in the right spot.

More detailed information about the card can be found here. The short version: it's 2x Navi 12 with 2x 8GB HBM2 memory, primarily used by AWS for virtualized GPU use cases. It is similar to an RX 5600M (or so the Linux drivers say).

AWS has support pages for installing the drivers, but you must be an AWS customer and set up the proper permissions. Downloading should be covered by the free trial, but be warned that this is very likely not allowed by their terms and agreements. I am not a lawyer, so please behave yourself. A word of advice: you do NOT need to use their AMI instances to get the drivers. A normal Windows PowerShell session will work. I assume the same holds true for Linux, but I have not tried it. I do not recommend installing these on your main machine. Alternatively, the direct links listed for each driver should work and skip the entire signup process.


- Windows https://docs.aws.amazon.com/AWSEC2/latest/WindowsGuide/install-amd-driver.html

- Linux https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/install-amd-driver.html

- Windows Server 2019

  - 22.10.01.12-220930a-384126e-WHQLCert_.zip

  - SHA-1: 55D4B265BA2EEF6FC56FDEA58A82C1663DF2F23C

  - https://s3.amazonaws.com/ec2-amd-windows-drivers/latest/22.10.01.12-220930a-384126e-WHQLCert_.zip

- Windows Server 2016

  - Windows_2019_2016-20.10.25.01-201109a-361679C-WHQL.zip

  - SHA-1: 910c4b4e68e84ed8beef454b2f4d2d8424558ef1

  - https://s3.amazonaws.com/ec2-amd-wi...019_2016-20.10.25.01-201109a-361679C-WHQL.zip

- Archive branch

  - 20.10.25.04-210414a-365562C-V520-SN1000-WHQLCert.zip

  - SHA-1: 30b2d121f5b91be0c1302280cc2be67213839675

  - https://s3.amazonaws.com/ec2-amd-wi...5.04-210414a-365562C-V520-SN1000-WHQLCert.zip

- A link from the LTT forums. I can't verify whether it has been tampered with. If it goes down, I'll mirror it and replace the URL below

- Wayback Machine links for the AWS-provided drivers

- Linux

  - Pending, because that's a lot to go through

  - The official driver package from AWS hasn't been saved yet. If anyone wants to link that, give me a ping

  - The official amdgpu driver package appears to work

- PCI Memory Controller

  - Switchtec_PFX_68xG4_V17.13.48.672

  - SHA-1: 2D0F4EE8B7F3D22AA10555101648ED00DF4D81E1

  - https://dlcdnets.asus.com/pub/ASUS/...68xG4_V17.13.48.672.zip?model=RS720-E11-RS12U
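Since several of these files now come from mirrors and archives, it is worth checking a download against the SHA-1 listed above before installing it. A minimal sketch, assuming the file was saved under its original name; the helper names `sha1_of` and `verify` are made up for illustration, and only the three AWS zip names and hashes from this post are included:

```python
import hashlib
import sys
from pathlib import Path

# File names and SHA-1 values copied from the driver list in this post.
# Hex digests are case-insensitive, so mixed case is fine.
KNOWN_SHA1 = {
    "22.10.01.12-220930a-384126e-WHQLCert_.zip":
        "55D4B265BA2EEF6FC56FDEA58A82C1663DF2F23C",
    "Windows_2019_2016-20.10.25.01-201109a-361679C-WHQL.zip":
        "910c4b4e68e84ed8beef454b2f4d2d8424558ef1",
    "20.10.25.04-210414a-365562C-V520-SN1000-WHQLCert.zip":
        "30b2d121f5b91be0c1302280cc2be67213839675",
}


def sha1_of(path, chunk_size=1 << 20):
    """Return the lowercase SHA-1 hex digest of a file, read in chunks."""
    digest = hashlib.sha1()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify(path, expected_hex):
    """True if the file's SHA-1 equals expected_hex, ignoring case."""
    return sha1_of(path) == expected_hex.strip().lower()


if __name__ == "__main__":
    # Usage: python verify_driver.py <path-to-downloaded-zip>
    target = Path(sys.argv[1])
    expected = KNOWN_SHA1.get(target.name)
    if expected is None:
        print(f"No known hash for {target.name}")
    else:
        print("OK" if verify(target, expected) else "MISMATCH, do not install")
```

A matching hash only shows the file is the same one that was hashed for this post; it says nothing about whether that file is safe.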

- GPU: PCI\VEN_1002&DEV_7360&REV_C1

- Driver: PCI\VEN_1002&DEV_7362&REV_C3

- PCI Memory Controller: PCI\VEN_11F8&DEV_4052&REV_00
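To make the mismatch between the card and the driver concrete, the hardware ID strings above can be split into their fields and compared. This is only an illustrative sketch: `parse_hwid` and `differing_fields` are hypothetical helpers, and the pattern handles just the `VEN`/`DEV`/`REV` form shown in this post, not every ID format Windows can report.

```python
import re

# Matches IDs of the form PCI\VEN_xxxx&DEV_xxxx with an optional &REV_xx.
HWID_PATTERN = re.compile(
    r"^PCI\\VEN_([0-9A-F]{4})&DEV_([0-9A-F]{4})(?:&REV_([0-9A-F]{2}))?$",
    re.IGNORECASE,
)

# Values copied from the list in this post.
GPU_ID = r"PCI\VEN_1002&DEV_7360&REV_C1"
DRIVER_ID = r"PCI\VEN_1002&DEV_7362&REV_C3"


def parse_hwid(hwid):
    """Split a PCI hardware ID string into vendor, device and revision."""
    match = HWID_PATTERN.match(hwid.strip())
    if match is None:
        raise ValueError(f"unrecognised hardware ID: {hwid!r}")
    vendor, device, rev = match.groups()
    return {
        "vendor": vendor.upper(),
        "device": device.upper(),
        "rev": rev.upper() if rev else None,
    }


def differing_fields(id_a, id_b):
    """Return the names of the fields where two hardware IDs disagree."""
    a, b = parse_hwid(id_a), parse_hwid(id_b)
    return [key for key in ("vendor", "device", "rev") if a[key] != b[key]]


if __name__ == "__main__":
    print(differing_fields(GPU_ID, DRIVER_ID))
```

For the two IDs above, the vendor matches and the device and revision fields differ, which fits with the drivers needing a manual install.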

- The VBIOS on each GPU is different, so please take note of this

- Flashing is a pain and also not recommended

29-1-2025

- Added Wayback Machine URLs I made over a year ago, since the original AWS link seems dead now

11-2-2024

- mczkrt shared their work getting it working under Proxmox

- Instructions can be found here https://www.techpowerup.com/forums/threads/amd-radeon-pro-v540-research-thread.308894/post-5365983

2-2-2024

- It works on Linux successfully thanks to mczkrt

- Their instructions are here https://www.techpowerup.com/forums/threads/amd-radeon-pro-v540-research-thread.308894/post-5190516

- A blower fan mount has been created by @Catch2223 https://www.techpowerup.com/forums/threads/amd-radeon-pro-v540-research-thread.308894/post-5148240

- There are 2 fans available for use with this mount

  - 1.8A, so it needs extra effort to power https://www.digikey.com/en/products/detail/sanyo-denki-america-inc/9BMB12G201/6192091

  - 0.9A, so it should work off fan headers https://www.digikey.com/en/products/detail/sanyo-denki-america-inc/9BMB12P2F01/6192093
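The difference between the two fans comes down to whether the rated current fits what a motherboard fan header can supply. A rough sketch of that check, assuming a 1.0A per-header limit; that figure is a common rating but an assumption here, so check your own board's manual. The `fits_header` helper is made up for illustration:

```python
# Assumed per-header current limit in amps. NOT from this thread; many boards
# rate their headers around 1A, but check the motherboard manual.
FAN_HEADER_LIMIT_A = 1.0

# Rated currents as quoted in this post for the two blower fans.
FANS_A = {
    "9BMB12G201": 1.8,
    "9BMB12P2F01": 0.9,
}


def fits_header(current_a, limit_a=FAN_HEADER_LIMIT_A):
    """True if the fan's rated current is within the header's limit."""
    return current_a <= limit_a


if __name__ == "__main__":
    for part, amps in FANS_A.items():
        verdict = "fan header OK" if fits_header(amps) else "needs separate power"
        print(f"{part}: {amps}A -> {verdict}")
```

Under that assumption the 0.9A fan fits and the 1.8A fan does not, which lines up with the notes above.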

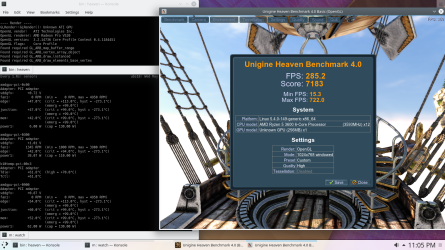

- ONE successful graphical workload so far: https://www.techpowerup.com/forums/threads/amd-radeon-pro-v540-research-thread.308894/post-5024727

- Display output does not work (some have had partial success using other drivers, but nothing usable)

- MxGPU V520 drivers will install on each individual GPU on the card, but only when installed manually. They do not install automatically

- The card runs exceptionally hot and needs a lot more cooling than expected, even in high-airflow setups. I burned my fingers on it, so please be careful when it gets hot

- Linux has very long boot times and, depending on a number of factors, tends to break or fail to work properly. The kernel supports this card, but it may need some special sauce

- It has been tried in a Mac, with no success

- The Windows driver's .inf, when viewed in a text editor, says Navi 10 and does not exactly match the card's Vendor/Device ID (see above)

- We have tried some PCI bus drivers Amernine had around. None worked, but they did install, so it's kinda close. Once installed, all 3 devices (2 GPUs and the controller) become unusable with error codes 43 and 31

- AMDGPIO_PciBus 20.50.0.0000 seemed to install on the PCI Memory Controller without issue. Perhaps others might?

- Virtualization efforts have not gone well either, but more success was had there vs bare metal

- Windows 11 Enterprise seems to take the drivers better than Windows Server 2019 for some reason
