- Joined

- May 22, 2015

- Messages

- 14,421 (3.88/day)

| Processor | Intel i5-12600k |
|---|---|
| Motherboard | Asus H670 TUF |
| Cooling | Arctic Freezer 34 |
| Memory | 2x16GB DDR4 3600 G.Skill Ripjaws V |
| Video Card(s) | EVGA GTX 1060 SC |
| Storage | 500GB Samsung 970 EVO, 500GB Samsung 850 EVO, 1TB Crucial MX300 and 2TB Crucial MX500 |
| Display(s) | Dell U3219Q + HP ZR24w |
| Case | Raijintek Thetis |
| Audio Device(s) | Audioquest Dragonfly Red :D |
| Power Supply | Seasonic 620W M12 |
| Mouse | Logitech G502 Proteus Core |
| Keyboard | G.Skill KM780R |
| Software | Arch Linux + Win10 |

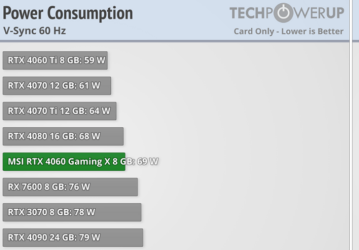

I was gonna say... Well, it's what I've been trying to say lol: these OT results aren't exactly out of line with what we already know, it's just that TPU doesn't test low load at framerates higher than 60 (yet).

More efficient than RDNA3 you mean.

I'd also like to point out that people frequently ask me "why do you always recommend 4060/Ti over 6700 XT" or say "muh 8 GB VRAM" (how's that working out now that the 16 GB variant shows no performance difference?). This kind of chart is a big reason.

Twice or even three times the energy efficiency in frame-capped games (of which there are many locked at 60 FPS, e.g. Bethesda games or certain console ports), or at other low loads, isn't irrelevant. People take 100% load efficiency and raw raster performance and compare the options, but there are a lot more states the GPU will be in, often more frequently. Another thing to remember is that TPU tests with maxed-out CPU hardware; most people aren't rocking a 13900K, and I doubt their GPU is pegged at 100% all the time.
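To put a number on "efficiency at a frame cap": with the framerate fixed, energy per frame is just average board power divided by FPS, so the efficiency ratio between two cards is the ratio of their power draw. A rough sketch follows; the function names and the wattages in the usage line are placeholders I made up for illustration, not measurements from the review.

```python
def joules_per_frame(avg_power_watts: float, fps: float) -> float:
    """Energy spent per rendered frame (watts are joules per second)."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return avg_power_watts / fps


def efficiency_ratio(power_a_watts: float, power_b_watts: float, fps: float) -> float:
    """How many times more efficient card A is than card B at the same frame cap."""
    return joules_per_frame(power_b_watts, fps) / joules_per_frame(power_a_watts, fps)


if __name__ == "__main__":
    # Hypothetical draws at a 60 FPS cap, NOT taken from the charts.
    print(efficiency_ratio(power_a_watts=60.0, power_b_watts=180.0, fps=60.0))
```

Because both cards render the same 60 frames each second, the FPS cancels out and only the power draw at that load matters, which is why the 100% load numbers don't tell you much here.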

It's a whole other factor if you want to start comparing RT efficiency too, due to the dedicated hardware used in NV cards.

4070 and 6900 XT are roughly comparable in both price and performance; the 6900 XT is 5-10% faster in raster, but 20% slower in RT.

Yet one peaks at 636 W, 130 W higher than a 4090, and the other at 235 W.

View attachment 305650

I really appreciated it when you guys started adding these; I always felt running with uncapped FPS is a waste of electricity.

People are really, really bad at figuring costs over time. And this is not only about GPUs. Our brain somehow puts most of the weight on the upfront cost and seemingly refuses to go past that.

Yup, saving some money on the GPU only to spend more on the PSU and the electricity bill sounds good to some people, I guess.
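For anyone who wants to actually do the cost-over-time math instead of eyeballing it, here's a minimal sketch. Everything fed into it (average power gap, hours per day, price per kWh, price difference between the cards) is an assumption you'd fill in yourself; the values in the usage line are placeholders, not data from this thread. Use average draw under your real workload, not peak spikes.

```python
def running_cost(avg_watts: float, hours_per_day: float,
                 price_per_kwh: float, years: float) -> float:
    """Electricity cost of drawing avg_watts for hours_per_day over the given years."""
    kwh = avg_watts / 1000 * hours_per_day * 365 * years
    return kwh * price_per_kwh


def break_even_years(price_gap: float, watt_gap: float,
                     hours_per_day: float, price_per_kwh: float) -> float:
    """Years until a pricier but more frugal card pays back its price premium."""
    yearly_saving = running_cost(watt_gap, hours_per_day, price_per_kwh, 1)
    if yearly_saving <= 0:
        return float("inf")  # no power saving means it never pays back
    return price_gap / yearly_saving


if __name__ == "__main__":
    # Placeholder inputs: 100 W average gap, 3 h/day, 0.30 per kWh, 50 price premium.
    print(round(break_even_years(50.0, 100.0, 3.0, 0.30), 2), "years to break even")
```

This ignores the PSU upgrade some cards force on you, which only moves the break-even point further in favor of the lower-power option.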
