
Earlier this month, MSI's PR team dished out a presentation claiming that Gigabyte was misleading buyers into thinking that as many as 40 of its recently launched motherboards were "Ready for Native PCIe Gen.3". MSI tried to make its argument plausible by explaining exactly what goes into making a Gen 3-ready motherboard. The presentation caused quite a stir in the comments. Gigabyte responded with a presentation of its own, in which it counter-claimed that those making the accusations ignored some key details, such as "what if the Ivy Bridge CPU is wired to the first PCIe slot (lane switches won't matter)?"

In its short presentation of no more than five slides, Gigabyte tried to explain its claim that most of its new motherboards are Gen 3-ready. The presentation begins with a diplomatic-sounding statement of its agenda, followed by a disclaimer that three of its recently launched boards, the Z68X-UD7-B3, P67A-UD7, and P67A-UD7-B3, are not Gen 3-ready. This is probably because those boards use a Gen 2 NVIDIA nForce 200 bridge chip, to which even the first PCI-E x16 slot is wired.

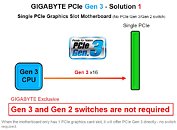

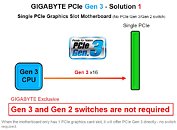

The next slide appears to form the core of Gigabyte's rebuttal: in motherboards with just one PCI-E x16 slot, there is no switching circuitry between the CPU's PCI-E root complex and the slot, so PCI-E Gen 3 will work.

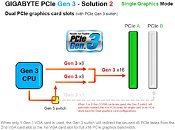

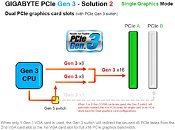

The following slides explain that in motherboards with more than one PCI-Express x16 slot wired to the CPU, a Gen 3 switch redirects the eight unused PCIe lanes from the second slot to the first slot, giving a single graphics card the full x16 bandwidth. However, MSI had already established that, barring the G1.Sniper 2, none of Gigabyte's boards with more than one PCI-E x16 slot has Gen 3 switches.

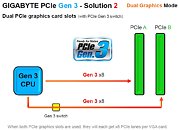

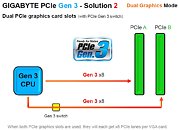

Likewise, the following slides explained how Gen 3 switches handle cases in which more than one graphics card is wired to the CPU. Again, we'd like to point out that, barring the G1.Sniper 2, none of Gigabyte's 40 "Ready for Native PCIe Gen.3" boards has Gen 3 switches.
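The slot-wiring scenarios in these slides can be sketched as a toy model of a board whose CPU provides 16 PCIe lanes. Everything here is a simplifying assumption for illustration: the `slot_links` function and `Link` type are hypothetical, lanes 0-7 of the first slot are assumed to run straight to the CPU while lanes 8-15 pass through a lane switch, and both cards are assumed to be the same generation. For a switch rated below the card, the model simply encodes the outcome Gigabyte's last slide claims (Gen 3 on the eight direct lanes); it is not derived from any board's schematics.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class Link:
    width: int  # electrical lane count
    gen: int    # PCIe generation the link runs at


def slot_links(
    switch_gen: Optional[int],
    second_card: bool = False,
    card_gen: int = 3,
    cpu_gen: int = 3,
) -> Tuple[Link, Optional[Link]]:
    """Return (slot 1 link, slot 2 link or None) for a hypothetical board.

    switch_gen=None models a single-slot board: all 16 lanes run straight
    from the CPU to slot 1. Otherwise, lanes 0-7 of slot 1 are direct and
    lanes 8-15 pass through a lane switch rated for `switch_gen`.
    """
    direct_gen = min(cpu_gen, card_gen)  # nothing between CPU and card
    if switch_gen is None:
        if second_card:
            raise ValueError("single-slot board has no second slot")
        return Link(16, direct_gen), None

    switched_gen = min(direct_gen, switch_gen)  # the switch caps the rate
    if second_card:
        # x8/x8: slot 2's eight lanes all go through the switch
        return Link(8, direct_gen), Link(8, switched_gen)
    if switch_gen >= direct_gen:
        # Switch routes slot 2's idle lanes to slot 1 for a full x16 link
        return Link(16, direct_gen), None
    # Switch too slow for the card: keep the card's generation on the
    # eight direct lanes only (the outcome Gigabyte's last slide describes)
    return Link(8, direct_gen), None
```

For example, `slot_links(3)` models a board with Gen 3 switches and one card (x16 at Gen 3), while `slot_links(2, second_card=True)` models a Gen 2-switched board running two Gen 3 cards, where only the first slot keeps Gen 3.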

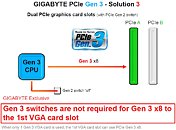

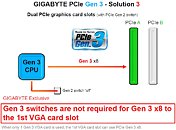

The last slide, however, does rebut MSI's argument. Even on motherboards with Gen 2 switches, a Gen 3 graphics card can run in Gen 3 mode in the first slot, albeit at an electrical x8 link width, because those eight lanes are wired directly to the CPU and never pass through a switch. Sure, it's not going to give you PCI-Express 3.0 x16, and sure, it will only work for one graphics card, but from a purely logical point of view it adequately validates Gigabyte's "Ready for Native PCIe Gen.3" claim.
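To put "electrical x8" in perspective, the raw link arithmetic is easy to sketch. This assumes the published PCIe signaling rates (5 GT/s with 8b/10b encoding for Gen 2, 8 GT/s with 128b/130b encoding for Gen 3) and ignores packet and protocol overhead, so real-world throughput is somewhat lower. The `link_bandwidth_gbs` helper is hypothetical.

```python
# Per-lane signaling rate (GT/s) and line-encoding efficiency per generation.
# Gen 2: 5 GT/s with 8b/10b; Gen 3: 8 GT/s with 128b/130b.
ENCODING = {2: (5.0, 8 / 10), 3: (8.0, 128 / 130)}


def link_bandwidth_gbs(gen: int, lanes: int) -> float:
    """Usable one-direction bandwidth in GB/s, ignoring packet overhead."""
    gt_per_s, efficiency = ENCODING[gen]
    return gt_per_s * efficiency * lanes / 8  # 8 bits per byte


for gen, lanes in [(3, 16), (3, 8), (2, 16)]:
    print(f"PCIe Gen {gen} x{lanes}: {link_bandwidth_gbs(gen, lanes):.2f} GB/s")
```

This prints roughly 15.75 GB/s for Gen 3 x16, 7.88 GB/s for Gen 3 x8 and 8.00 GB/s for Gen 2 x16: a Gen 3 card at x8 gets about the same link bandwidth as a Gen 2 x16 slot, or about half of what Gen 3 x16 offers.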

Now that Gigabyte has entered the debate, the onus is on it to also clarify that, apart from the switching argument, all its "Ready for Native PCIe Gen.3" boards have Gen 3-compliant electrical components, which one of MSI's slides claimed Gigabyte's boards lack.

