
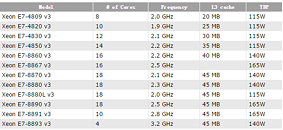

Intel's biggest enterprise CPU silicon based on its "Haswell" micro-architecture, the Haswell-EX, is a silicon monstrosity, according to its specs. Built on the 22 nm silicon fabrication process, the top-spec variant of the chip physically features 18 cores (36 logical CPUs with Hyper-Threading enabled), 45 MB of L3 cache, a DDR4 IMC, and a TDP as high as 165 W. Intel will use this chip to build its next-gen Xeon E7 v3 family, which includes 8-core, 10-core, 12-core, 14-core, 16-core, and 18-core models, with 2P-only and 4P-capable variants spanning the E7-4000 and E7-8000 families. Clock speeds range between 1.90 GHz and 3.20 GHz.

View at TechPowerUp Main Site

Seriously, these CPUs would be massive overkill for me and just about anyone, but I'd most likely buy a 16-core CPU anyway.