Later today (20th August), NVIDIA will formally unveil its GeForce RTX 2000 series consumer graphics cards. This marks a major change in the brand name, triggered by the introduction of the new RT cores, specialized components that accelerate real-time ray-tracing, a task too taxing for conventional CUDA cores. Ray-tracing and DNN acceleration require SIMD components to crunch 4x4x4 matrix multiplication, which is what RT cores (and tensor cores) specialize in. The chips still have CUDA cores for everything else. This generation also debuts the new GDDR6 memory standard, although unlike GeForce "Pascal," the new GeForce "Turing" won't see a doubling in memory sizes.
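To give a rough idea of what a "4x4x4" matrix operation involves, here is a minimal Python sketch. It assumes the term refers to multiplying two 4x4 matrices and adding the result to a third 4x4 accumulator, which works out to 4 x 4 x 4 = 64 multiply-add steps. The function name and the sample matrices are made up for illustration, and the dedicated hardware would do this work in parallel rather than in a loop.

```python
def matmul_accumulate_4x4(a, b, c):
    """Return (d, fma_count) where d = a * b + c for 4x4 matrices.

    Matrices are plain lists of lists. This is a scalar reference loop,
    not a model of how the hardware schedules the work.
    """
    n = 4
    # Start from a copy of the accumulator matrix c.
    d = [[c[i][j] for j in range(n)] for i in range(n)]
    fma_count = 0
    for i in range(n):          # output row
        for j in range(n):      # output column
            for k in range(n):  # dot-product index
                d[i][j] += a[i][k] * b[k][j]
                fma_count += 1  # one multiply-add per innermost step
    return d, fma_count


# Hypothetical sample inputs: identity times all-ones, plus all-ones.
identity = [[1 if i == j else 0 for j in range(4)] for i in range(4)]
ones = [[1] * 4 for _ in range(4)]

d, fma_count = matmul_accumulate_4x4(identity, ones, ones)
print(fma_count)
print(d[0])
```

The three nested loops of length four are where the "4x4x4" figure comes from under this reading.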

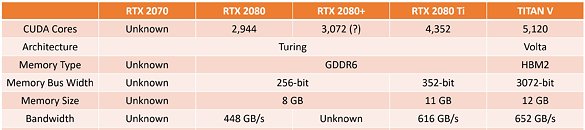

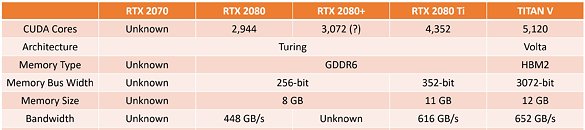

NVIDIA is expected to debut the generation with the new GeForce RTX 2080 later today, with market availability by the end of the month. Going by older rumors, the company could launch the lower RTX 2070 and higher RTX 2080+ by late September, and the mid-range RTX 2060 series in October. Apparently the high-end RTX 2080 Ti could come out sooner than expected, given that VideoCardz already has some of its specifications in hand. Not a lot is known about how "Turing" compares with "Volta" in performance, but given that the TITAN V comes with tensor cores that can [in theory] be re-purposed as RT cores, it could continue on as NVIDIA's halo SKU for the client segment.

The RTX 2080 and RTX 2070 series will be based on the new GT104 "Turing" silicon, which physically has 3,072 CUDA cores and a 256-bit wide GDDR6-capable memory interface. The RTX 2080 Ti is based on the larger GT102 chip. Although the maximum number of CUDA cores on this chip is unknown, the RTX 2080 Ti is reportedly endowed with 4,352 of them, and has a 352-bit memory interface, slightly narrower than what the chip is capable of (384-bit). As we mentioned earlier, NVIDIA doesn't seem to be doubling memory amounts, and so we could expect 8 GB for the RTX 2070/2080 series, and 11 GB for the RTX 2080 Ti.
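The expected memory amounts follow from the bus widths with some simple arithmetic. The sketch below assumes each GDDR6 chip sits on a 32-bit slice of the memory interface and holds 8 Gbit (1 GB). Both figures are assumptions used for illustration rather than confirmed specifications of these cards, and the function name is hypothetical.

```python
GDDR6_CHIP_BUS_BITS = 32  # assumption: one GDDR6 chip per 32 bits of bus
CHIP_CAPACITY_GB = 1      # assumption: 8 Gbit (1 GB) memory chips


def memory_config(bus_width_bits):
    """Return (chip_count, total_gb) for a given memory bus width."""
    chips = bus_width_bits // GDDR6_CHIP_BUS_BITS
    return chips, chips * CHIP_CAPACITY_GB


# Bus widths taken from the rumored specifications above.
for label, bus in [("RTX 2070/2080 (256-bit)", 256),
                   ("RTX 2080 Ti (352-bit)", 352),
                   ("Full GT102 (384-bit)", 384)]:
    chips, total_gb = memory_config(bus)
    print(f"{label}: {chips} chips, {total_gb} GB")
```

Under these assumptions, a 256-bit bus gives 8 chips and 8 GB, while 352-bit gives 11 chips and 11 GB, which matches the rumored amounts. A full 384-bit configuration would come to 12 GB.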

View at TechPowerUp Main Site
