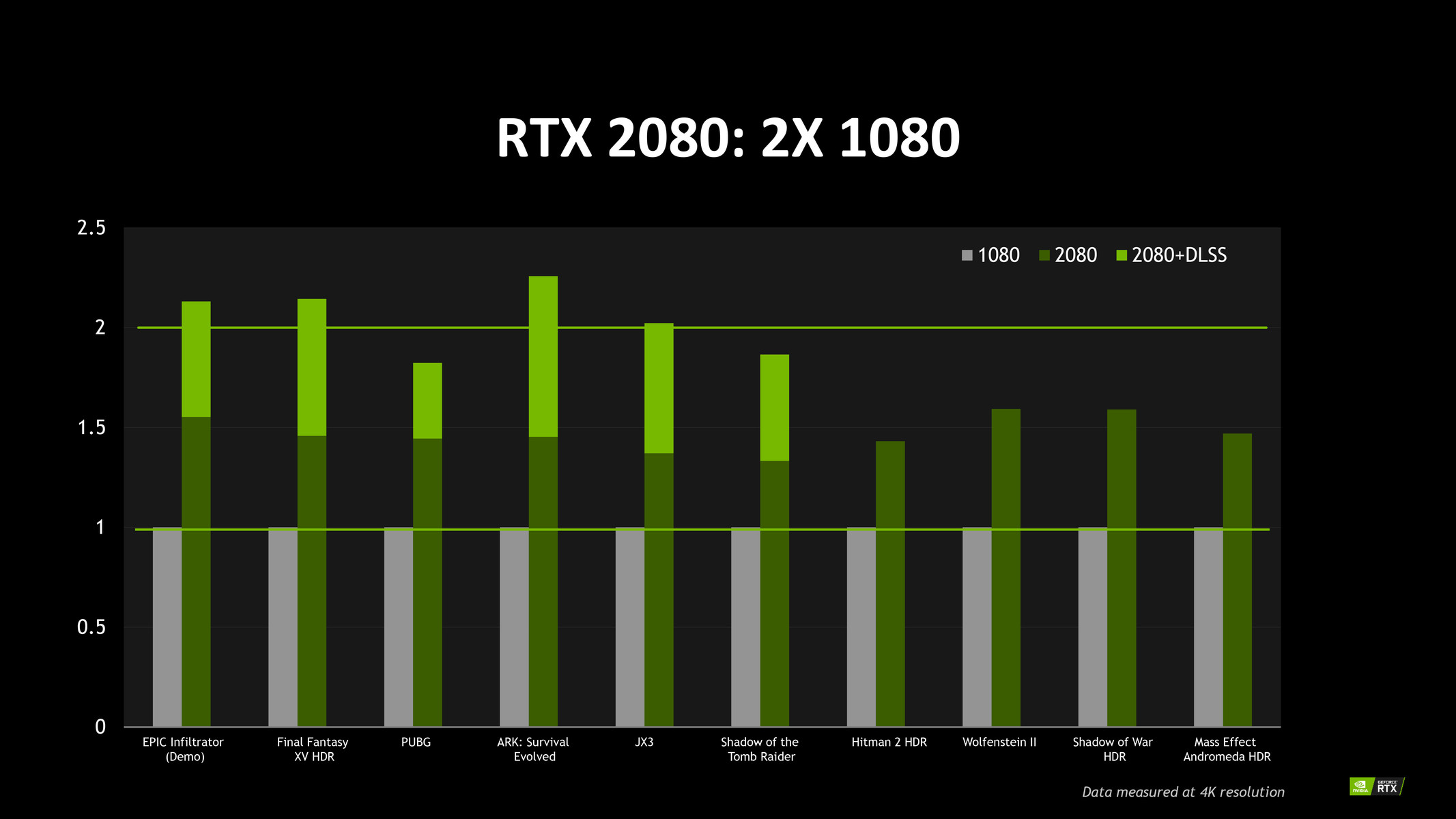

The GeForce RTX 2080 Ti is indeed based on an ASIC codenamed "TU102." NVIDIA was referring to this 775 mm² chip when it quoted the 18.5 billion-transistor count in its keynote. The company also provided a breakdown of its various "cores" and a block diagram. The GPU is still laid out like its predecessors, but each of the 72 streaming multiprocessors (SMs) packs RT cores and Tensor cores in addition to CUDA cores.

The TU102 features six GPCs (graphics processing clusters), each with 12 SMs. Each SM packs 64 CUDA cores, 8 Tensor cores, and 1 RT core, and each GPC has six geometry units. The GPU also has 288 TMUs and 96 ROPs. The TU102 has a 384-bit wide GDDR6 memory interface that supports 14 Gbps memory. There are also two NVLink channels, which NVIDIA plans to launch later as its next-generation multi-GPU technology.
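The per-unit counts above multiply out to the full-chip totals. The short Python sketch below just does that arithmetic, using only the figures quoted in this post; the function and constant names are made up for illustration, and the bandwidth line assumes the usual bus width × per-pin data rate ÷ 8 formula. These are numbers for a fully enabled TU102, so a product built on the chip may ship with some units disabled.

```python
# Per-unit figures quoted in the post for a fully enabled TU102.
# All names here are illustrative, not NVIDIA terminology.
GPCS = 6
SMS_PER_GPC = 12
CUDA_PER_SM = 64
TENSOR_PER_SM = 8
RT_PER_SM = 1
GEOMETRY_UNITS_PER_GPC = 6
BUS_WIDTH_BITS = 384
DATA_RATE_GBPS = 14  # GDDR6 data rate per pin


def tu102_totals():
    """Multiply the per-GPC and per-SM counts out to chip-wide totals."""
    sms = GPCS * SMS_PER_GPC
    return {
        "sms": sms,
        "cuda_cores": sms * CUDA_PER_SM,
        "tensor_cores": sms * TENSOR_PER_SM,
        "rt_cores": sms * RT_PER_SM,
        "geometry_units": GPCS * GEOMETRY_UNITS_PER_GPC,
        # Assumed formula: bits per transfer * Gbps per pin / 8 bits per byte.
        "bandwidth_gb_s": BUS_WIDTH_BITS * DATA_RATE_GBPS / 8,
    }


if __name__ == "__main__":
    for name, value in tu102_totals().items():
        print(f"{name}: {value}")
```

The SM total works out to the 72 mentioned earlier, and dividing the quoted 288 TMUs by that figure gives four TMUs per SM.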

View at TechPowerUp Main Site
