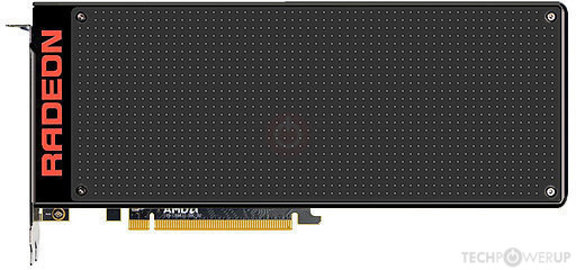

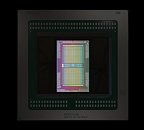

AMD today announced the Radeon Pro Vega II and Pro Vega II Duo graphics cards, which debut with the new Apple Mac Pro workstation. Based on an enhanced 32 GB variant of the 7 nm "Vega 20" MCM, the Radeon Pro Vega II maxes out its GPU silicon, with 4,096 stream processors, a 1.70 GHz peak engine clock, 32 GB of HBM2 memory across a 4096-bit interface, and 1 TB/s of memory bandwidth. The card features both PCI-Express 3.0 x16 and Infinity Fabric interfaces. As its name suggests, the Pro Vega II is designed for professional workloads, and comes with certifications for nearly all professional content creation applications.
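As a rough sanity check on those headline numbers, the sketch below works out the per-pin memory data rate and peak FP32 throughput they imply. It assumes "1 TB/s" means roughly 1000 GB/s and that each stream processor retires one fused multiply-add (2 FLOPs) per clock; the function names are made up for illustration, and neither derived figure appears in the announcement itself.

```python
def per_pin_rate_gbps(bandwidth_gb_s: float, bus_width_bits: int) -> float:
    """Per-pin data rate (Gbit/s) implied by total bandwidth and bus width."""
    # GB/s -> Gbit/s, then spread evenly across every data pin on the bus.
    return bandwidth_gb_s * 8 / bus_width_bits


def peak_fp32_tflops(stream_processors: int, clock_ghz: float) -> float:
    """Peak FP32 TFLOPS, assuming 2 FLOPs (one FMA) per stream processor per clock."""
    return stream_processors * 2 * clock_ghz / 1000


# Figures quoted in the announcement; 1 TB/s is ASSUMED to be ~1000 GB/s.
pin_rate = per_pin_rate_gbps(1000, 4096)
fp32 = peak_fp32_tflops(4096, 1.70)

print(f"Implied HBM2 data rate: {pin_rate:.2f} Gbit/s per pin")
print(f"Implied peak FP32:      {fp32:.1f} TFLOPS")
```

Under those assumptions the memory runs at just under 2 Gbit/s per pin and the GPU peaks at roughly 14 TFLOPS single-precision.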

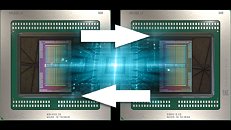

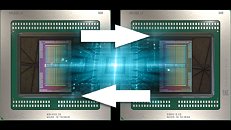

The Radeon Pro Vega II Duo is the first dual-GPU graphics card from AMD in ages. Purpose-built for the Mac Pro (and available on the Apple workstation only), this card puts two fully unlocked "Vega 20" MCMs, each with 32 GB of HBM2 memory, on a single PCB. The card uses a bridge chip to connect the two GPUs to the system bus, and additionally has an 84.5 GB/s Infinity Fabric link running between the two GPUs for rapid memory access, GPU and memory virtualization, and interoperability between the two GPUs, bypassing the host system bus. In addition to certifications for every conceivable content creation suite for the macOS platform, AMD added heavy optimization for the Metal 3D graphics API. For now, the two graphics cards are only available as options for the Apple Mac Pro. The single-GPU Pro Vega II may see standalone product availability later this year, but the Pro Vega II Duo will remain a Mac Pro exclusive.
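To put the 84.5 GB/s GPU-to-GPU link in context, here is a quick back-of-the-envelope comparison against the theoretical bandwidth of a PCI-Express 3.0 x16 slot (8 GT/s per lane with 128b/130b encoding). The announcement does not say whether 84.5 GB/s is per direction or aggregate; this sketch assumes it is directly comparable to the per-direction PCIe figure, so treat the ratio as indicative only. The helper name is hypothetical.

```python
def pcie_bandwidth_gb_s(gt_per_s: float, lanes: int, encoding: float) -> float:
    """Theoretical one-direction PCIe bandwidth in GB/s."""
    # Each transfer carries one bit per lane; the encoding factor removes line-code overhead.
    return gt_per_s * encoding * lanes / 8


# PCIe 3.0: 8 GT/s per lane, 128b/130b encoding, 16 lanes.
PCIE3_X16_GB_S = pcie_bandwidth_gb_s(8.0, 16, 128 / 130)

# Link speed quoted in the announcement (ASSUMED comparable to the figure above).
INFINITY_FABRIC_GB_S = 84.5

ratio = INFINITY_FABRIC_GB_S / PCIE3_X16_GB_S
print(f"PCIe 3.0 x16: {PCIE3_X16_GB_S:.2f} GB/s per direction")
print(f"GPU-to-GPU link is about {ratio:.1f}x that figure")
```

On those assumptions, the link between the two GPUs is a little over five times faster than going out over the host slot, which is why traffic between them bypasses the system bus.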

View at TechPowerUp Main Site
