
AMD Ryzen 3000 "Matisse" processors are multi-chip modules built from two kinds of dies: one or two 7 nm 8-core "Zen 2" CPU chiplets, and an I/O controller die that packs the processor's dual-channel DDR4 memory controller, PCI-Express gen 4.0 root complex, and an integrated southbridge that puts out some SoC I/O, such as two SATA 6 Gbps ports, four USB 3.1 Gen 2 ports, LPCIO (ISA), and SPI (for the UEFI BIOS ROM chip). It was earlier reported that while the "Zen 2" CPU chiplets are built on a 7 nm process, the I/O controller is 14 nm. We now have confirmation that the I/O controller die is built on the more advanced 12 nm process, likely GlobalFoundries 12LP. This is the same process on which AMD builds its "Pinnacle Ridge" and "Polaris 30" chips. The 7 nm "Zen 2" CPU chiplets are made at TSMC.

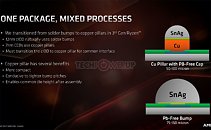

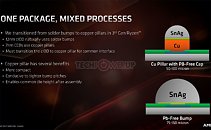

AMD also provided fascinating technical insight into the making of the "Matisse" MCM, particularly getting three highly complex dies under the IHS of a mainstream-desktop processor package, and perfectly aligning the three for pin-compatibility with older generations of Ryzen AM4 processors that use monolithic dies, such as "Pinnacle Ridge" and "Raven Ridge." AMD developed new 50 µm copper-pillar bumps for the 8-core CPU chiplets, while leaving the I/O controller die with normal 75 µm solder bumps. Unlike its GPUs, which need high-density wiring between the GPU die and HBM stacks, AMD could make do without a silicon interposer or TSVs (through-silicon vias) to connect the three dies on "Matisse." The fiberglass substrate is now "fattened" up to 12 layers, both to facilitate the inter-die wiring and to make sure every connection reaches the correct pin on the µPGA.

View at TechPowerUp Main Site
