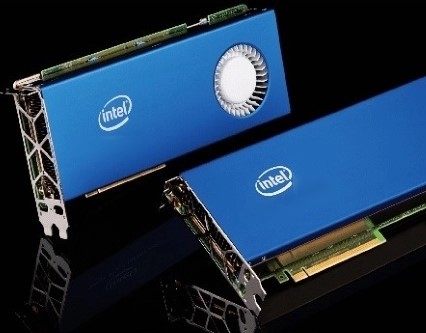

Intel is working hard to bring its first discrete GPU lineup to market successfully, after years of earlier efforts to launch such a lineup ended in failure. During its Q3 earnings call, Intel CEO Bob Swan shared some exciting news: "This quarter we've achieved power-on exit for our first discrete GPU DG1, an important milestone." By "power-on exit," Mr. Swan refers to the post-silicon debug stage, in which a prototype chip is mounted on a custom PCB and tested to see whether it boots and works. With a successful test, Intel now has a working product capable of running real-world workloads and software, one that is almost ready for sale.

Additionally, the developer kit for the "DG1" graphics card is reportedly being sent to developers around the world, according to Eurasian Economic Commission listings. Called the "Discrete Graphics DG1 External FRD1 Accessory Kit (Alpha) Developer Kit," this bundle is marked as a prototype in the alpha stage, suggesting that the launch of discrete Xe GPUs is only a few months away. This supports the previous rumor that Xe GPUs will launch in mid-2020, possibly in the July/August time frame.

View at TechPowerUp Main Site

RIGHT SIDE UP!