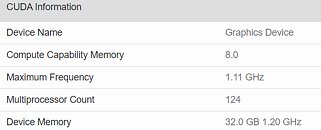

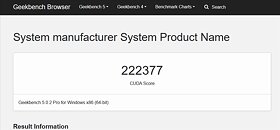

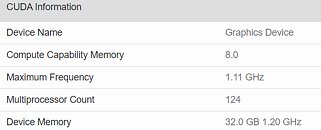

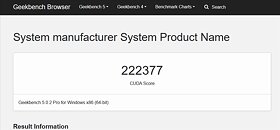

(Update, March 4th: Another NVIDIA graphics card has been discovered in the Geekbench database, this one featuring a total of 124 CUs. That would amount to 7,936 CUDA cores if NVIDIA keeps the same 64 CUDA cores per CU, though this ratio has changed in the past, as when NVIDIA halved the number of CUDA cores per CU from Pascal to Turing. The 124 CU graphics card is clocked at 1.1 GHz and features 32 GB of HBM2e, delivering a score of 222,377 points in the Geekbench benchmark. We again stress that these may be just engineering samples with conservative clocks, and that final performance could be even higher.)

NVIDIA is expected to launch its next-generation Ampere lineup of GPUs during the GPU Technology Conference (GTC), which runs from March 22nd to March 26th. Just a few weeks before the release of these new GPUs, Geekbench 5 compute scores measuring the OpenCL performance of unknown GPUs, which we assume are part of the Ampere lineup, have appeared. Thanks to Twitter user "_rogame" (@_rogame), who found the Geekbench database entries, we have some information about the CUDA core configuration, memory, and performance of the upcoming cards.

In the database, there are two unnamed GPUs. The first has 7,552 CUDA cores running at 1.11 GHz. Equipped with 24 GB of VRAM of an unknown type, it is configured with 118 Compute Units (CUs) and scores an impressive 184,096 points in the OpenCL test. Compared to something like a V100, which scores 142,837 in the same test, that is an improvement of roughly 29%. Next up, we have a GPU with 6,912 CUDA cores running at 1.01 GHz and featuring 47 GB of VRAM. This is the less powerful model, with 108 CUs and a score of 141,654 in the OpenCL test. Both memory configurations look odd: 24 GB for the more powerful model and 47 GB (which should be 48 GB) for the weaker one. The results are not recent, as they date back to October and November, so these may be engineering samples, and the clock speeds and memory configurations might change before launch.
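For anyone who wants to check the arithmetic, here is a minimal Python sketch that reproduces the core counts and the comparison against the V100. It assumes 64 CUDA cores per CU, which is the article's supposition and not something NVIDIA has confirmed; the function and variable names are made up for illustration.

```python
# Assumption: 64 CUDA cores per compute unit, as the article supposes.
CORES_PER_CU = 64

# Geekbench 5 OpenCL score for the V100, as quoted in the article.
V100_SCORE = 142_837

# Leaked entries: label -> (compute units, OpenCL score), taken from the article.
cards = {
    "124 CU": (124, 222_377),
    "118 CU": (118, 184_096),
    "108 CU": (108, 141_654),
}


def cuda_cores(compute_units, cores_per_cu=CORES_PER_CU):
    """Estimate the CUDA core count from the reported compute units."""
    return compute_units * cores_per_cu


def gain_over(score, baseline):
    """Relative difference of score versus baseline, in percent."""
    return (score / baseline - 1) * 100


for name, (cus, score) in cards.items():
    cores = cuda_cores(cus)
    gain = gain_over(score, V100_SCORE)
    print(f"{name}: {cores} CUDA cores, {gain:+.1f}% vs V100")
```

If the real cores-per-CU ratio turns out to be different, changing `CORES_PER_CU` is enough to rescale every core count; the score comparison does not depend on it.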

View at TechPowerUp Main Site

