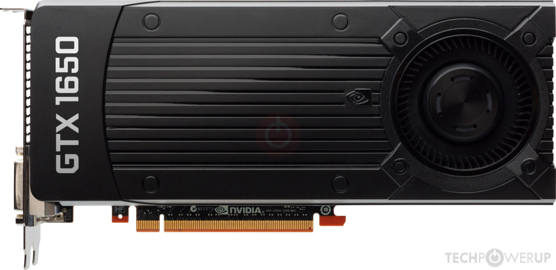

NVIDIA board partners carving GeForce RTX 20-series and GTX 16-series SKUs out of ASICs those SKUs weren't originally based on is becoming more common, but GALAX has taken things a step further. The company just launched a GeForce GTX 1650 (GDDR6) graphics card based on the "TU106" silicon (ASIC code: TU106-125-A1). GALAX carved a GTX 1650 out of this chip by disabling all of its RT cores, all of its tensor cores, and a whopping 61% of its CUDA cores, along with corresponding reductions in TMU and ROP counts. The memory bus width has been halved from 256-bit to 128-bit.
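As a quick sanity check on the 61% figure, here is a small worked calculation. It assumes NVIDIA's published configurations, which the article itself does not state: a full TU106 die with 36 SMs and a GTX 1650 with 14 SMs, both at 64 CUDA cores per SM.

```python
# Assumed SM counts (NVIDIA published specs, not given in the article):
# full TU106 = 36 SMs, GTX 1650 = 14 SMs, 64 CUDA cores per SM on Turing.
CORES_PER_SM = 64
tu106_cores = 36 * CORES_PER_SM    # 2304 CUDA cores on the full die
gtx1650_cores = 14 * CORES_PER_SM  # 896 CUDA cores left enabled

# Fraction of the die's CUDA cores that has been switched off
disabled = 1 - gtx1650_cores / tu106_cores
print(f"{gtx1650_cores}/{tu106_cores} cores active, {disabled:.1%} disabled")
# prints "896/2304 cores active, 61.1% disabled"
```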

The card, however, is listed only by the Chinese regional arm of GALAX. Its marketing name is "GALAX GeForce GTX 1650 Ultra," with "Ultra" being a GALAX brand extension rather than an NVIDIA SKU (i.e. the GPU isn't called "GTX 1650 Ultra"). The card's clock speeds are identical to those of the original TU117-based GTX 1650: 1410 MHz base, 1590 MHz GPU Boost, and 12 Gbps (GDDR6-effective) memory.
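For context, the 12 Gbps memory data rate and the 128-bit bus together set the card's theoretical peak memory bandwidth. A minimal sketch of that arithmetic follows; the helper name `memory_bandwidth_gb_s` is hypothetical, and the result is a peak figure, not a measured one.

```python
def memory_bandwidth_gb_s(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Hypothetical helper: peak bandwidth in GB/s.

    Per-pin data rate (Gbps) times bus width (bits), divided by 8 bits per byte.
    """
    return data_rate_gbps * bus_width_bits / 8


# 12 Gbps effective GDDR6 on the 128-bit bus quoted for this card
print(memory_bandwidth_gb_s(12, 128))  # prints 192.0
```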

View at TechPowerUp Main Site
