If your Intel Comet Lake furnace and your Nvidia Ampere furnace are both pulling maximum amperes at the same time, you may very well need an 850-watt power supply. The 660-watt PSUs that I've used for most of my family's PCs over the past decade might not be adequate for that load.
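
A rough way to see where a figure like 850 watts comes from: add up the peak draws and divide by the fraction of the PSU's rating you're comfortable running at. Here is a minimal Python sketch under stated assumptions. The wattages are hypothetical placeholders, not published figures for any Comet Lake or Ampere part. The 75 W allowance for the rest of the system and the 80% load ceiling are rule-of-thumb assumptions. `recommended_psu_watts`, `smallest_psu_size` and the candidate size list are names made up for this example.

```python
# Rough PSU sizing sketch. Every wattage used here is a hypothetical
# placeholder, not a measured or published figure for a real CPU or GPU.

# Hypothetical list of PSU ratings to choose from (660 W matches the
# 660-watt units mentioned above; the rest are common retail sizes).
CANDIDATE_SIZES_W = [550, 650, 660, 750, 850, 1000]


def recommended_psu_watts(cpu_w: float, gpu_w: float,
                          rest_w: float = 75.0,
                          max_load_fraction: float = 0.8) -> float:
    """Return the PSU rating needed so that the combined peak draw of the
    CPU, GPU, and everything else stays at or below max_load_fraction
    of the PSU's rated output (an assumed rule of thumb)."""
    if not 0 < max_load_fraction <= 1:
        raise ValueError("max_load_fraction must be in (0, 1]")
    peak_w = cpu_w + gpu_w + rest_w
    return peak_w / max_load_fraction


def smallest_psu_size(required_w: float) -> int:
    """Pick the smallest candidate rating that meets required_w."""
    for size in sorted(CANDIDATE_SIZES_W):
        if size >= required_w:
            return size
    raise ValueError(f"{required_w:.0f} W exceeds every candidate size")


if __name__ == "__main__":
    # Both hypothetical "furnaces" at full tilt at the same time.
    need = recommended_psu_watts(cpu_w=250, gpu_w=320)
    print(f"{need:.0f} W needed -> {smallest_psu_size(need)} W PSU")
```

With those made-up numbers, a 250 W CPU plus a 320 W GPU comes to about 806 W, which rounds up to an 850 W unit. A 200 W CPU plus a 250 W GPU still fits within a 660 W unit.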

What this means for me is that I will swap my Focus PX-850 power supply into the new PC I'm building to house a yet-to-be-released graphics card, and I'll put the spare SS-660XP2 Platinum in the old PC, since it "only" has to power a Vega 64.

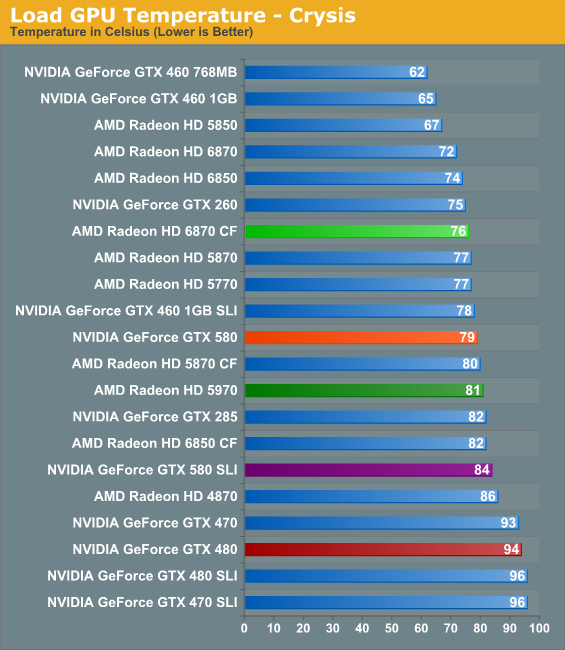

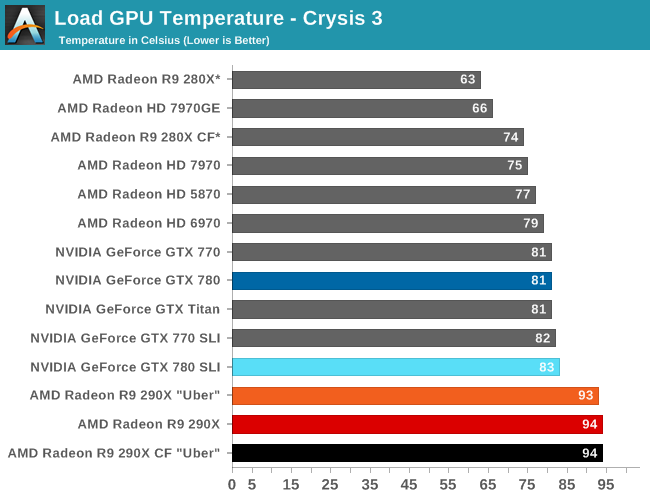

I'm willing to pay for the extra electricity for the graphics card and the air conditioning while gaming. I am much more concerned about getting adequate case ventilation to handle that much heat from a graphics card without resorting to dustbuster noise levels.

Going forward, I will also revise my usual PSU recommendation, the Prime PX-750, which has the same compact 140 mm depth as the entire Focus series. The Prime PX-850 and PX-1000 are 30 mm deeper.

It would be great if all the malcontents here admitted that they are complaining because they want the new cards to be bad, since that fits their story and state of mind.