AMD is developing its own x86 hybrid processor technology, modeled on the Arm big.LITTLE hybrid CPU core topology that also inspired Intel's hybrid processors. Under this approach, the processor has two kinds of CPU cores with very different performance/Watt bands: one kind focuses on performance and remains dormant under mild processing loads, while the other handles most lightweight processing loads that don't require powerful cores. This is easier said than done, as the two kinds of cores feature significantly different CPU core microarchitectures and instruction sets.

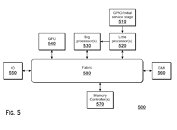

AMD has filed a patent describing a method for switching workloads between the two CPU core types on the fly. Unlike homogeneous CPU core designs, where the workload from one core is seamlessly picked up by another over a victim cache like the L3, the handover between the two core types involves some additional logic. According to the patent application, in an AMD hybrid processor, the two CPU core types are interfaced over the processor's main switching fabric, and not a victim cache, much in the same way as the CPU cores and integrated GPU are separated in current-gen AMD APUs.

According to the patent application, AMD's CPU core type switching logic is dictated by a number of factors, such as the CPU utilization of the low-power core, its memory utilization, the need for instruction sets found only on the performance core, and machine architecture states. The patent also briefly references a power-management mechanism that saves system power by gating the two core types based on utilization. Power savings are the primary objective of any big.LITTLE topology.
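To make the switching factors above more concrete, here is a minimal Python sketch of how such a decision could look. It is purely illustrative and not AMD's method: the names `CoreSample`, `should_migrate_to_big`, and `core_to_gate` are hypothetical, the thresholds are made-up numbers (no figures are given above), and machine architecture states are not modeled at all.

```python
from dataclasses import dataclass
from enum import Enum


class CoreType(Enum):
    LITTLE = "low-power"
    BIG = "performance"


@dataclass
class CoreSample:
    """Hypothetical snapshot of the low-power core running a task."""
    cpu_utilization: float     # fraction of core time busy, 0.0 to 1.0
    memory_utilization: float  # fraction of memory bandwidth in use, 0.0 to 1.0
    needs_big_only_isa: bool   # task needs an instruction only the big core has


# Assumed thresholds for illustration only.
CPU_THRESHOLD = 0.80
MEM_THRESHOLD = 0.75


def should_migrate_to_big(sample: CoreSample) -> bool:
    """Return True if the task should move from the low-power core to the performance core."""
    # An unsupported instruction forces a migration regardless of load.
    if sample.needs_big_only_isa:
        return True
    # Otherwise migrate only when the low-power core is running hot.
    return (sample.cpu_utilization > CPU_THRESHOLD
            or sample.memory_utilization > MEM_THRESHOLD)


def core_to_gate(active: CoreType) -> CoreType:
    """Return the core type that can be power-gated while `active` runs the task."""
    return CoreType.BIG if active is CoreType.LITTLE else CoreType.LITTLE


if __name__ == "__main__":
    light = CoreSample(cpu_utilization=0.20, memory_utilization=0.10, needs_big_only_isa=False)
    heavy = CoreSample(cpu_utilization=0.95, memory_utilization=0.40, needs_big_only_isa=False)
    print(should_migrate_to_big(light))
    print(should_migrate_to_big(heavy))
    print(core_to_gate(CoreType.LITTLE).value)
```

In this sketch, a light task stays on the low-power core and the performance core is the one gated, which matches the power-saving goal described above. A real implementation would live in hardware or firmware and would also have to transfer architectural state between the cores.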

The patent description can be accessed here.

View at TechPowerUp Main Site
