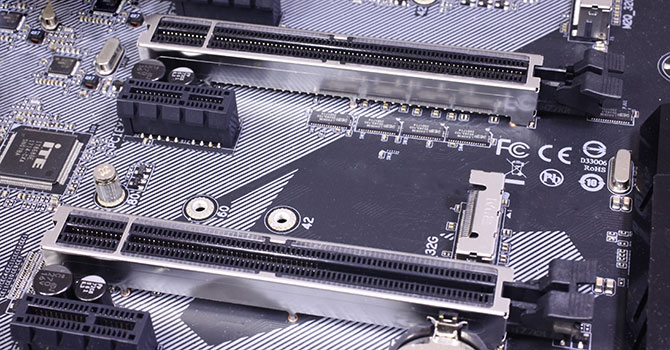

Previously, we assumed that the AMD 4700S desktop kit was based on the Xbox Series X APU. Today, however, Bodnara managed to get access to one of these units and made some interesting discoveries. The chip powering the system is actually the PlayStation 5 SoC, which is built on the AMD Zen 2 architecture and has 8 cores and 16 threads with boost clocks of up to 3.2 GHz. The tested board features SK Hynix GDDR6 memory running at 14 Gbps, placed on the back side of the board. The APU is paired with the AMD A77E Fusion Controller Hub (FCH), the same chip that accompanied the Xbox One "Durango" SoC, which is what initially led us to believe that the AMD 4700S was derived from an Xbox Series X system.

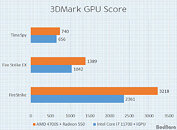

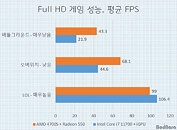

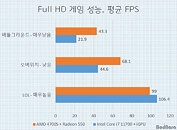

The APU's integrated graphics are disabled, even though they are the same RDNA 2 GPU variant used in the PlayStation 5. Out of the box, the system comes with a discrete Radeon 550 GPU, and this is the configuration the Bodnara team tested. You can find images of the system and some performance results below.

Performance: