- Joined: Oct 9, 2007
- Messages: 47,886 (7.38/day)
- Location: Dublin, Ireland

| System Name | RBMK-1000 |
|---|---|
| Processor | AMD Ryzen 7 5700G |
| Motherboard | Gigabyte B550 AORUS Elite V2 |
| Cooling | DeepCool Gammax L240 V2 |
| Memory | 2x 16GB DDR4-3200 |
| Video Card(s) | Galax RTX 4070 Ti EX |
| Storage | Samsung 990 1TB |
| Display(s) | BenQ 1440p 60 Hz 27-inch |
| Case | Corsair Carbide 100R |
| Audio Device(s) | ASUS SupremeFX S1220A |
| Power Supply | Cooler Master MWE Gold 650W |
| Mouse | ASUS ROG Strix Impact |
| Keyboard | Gamdias Hermes E2 |
| Software | Windows 11 Pro |

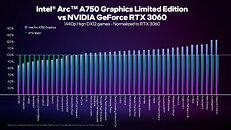

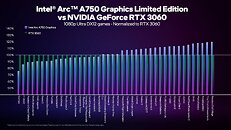

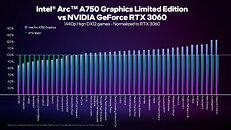

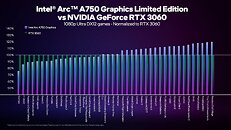

Earlier this week, Intel released its own performance numbers for as many as 50 benchmarks spanning the DirectX 12 and Vulkan APIs. In our own testing, the Arc A380 fell behind its rivals in games based on the DirectX 11 API. Intel tested the A750 at 1080p and 1440p, and compared its performance numbers with those of the NVIDIA GeForce RTX 3060. Broadly, the testing shows the A750 to be 3% faster than the RTX 3060 in DirectX 12 titles at 1080p and about 5% faster at 1440p; in Vulkan titles, it is about 4% faster at 1080p and about 5% faster at 1440p.

All testing was done without ray tracing, and performance enhancements such as XeSS or DLSS weren't used. The small set of 6 Vulkan API titles shows a more consistent performance lead for the A750 over the RTX 3060, whereas the DirectX 12 API titles see the two trade blows, with results varying widely among game engines. In "Dolmen," for example, the RTX 3060 scores 347 FPS compared to the Arc's 263. In "Resident Evil VIII," the Arc scores 160 FPS compared to the GeForce's 133 FPS. Such variations among the titles pull up the average in favor of the Intel card. Intel stated that the A750 is on course to launch "later this year," without being any more specific than that. The individual test results can be seen below.
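To put the two quoted results on the same footing as the percentage leads above, here is a minimal sketch that converts the FPS pairs from the post into a relative lead for the Arc A750. The helper name `relative_lead` and the `results` layout are made up for illustration, and the post does not say how Intel aggregated its per-title numbers, so this covers only the per-title arithmetic.

```python
# FPS figures quoted in the post, as (Arc A750, RTX 3060).
results = {
    "Dolmen": (263, 347),
    "Resident Evil VIII": (160, 133),
}


def relative_lead(arc_fps: float, rtx_fps: float) -> float:
    """Percentage by which the Arc leads (positive) or trails (negative) the RTX 3060."""
    return (arc_fps / rtx_fps - 1) * 100


# Hypothetical helper output: one signed percentage per title.
leads = {title: relative_lead(arc, rtx) for title, (arc, rtx) in results.items()}

for title, lead in leads.items():
    print(f"{title}: {lead:+.1f}%")
```

With the numbers quoted above, the Arc comes out roughly 24% behind in "Dolmen" and roughly 20% ahead in "Resident Evil VIII," which shows how far individual titles can sit from the single-digit averages.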

The testing notes and configuration follow.

View at TechPowerUp Main Site | Source

I think even if DX11 performance is behind the competition, it's still gonna be enough for my needs. I had a 5700 XT (with the weak cooler mount from Asus), and now I have both a 6500 XT and a 6400, so it can't be that bad.
