TheLostSwede

News Editor


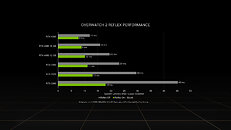

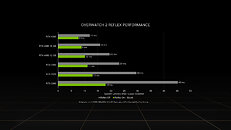

Instead of yet another leak of performance numbers for NVIDIA's upcoming RTX 4090 and 4080 cards, the company itself has shared some performance figures for the cards in Overwatch 2. According to NVIDIA, Blizzard had to raise the framerate cap in Overwatch 2 to 600 FPS, as the new cards were simply too fast for the previous 400 FPS cap. NVIDIA also claims that enabling Reflex reduces system latency by up to 60 percent.

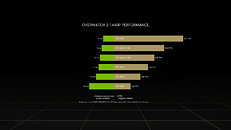

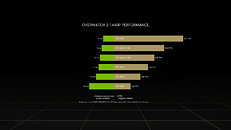

As for the performance numbers, the RTX 4090 managed 507 FPS at 1440p in Overwatch 2 at the Ultra graphics quality setting, paired with an Intel Core i9-12900K CPU. The RTX 4080 16 GB managed 368 FPS, with the RTX 4080 12 GB coming in at 296 FPS. The comparison to the previous-generation cards seems a bit unfair, as the highest-end card included is an unspecified RTX 3080 that managed 249 FPS. This is followed by an RTX 3070 at 195 FPS and an RTX 3060 at 122 FPS. NVIDIA recommends an RTX 4080 16 GB if you want to play Overwatch 2 at 1440p at 360 FPS or more, and an RTX 3080 Ti for 1080p at 360 FPS or more.
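To put the quoted figures in perspective, here is a rough Python sketch that converts each FPS number into a frame time and a ratio against the RTX 3080 result. The FPS values are the ones NVIDIA quoted above; the helper names `frame_time_ms` and `relative_to` are made up for illustration, and the output says nothing about how NVIDIA ran its tests.

```python
# FPS figures as quoted by NVIDIA (Overwatch 2, 1440p, Ultra, Core i9-12900K).
fps = {
    "RTX 4090": 507,
    "RTX 4080 16 GB": 368,
    "RTX 4080 12 GB": 296,
    "RTX 3080": 249,
    "RTX 3070": 195,
    "RTX 3060": 122,
}


def frame_time_ms(frames_per_second):
    """Average time per frame in milliseconds (hypothetical helper)."""
    return 1000.0 / frames_per_second


def relative_to(baseline, card):
    """How many times faster `card` is than `baseline` (hypothetical helper)."""
    return fps[card] / fps[baseline]


for card, value in fps.items():
    print(
        f"{card:15s} {value:4d} FPS  "
        f"{frame_time_ms(value):5.2f} ms/frame  "
        f"{relative_to('RTX 3080', card):.2f}x vs RTX 3080"
    )
```

By these numbers the RTX 4090 lands at roughly twice the RTX 3080 result, while the RTX 4080 12 GB is less than 20 percent ahead of it.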

View at TechPowerUp Main Site | Source
