
| System Name | The de-ploughminator Mk-III |
|---|---|
| Processor | 9800X3D |
| Motherboard | Gigabyte X870E Aorus Master |
| Cooling | DeepCool AK620 |
| Memory | 2x32GB G.Skill 6400 MT/s CL32 |
| Video Card(s) | Asus Astral 5090 LC OC |
| Storage | 4TB Samsung 990 Pro |
| Display(s) | 48" LG OLED C4 |
| Case | Corsair 5000D Air |
| Audio Device(s) | KEF LSX II LT speakers + KEF KC62 Subwoofer |
| Power Supply | Corsair HX1200 |
| Mouse | Razer DeathAdder V3 |
| Keyboard | Razer Huntsman V3 Pro TKL |
| Software | Windows 11 |

User error. Don't try to stick an RTX 4090 in a small, cramped case, and make sure the power connector is plugged in all the way.

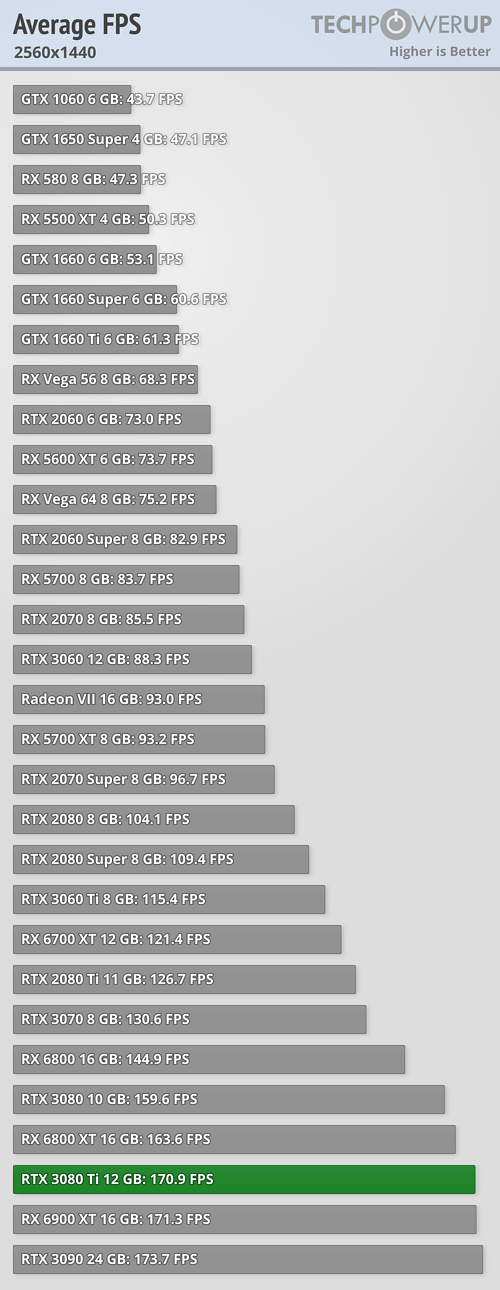

Any guesses on where the RTX 4070 Ti will place on this list after Wizard's review of that card?

Current US prices for the RTX 3080 Ti 12GB via PCPartPicker: https://pcpartpicker.com/search/?q=RTX+3080+Ti

I'll take a guess. The 4070 Ti will:

- roughly match the 3090 Ti at 1440p
- land about 5% behind the 3090 Ti at 4K
- top the efficiency chart

I have a laptop with a dual-core Celeron (that's basically an Atom) and 4 GB of RAM. It's OK for light browsing.
